Correlation charts: Connect the dots between site speed and business success

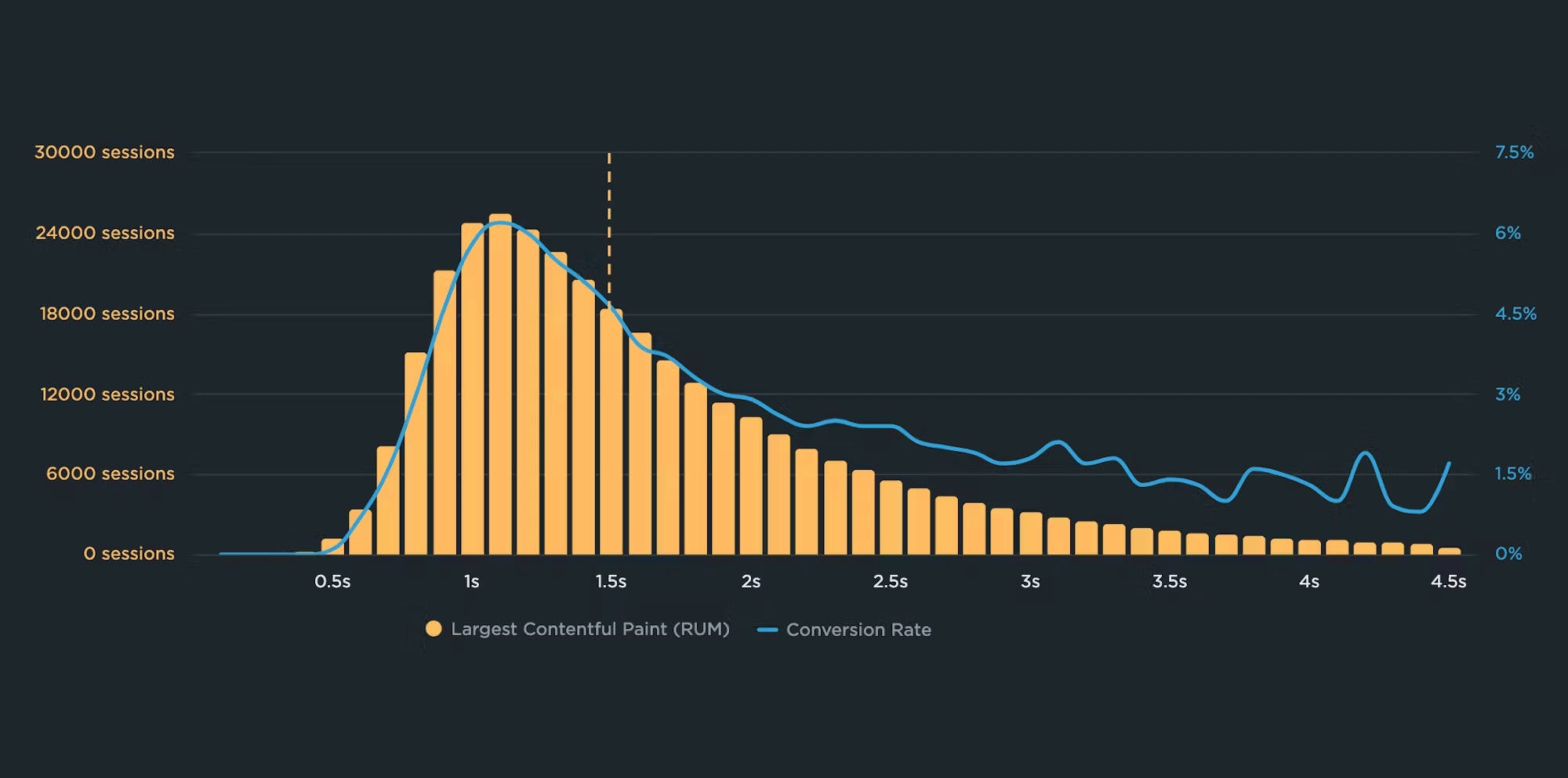

If you could measure the impact of site speed on your business, how valuable would that be for you? Say hello to correlation charts – your new best friend.

Here's the truth: The business folks in your organization probably don't care about page speed metrics. But that doesn't mean they don't care about page speed. It just means you need to talk with them using metrics they already care about – such as conversion rate, revenue, and bounce rate.

That's why correlation charts are your new best friend.
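As a rough illustration of what sits behind a correlation chart, here is a minimal TypeScript sketch. It assumes you can export per-session records that contain a page speed metric and a conversion flag. The `Session` shape, its field names, and the one-second bucket width are hypothetical and not taken from any particular monitoring tool.

```typescript
// Hypothetical session record: field names are assumptions for illustration.
interface Session {
  loadTimeMs: number; // a page speed metric, e.g. Largest Contentful Paint
  converted: boolean; // whether the session ended in a conversion
}

interface Bucket {
  bucketStartMs: number;  // lower bound of the load-time bucket
  sessions: number;       // number of sessions that fell into the bucket
  conversionRate: number; // conversions / sessions, between 0 and 1
}

// Group sessions into fixed-width load-time buckets and compute the
// conversion rate for each one. Plotting conversionRate against
// bucketStartMs gives a basic correlation chart.
function correlate(sessions: Session[], bucketWidthMs: number = 1000): Bucket[] {
  const counts: Record<number, { total: number; converted: number }> = {};
  for (const s of sessions) {
    const start = Math.floor(s.loadTimeMs / bucketWidthMs) * bucketWidthMs;
    if (!counts[start]) {
      counts[start] = { total: 0, converted: 0 };
    }
    counts[start].total += 1;
    if (s.converted) {
      counts[start].converted += 1;
    }
  }
  return Object.keys(counts)
    .map(Number)
    .sort((a, b) => a - b)
    .map((start) => ({
      bucketStartMs: start,
      sessions: counts[start].total,
      conversionRate: counts[start].converted / counts[start].total,
    }));
}
```

Calling `correlate(sessions)` returns one row per load-time bucket, ordered from fastest to slowest. Swapping `converted` for a bounce flag would chart bounce rate the same way.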

Downtime vs slowtime: Which costs you more?

Comparing site outages to page slowdowns is like comparing a tire blowout to a slow leak. One is big and dramatic. The other is quiet and insidious. Either way, you end up stranded on the side of the road.

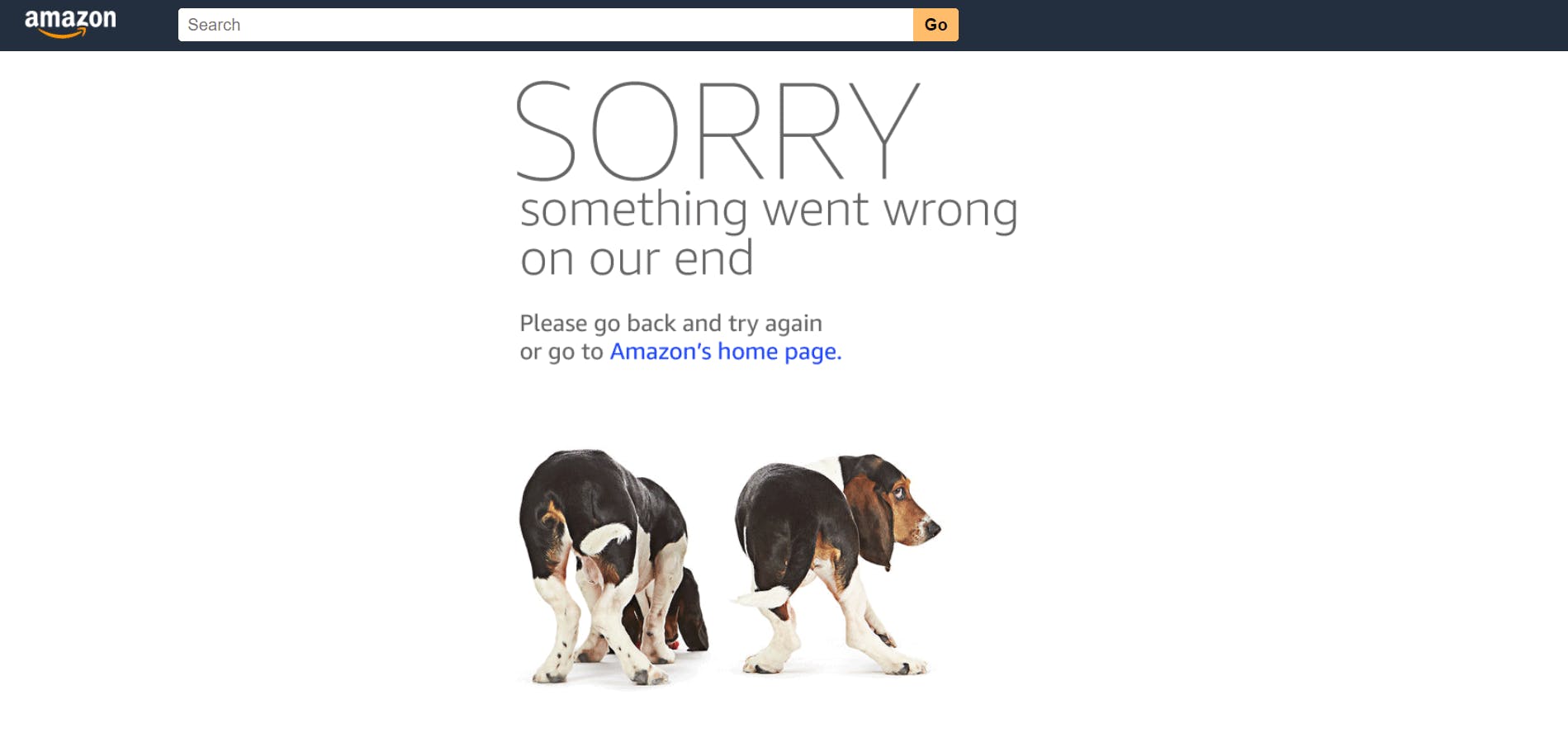

Downtime is horrifying for any company that uses the web as a vital part of its business (which is to say, most companies). Some of you may remember the Amazon outage of 2013, when the retail behemoth went down for 40 minutes. The incident made headlines, largely because those 40 minutes were estimated to have cost the company $5 million in lost sales.

Downtime makes headlines:

- 2015 – 12-hour Apple outage cost the company $25 million

- 2016 – 5-hour outage caused an estimated loss of $150 million for Delta Air Lines

- 2019 – 14-hour outage cost Facebook an estimated $90 million

It's easy to see why these stories capture our attention. These are big numbers! No company wants to think about losing millions in revenue due to an outage.

Page slowdowns can cause as much damage as downtime

While Amazon and other big players take pains to avoid outages, these companies also go to great effort to manage the day-to-day performance – in terms of page speed and user experience – of their sites. That’s because these companies know that page slowdowns can cause at least as much damage as downtime.

Six things that slow down your site's UX (and why you have no control over them)

Have you ever looked at the page speed metrics – such as Start Render and Largest Contentful Paint – for your site in both your synthetic and real user monitoring tools and wondered "Why are these numbers so different?"

Photo by Freepik

Part of the answer is this: You have a lot of control over the design and code for the pages on your site, plus a decent amount of control over the first and middle mile of the network your pages travel over. But when it comes to the last mile – or more specifically, the last few feet – matters are no longer in your hands.

Your synthetic testing tool shows you how your pages perform in a clean lab environment, using variables – such as browser, connection type, even CPU power – that you've selected.

Your real user monitoring (RUM) tool shows you how your pages perform out in the real world, where they're affected by a myriad of variables that are completely outside your control.

In this post we'll review a handful of those performance-leaching culprits that are outside your control – and that can add precious seconds to the amount of time it takes for your pages to render for your users. Then we'll talk about how to use your monitoring tools to understand how real people experience your site.

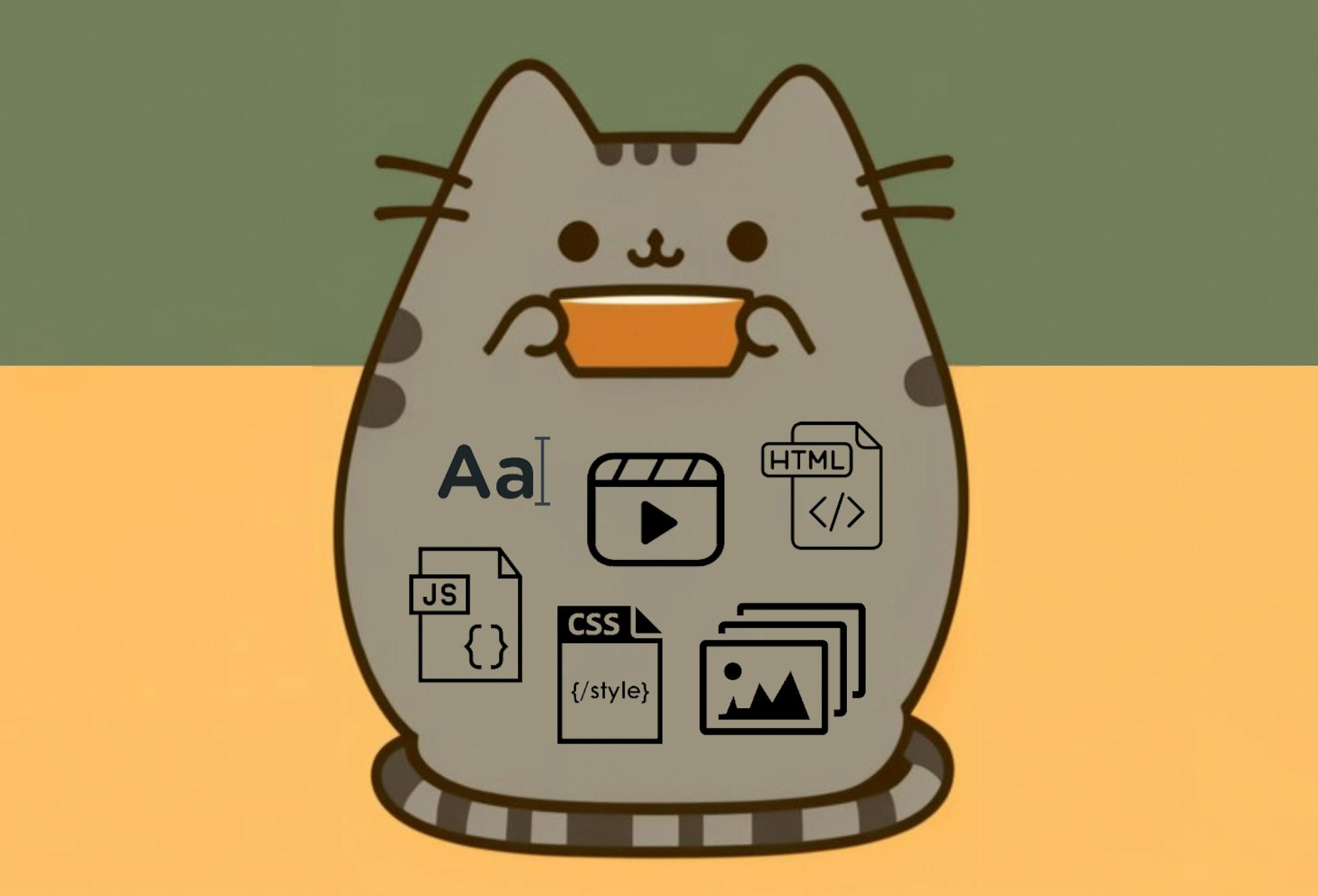

Page bloat update: How does ever-increasing page size affect your business and your users?

The median web page is 8% bigger than it was just one year ago. How does this affect your page speed, your Core Web Vitals, your search rank, your business, and most important – your users? Keep scrolling for the latest trends and analysis.

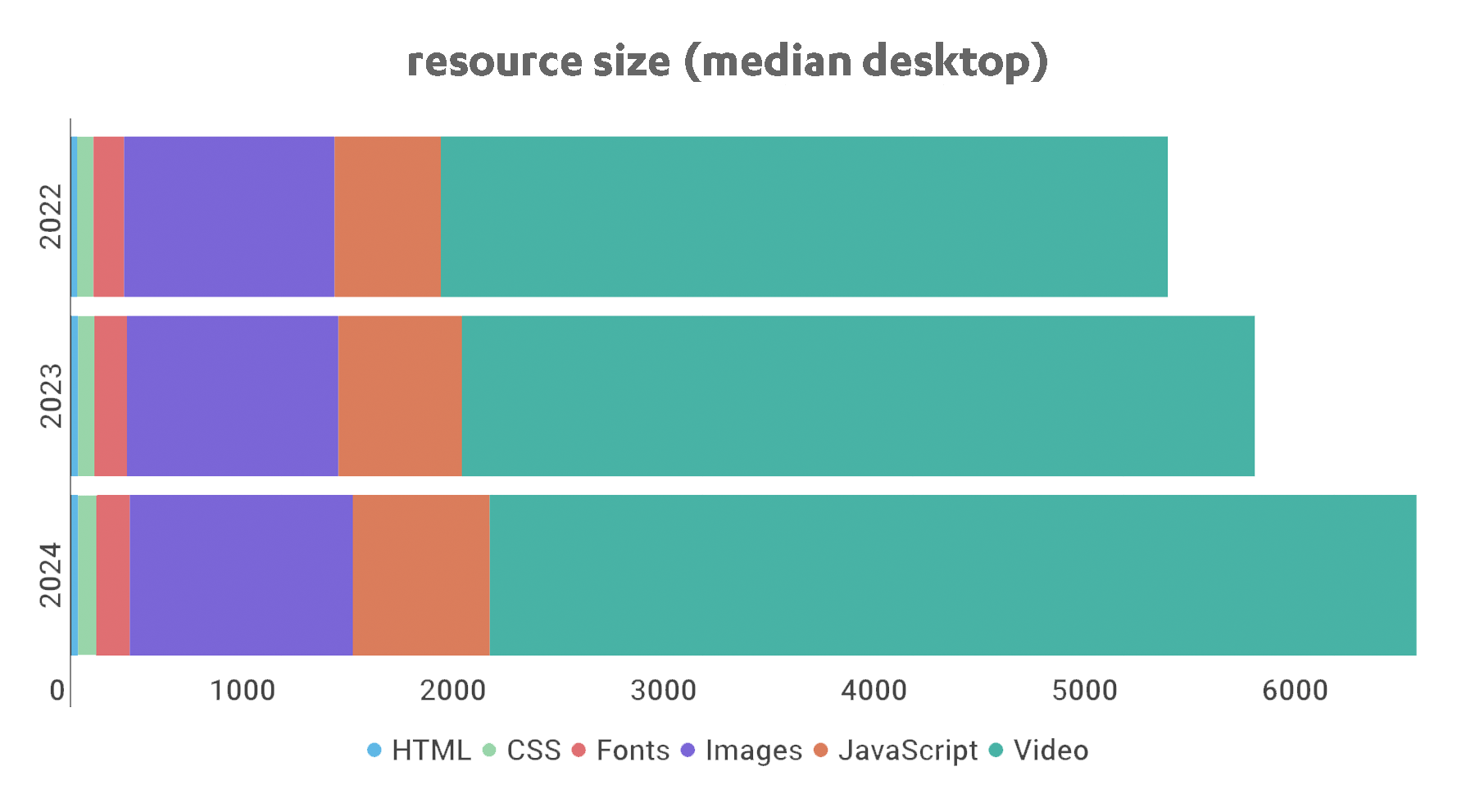

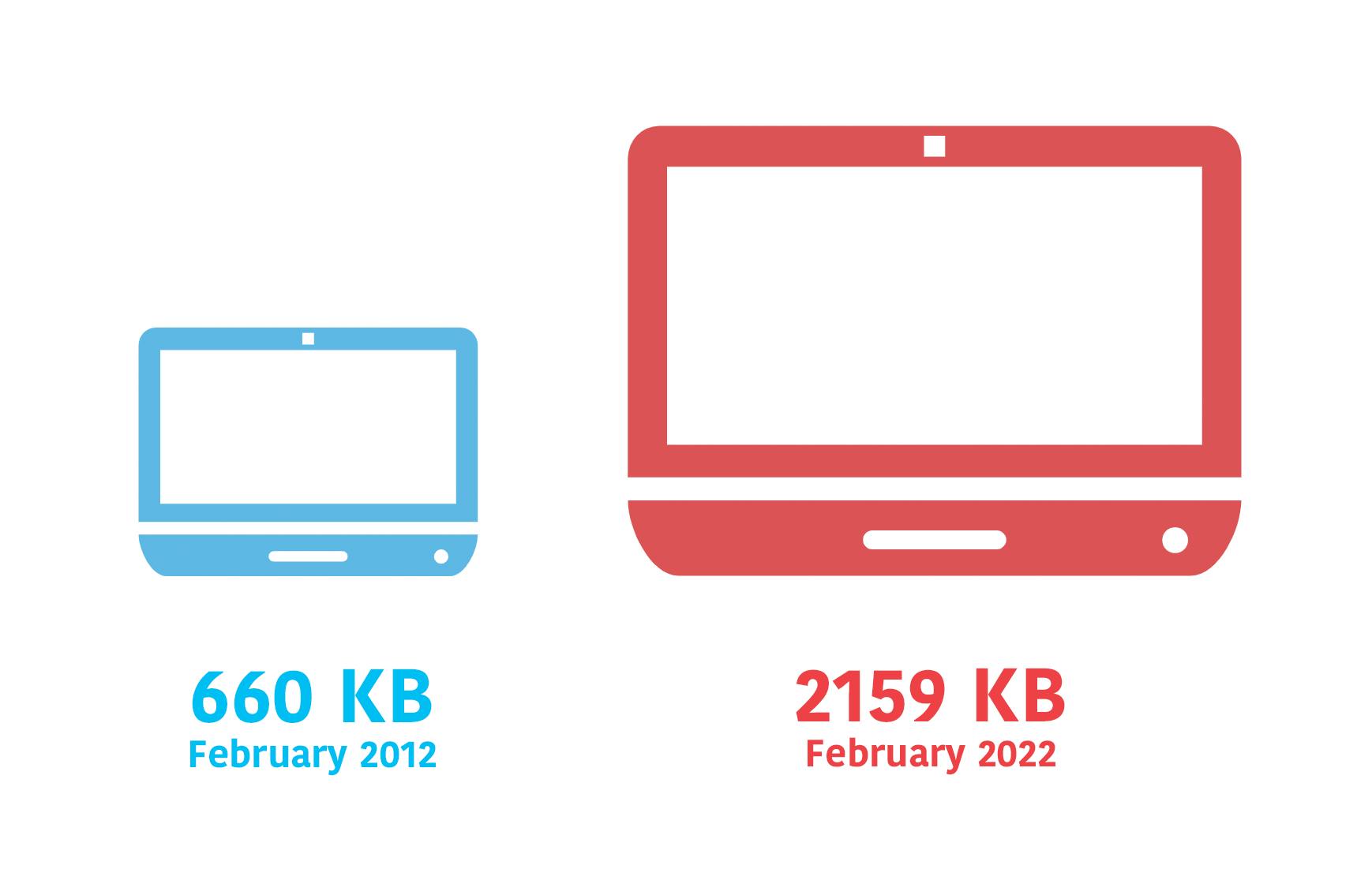

For almost fifteen years, I've been writing about page bloat, its impact on site speed, and ultimately how it affects your users and your business. You might think this topic would be exhausted by now, but every year I learn new things – beyond the overarching fact that pages keep getting bigger and more complex, as you can see in this chart, using data from the HTTP Archive:

In this post, we'll cover:

- How much pages have grown over the past year

- How page bloat hurts your business and – at the heart of everything – your users

- How page bloat affects Google's Core Web Vitals (and therefore SEO)

- Whether it's possible to have large pages that still deliver a good user experience

- Page size targets

- How to track page size and complexity

- How to fight regressions

2024 Holiday Readiness Checklist (Page Speed Edition!)

Delivering a great user experience throughout the holiday season is a marathon, not a sprint. Here are ten things you can do to make sure your site is fast and available every day, not just Black Friday.

Your design and development teams are working hard to attract users and turn browsers into buyers, with strategies like:

- High-resolution images and videos

- Geo-targeted campaigns and content

- Third-party tags for audience analytics and retargeting

However, all those strategies can take a toll on the speed and user experience of your pages – and each carries the risk of introducing single points of failure (SPoFs).

Below we've curated ten steps for making your users happy throughout the holidays (and beyond). If you're scrambling to optimize your site before Black Friday, you still have time to implement some or all of these best practices. And if you're already close to being ready for your holiday code freeze, you can use this as a checklist to validate that you've ticked all the boxes on your performance to-do list.

Hello INP! Here's everything you need to know about the newest Core Web Vital

After years of development and testing, Google has added Interaction to Next Paint (INP) to its trifecta of Core Web Vitals – the performance metrics that are a key ingredient in its search ranking algorithm. INP replaces First Input Delay (FID) as the Vitals responsiveness metric.

Not sure what INP means or why it matters? No worries – that's what this post is for. :)

- What is INP?

- Why has it replaced First Input Delay?

- How does INP correlate with user behaviour metrics, such as conversion rate?

- What you need to know about INP on mobile devices

- How to debug and optimize INP

And at the bottom of this post, we'll wrap things up with some inspiring case studies from companies that have found that improving INP has improved sales, pageviews, and bounce rate.

Let's dive in!

The psychology of site speed and human happiness

In the fourteen years that I've been working in the web performance industry, I've done a LOT of research, writing, and speaking about the psychology of page speed – in other words, why we crave fast, seamless online experiences. In fact, the entire first chapter of my book, Time Is Money (reprinted here courtesy of the good folks at O'Reilly), is dedicated to the subject.

I recently shared some of my favourite research at Beyond Tellerrand (video here) and thought it would be fun to round it up in a post. Here we're going to cover:

- Why time is a crucial (and often neglected) usability factor

- How we perceive wait times

- Why our memory is unreliable

- How the end of an experience has a disproportionate effect on our perception

- How fast we expect pages to be (and why)

- "Flow" and what it means in terms of how we use the web

- How delays hurt our productivity

- What we can learn from measuring "web stress"

- How slowness affects our entire perception of a brand

There's a lot of fascinating material to cover, so let's get started!

Mobile INP performance: The elephant in the room

Earlier this year, when Google announced that Interaction to Next Paint (INP) will replace First Input Delay (FID) as the responsiveness metric in Core Web Vitals in *gulp* March of 2024, we had a lot to say about it. (TLDR: FID doesn't correlate with real user behavior, so we don't endorse it as a meaningful metric.)

Our stance hasn't changed much since then. For the most part, everyone agrees the transition from FID to INP is a good thing. INP certainly seems to be capturing interaction issues that we see in the field.

However, after several months of discussing the impending change and getting a better look at INP issues in the wild, it's hard to ignore the fact that mobile stands out as the biggest INP offender by a wide margin. This doesn't get talked about as much as it should, so in this post we'll explore:

- The gap between "good" INP for desktop vs mobile

- Working theories as to why mobile INP is so much poorer than desktop INP

- Correlating INP with user behavior and business metrics (like conversion rate)

- How you can track and improve INP for your pages

Does Interaction to Next Paint actually correlate to user behavior?

Earlier this year, Google announced that Interaction to Next Paint (INP) is no longer an experimental metric. INP will replace First Input Delay (FID) as a Core Web Vital in March of 2024.

Now that INP has arrived to dethrone FID as the responsiveness metric in Core Web Vitals, we've turned our eye to scrutinizing its effectiveness. In this post, we'll look at real-world data and attempt to answer: What correlation – if any – does INP have with actual user behavior and business metrics?

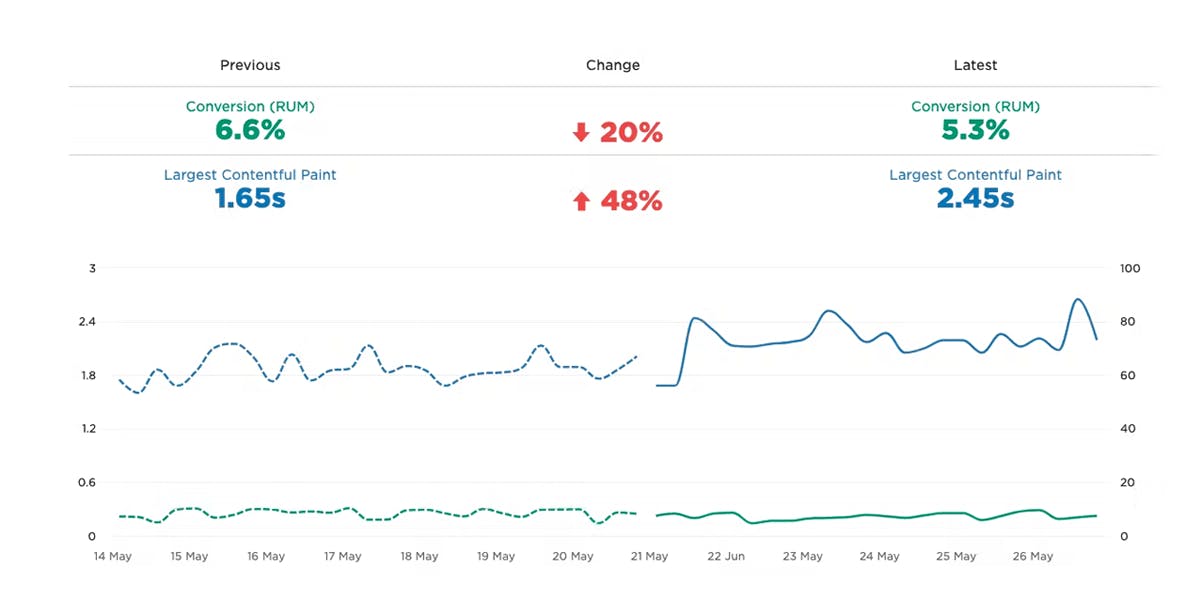

Exploring performance and conversion rates just got easier

Demonstrating the impact of performance on your users – and on your business – is one of the best ways to get your company to care about the speed of your site.

Tracking goal-based metrics like conversion rate alongside performance data can give you richer and more compelling insights into how the performance of your site affects your users. This concept is not new by any means. In 2010, the Performance and Reliability team I was fortunate enough to lead at Walmartlabs shared our findings around the impact of front-end times on conversion rates. (This study and a number of other case studies tracked over the years can be found at WPOstats.)

Setting up conversion tracking in SpeedCurve RUM is fairly simple and definitely worthwhile. This post covers:

- What is a conversion?

- How to track conversions in SpeedCurve

- Using conversion data with performance data for maximum benefit

- Conversion tracking and user privacy

What is page bloat? And how is it hurting your business, your search rank, and your users?

For more than ten years, I've been writing about page bloat, its impact on site speed, and ultimately how it affects your users and your business. You might think that this topic would be played out by now, but every year I learn new things – beyond the overarching fact that pages keep getting bigger and more complex, as you can see in this chart, using data from the HTTP Archive.

In this post, we'll cover:

- How much pages have grown over the past year

- How page bloat hurts your business and – at the heart of everything – your users

- How page bloat affects Google's Core Web Vitals (and therefore SEO)

- Whether it's possible to have large pages that still deliver a good user experience

- Page size targets

- How to track page size and complexity

- How to fight regressions

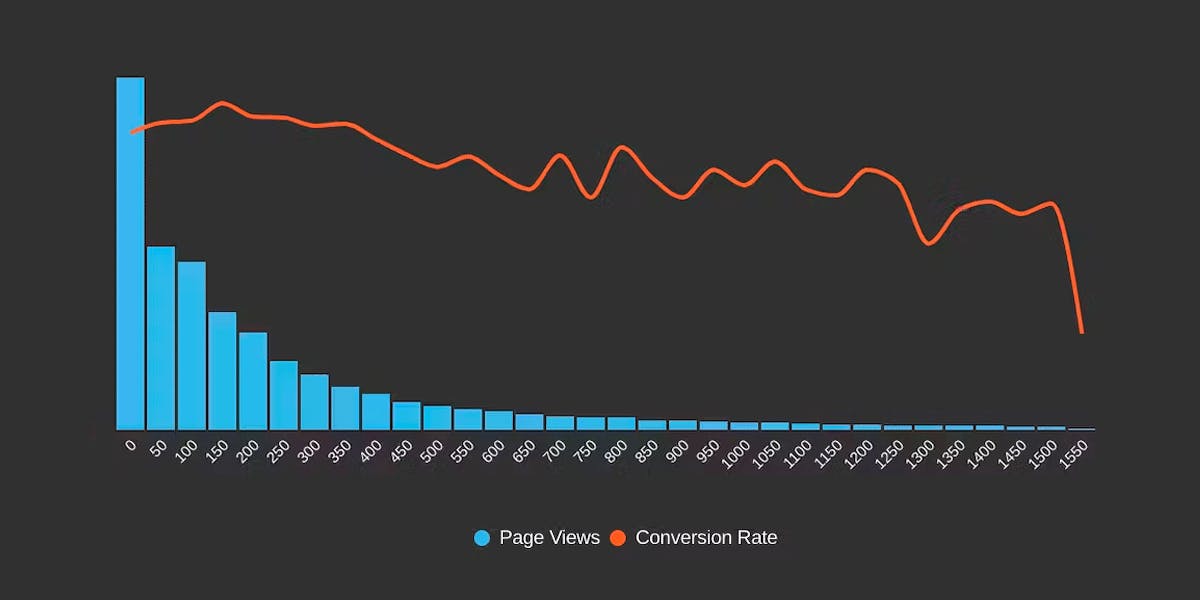

Why you need to know your site's performance plateau (and how to find it)

"I made my pages faster, but my business and user engagement metrics didn't change. WHY???"

"How do I know how fast my pages should be?"

"How can I demonstrate the business value of performance to people in my organization?"

If you've ever asked yourself any of these questions, then you could find the answers in identifying and understanding the performance plateau for your site.

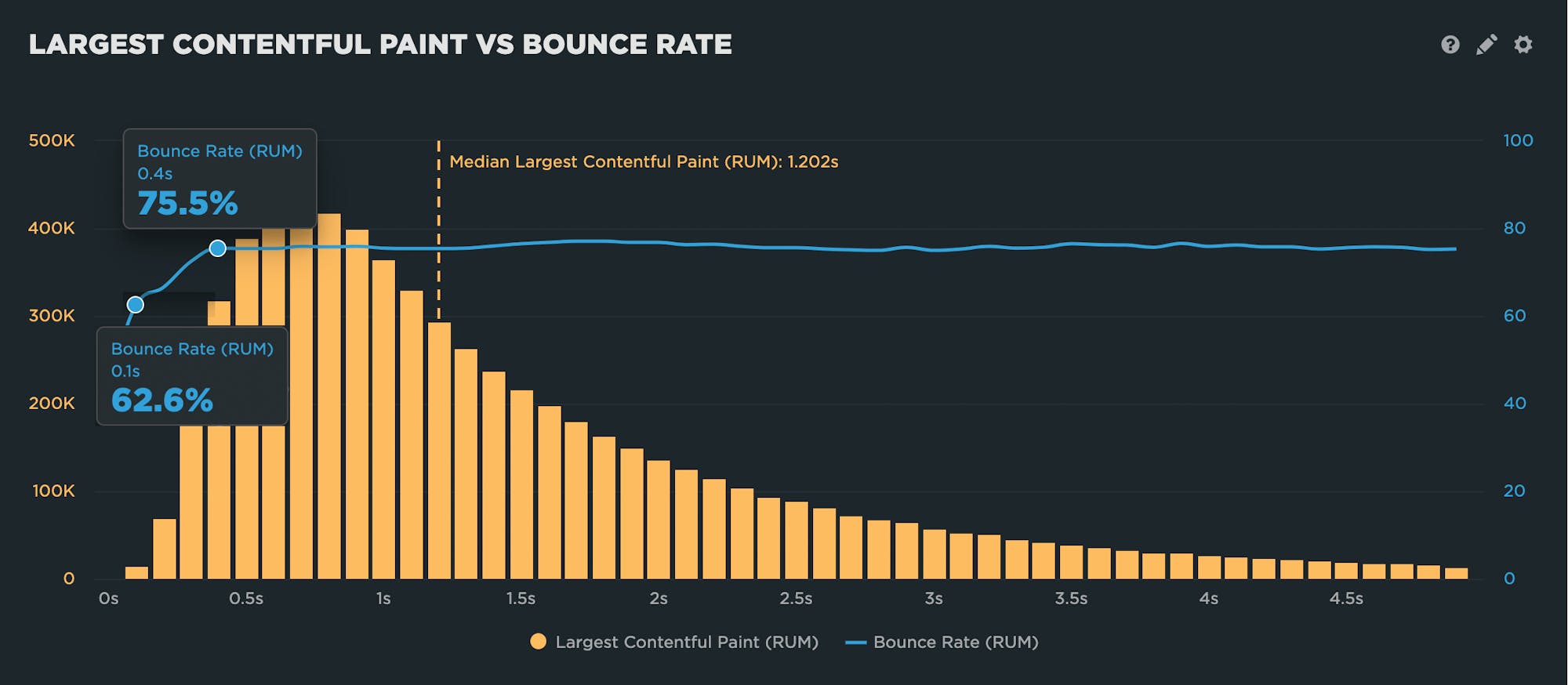

What is the "performance plateau"?

The performance plateau is the point at which changes to your website’s rendering metrics (such as Start Render and Largest Contentful Paint) cease to matter because you’ve bottomed out in terms of business and user engagement metrics.

In other words, if your performance metrics are on the performance plateau, making them a couple of seconds faster probably won't help your business.

The concept of the performance plateau isn't new. I first encountered it more than ten years ago, when I was looking at data for a number of sites and noticed that – not only was there a correlation between performance metrics and business/engagement metrics – there was also a noticeable plateau in almost every correlation chart I looked at.

A few months ago someone asked me if I've done any recent investigation into the performance plateau, to see if the concept still holds true. When I realized how much time has passed since my initial research, I thought it would be fun to take a fresh look.

In this post, I'll show how to use your own data to find the plateau for your site, and then what to do with your new insights.
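One possible way to look for a plateau in your own data is sketched below in TypeScript. This is an assumption-laden illustration, not the method used in the post: it treats the conversion rate in the slowest bucket as the floor, then walks back toward faster buckets while the rate stays within a tolerance of that floor. The `Point` shape, the function name, and the default tolerance of 0.002 (0.2 percentage points) are all hypothetical.

```typescript
// One point on a correlation chart: a metric bucket and its conversion rate.
// The shape is an assumption for illustration.
interface Point {
  metricMs: number;       // e.g. the Start Render or LCP bucket, in milliseconds
  conversionRate: number; // between 0 and 1
}

// Returns the metric value at which the plateau begins, or null when the
// conversion rate never levels off across at least two buckets.
function findPlateauStart(points: Point[], tolerance: number = 0.002): number | null {
  if (points.length < 2) {
    return null;
  }
  // Work on a sorted copy so the caller's array is left untouched.
  const sorted = points.slice().sort((a, b) => a.metricMs - b.metricMs);
  const last = sorted.length - 1;
  // Treat the slowest bucket as the level the business metric bottoms out at.
  const floor = sorted[last].conversionRate;
  let i = last;
  // Walk toward faster buckets while the rate stays close to the floor.
  while (i > 0 && Math.abs(sorted[i - 1].conversionRate - floor) <= tolerance) {
    i -= 1;
  }
  return i < last ? sorted[i].metricMs : null;
}
```

If the function returns 3000, for example, the data suggests that pages slower than roughly three seconds all convert at about the same low rate, so the improvements that matter are the ones that move page views to the fast side of that point. Real data is noisy, so the tolerance and bucket width usually need tuning, and sparse buckets at the slow end can distort the floor.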

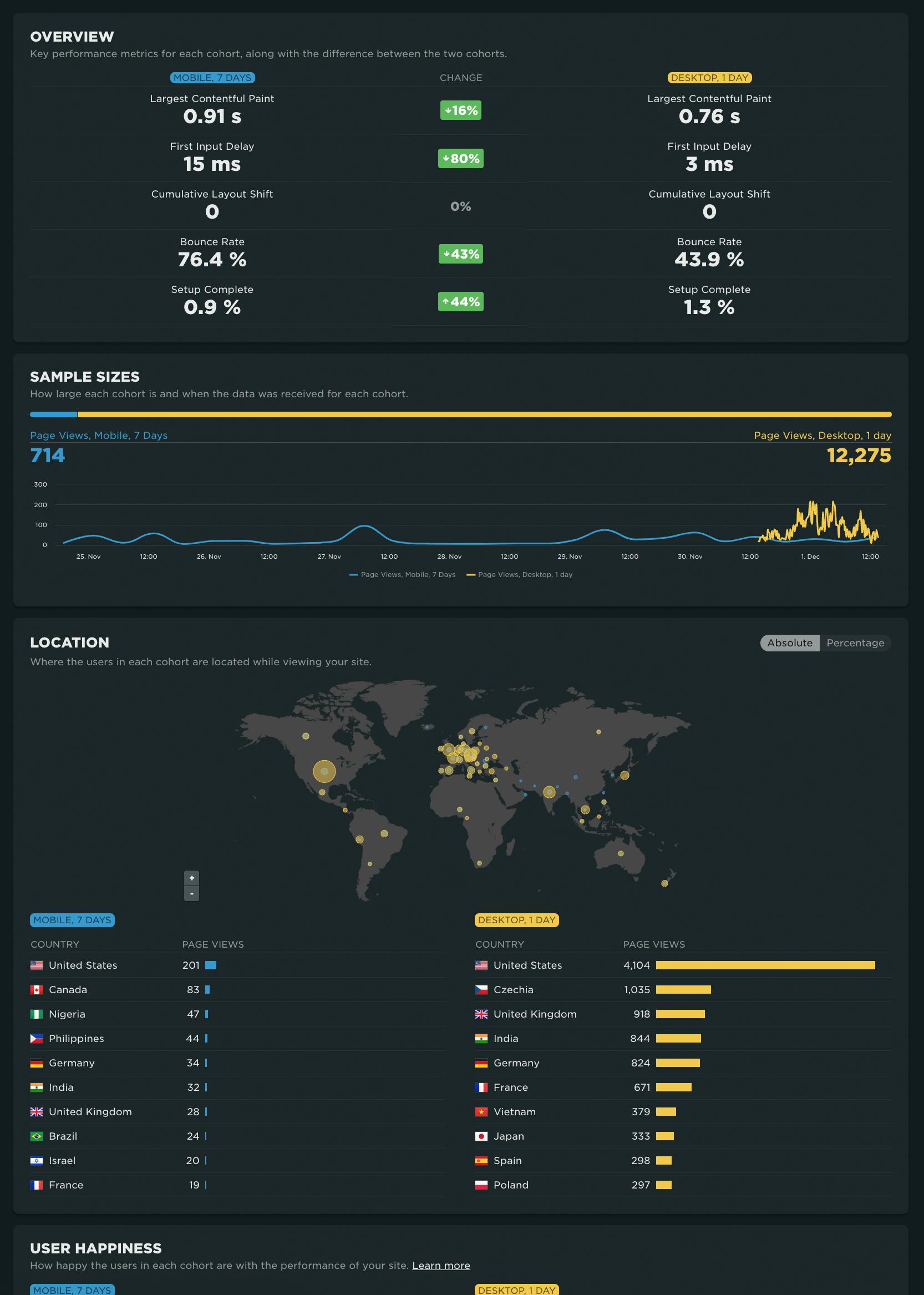

NEW! RUM Compare dashboard

Exploring real user (RUM) data can be a hugely enlightening process. It uncovers things about your users and their behavior that you might never have suspected. That said, it's not uncommon to spend precious time peeling back the layers of the onion, only to find false positives or uncertainty in all that data.

At SpeedCurve, we believe a big part of our job is making your job easier. This was a major driver behind the Synthetic Compare dashboard we released last year, which so many of you have given us great feedback on.

As you may have guessed, since then we've been hard at work coming up with the right way to explore and compare your RUM datasets using a similar design pattern. Today, we are thrilled to announce your new RUM Compare dashboard!

With your RUM Compare dashboard, you can easily generate side-by-side comparisons for any two cohorts of data. Some of the many reasons you might want to do this include:

- Improve Core Web Vitals by identifying the tradeoffs between pages that have different layout and construction

- Triage a performance regression related to the latest change or deployment to your site by looking at a before/after comparison

- Explore and compare different out-of-the-box cohorts, such as device types, geographies, page labels, and more

- Analyze A/B tests or experiments to understand which had the most impact on user behavior, as well as performance

- Optimize your funnel by understanding differences between users that convert or bounce from your site and users who don't

- Evaluate CDN performance by exploring the impact of time-of-day traffic patterns

Let's take a tour...

Element Timing: One true metric to rule them all?

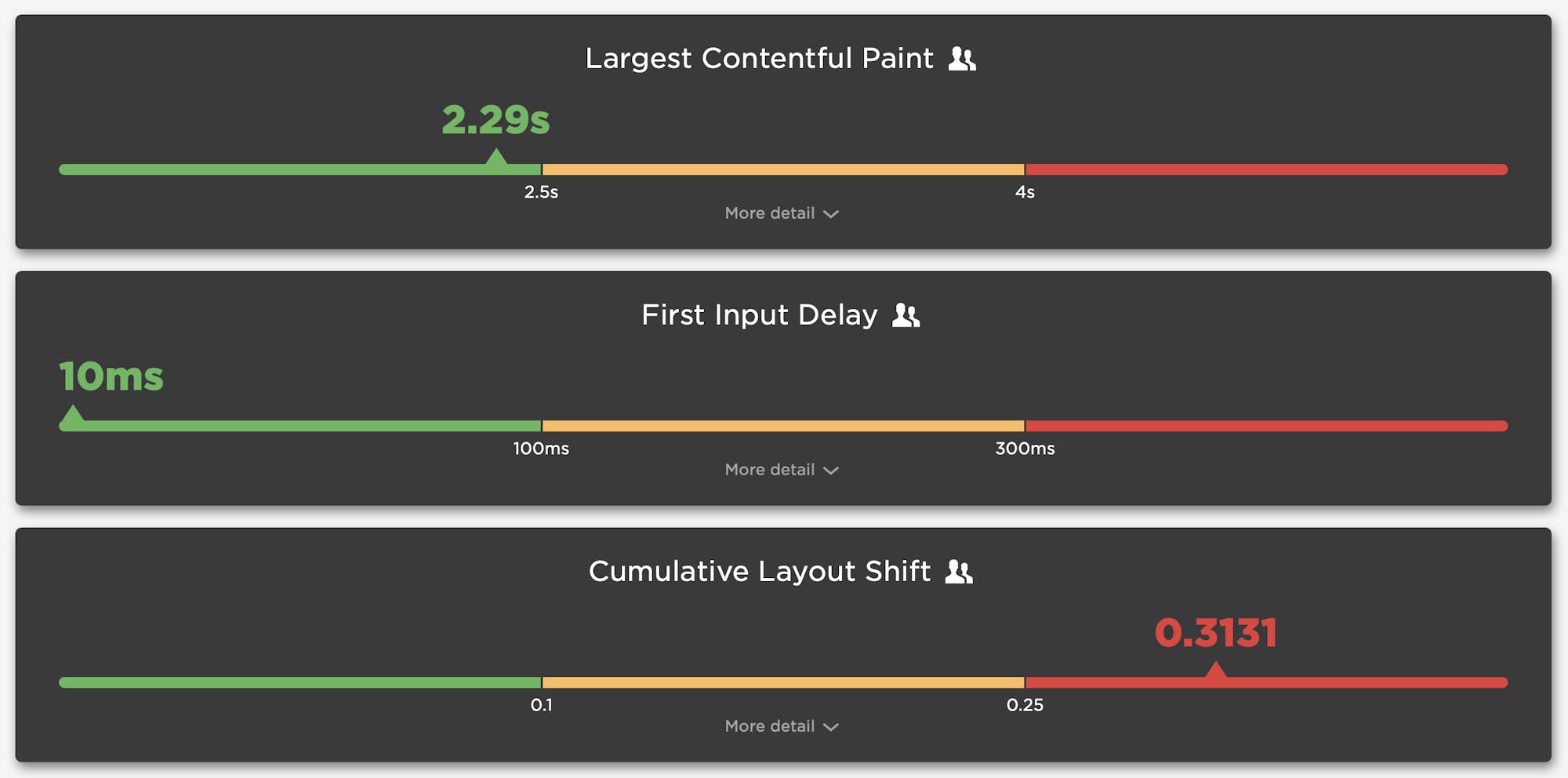

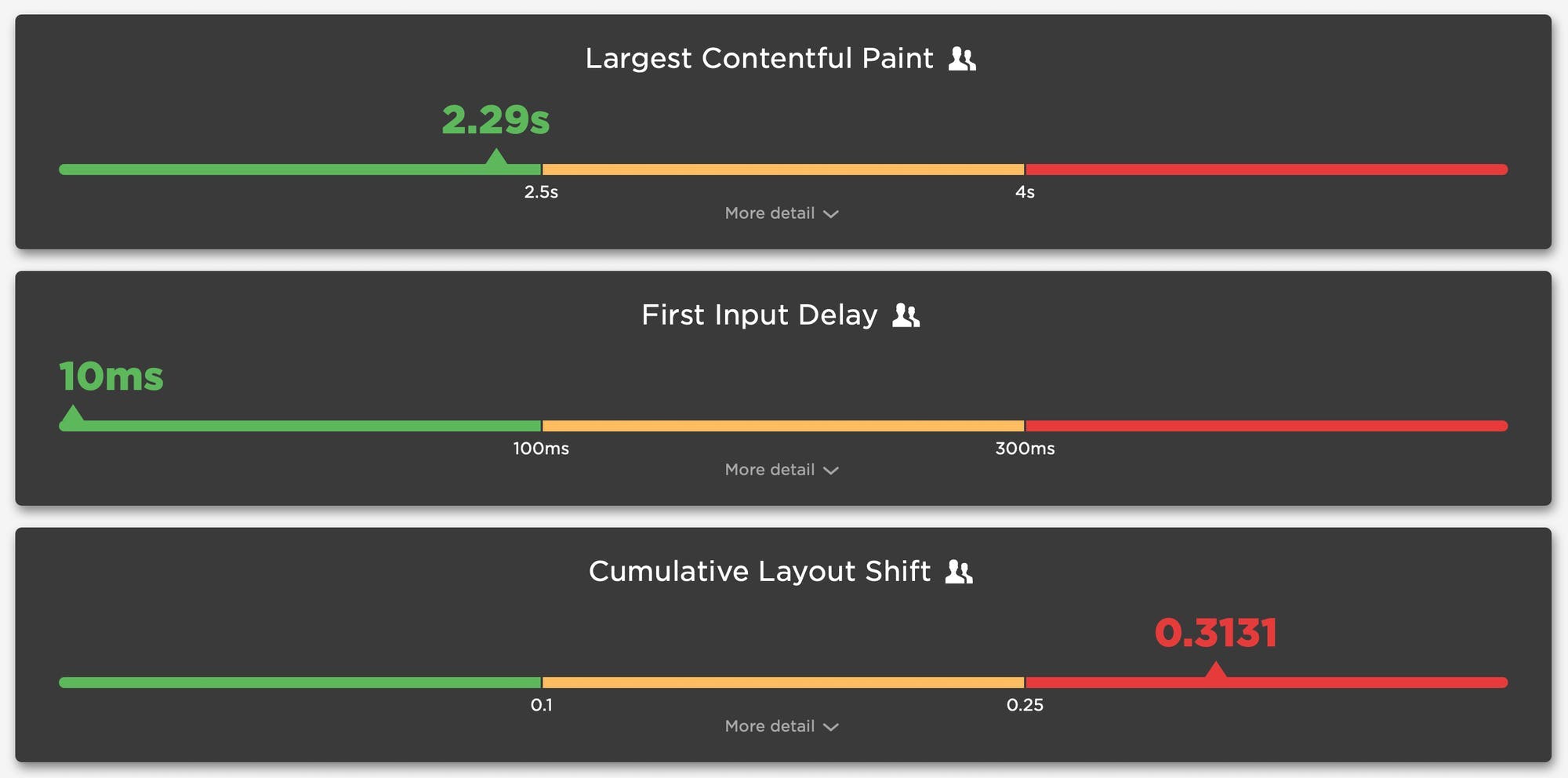

One of the great things about Google's Core Web Vitals is that they provide a standard way to measure our visitors’ experience. Core Web Vitals can answer questions like:

- When was the largest element displayed? Largest Contentful Paint (LCP) measures when the largest visual element (such as an image, video, or block of text) finishes rendering.

- How much did the content move around as it loads? Cumulative Layout Shift (CLS) measures the visual stability of a page.

- How responsive was the page to visitors' actions? First Input Delay (FID) measures how quickly a page responds to a user interaction, such as a click/tap or keypress.

Sensible defaults, such as Core Web Vitals, are a good start, but one pitfall of standard measures is that they can miss what’s actually most important.

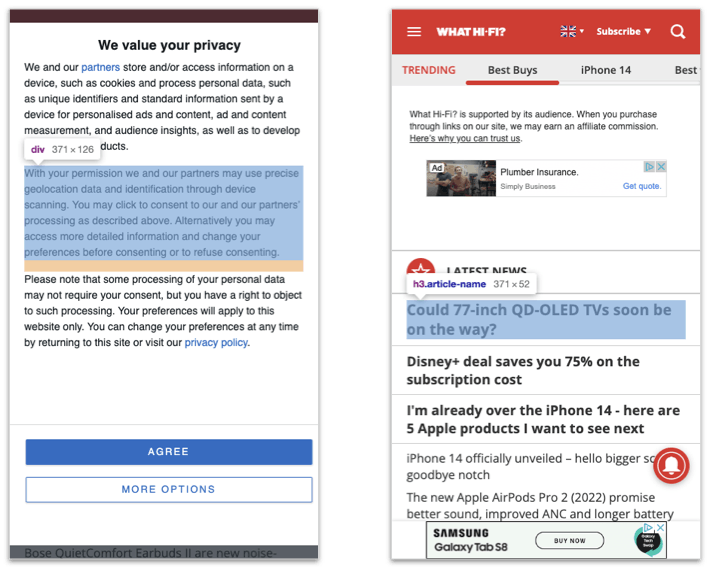

The (potential) problems with Largest Contentful Paint

Largest Contentful Paint (LCP) makes the assumption that the largest visible element is the most important content from the visitors’ perspective; however, we don’t have a choice about which element it measures. LCP may not be measuring the most appropriate – or even the same – element for each page view.
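To check which element LCP is actually measuring on a given page view, you can log each LCP candidate in the browser. The TypeScript sketch below uses the standard `PerformanceObserver` API with the `largest-contentful-paint` entry type. The `LcpEntryLike` interface and the `describeLcp` helper are hypothetical names introduced for illustration, and the entry shape is declared locally so the sketch does not depend on a particular version of the DOM typings.

```typescript
// Minimal local description of a largest-contentful-paint entry
// (an assumption that covers only the fields used here).
interface LcpEntryLike {
  startTime: number; // when the candidate rendered, in ms
  size: number;      // rendered area of the candidate
  element?: { tagName: string; id: string } | null;
}

// Turn an LCP candidate into a short label you can log or send to your RUM tool.
function describeLcp(entry: LcpEntryLike): string {
  const el = entry.element;
  // The element can be null if it was removed from the DOM after rendering.
  const label = el
    ? el.tagName.toLowerCase() + (el.id ? "#" + el.id : "")
    : "(element removed)";
  return label + " at " + Math.round(entry.startTime) + "ms";
}

// Browser only: report every LCP candidate as the page loads.
// `any` is used so the sketch also compiles without the DOM library.
const root = globalThis as any;
if (typeof root.document !== "undefined" && typeof root.PerformanceObserver !== "undefined") {
  const observer = new root.PerformanceObserver(
    (list: { getEntries(): LcpEntryLike[] }) => {
      for (const entry of list.getEntries()) {
        console.log("LCP candidate:", describeLcp(entry));
      }
    }
  );
  // buffered: true replays candidates recorded before the observer was created.
  observer.observe({ type: "largest-contentful-paint", buffered: true });
}
```

The last candidate logged before the user first interacts with the page is the one reported as LCP. Comparing the logged labels across page views shows whether the metric is tracking the same element each time.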

The LCP element can vary for first-time vs repeat visitors

In the case of a first-time visitor, the largest element might be a consent banner. On subsequent visits to the same page, the largest element might be an image for a product or a photo that illustrates a news story.

The screenshots from What Hi-Fi? (a UK audio-visual magazine) illustrate this problem. When the consent banner is shown, one of its paragraphs is the LCP element. When the consent banner is not shown, an article title becomes the LCP element. In other words, the LCP timestamp varies depending on which of these two experiences the visitor had!

What Hi-Fi? with and without the consent banner visible


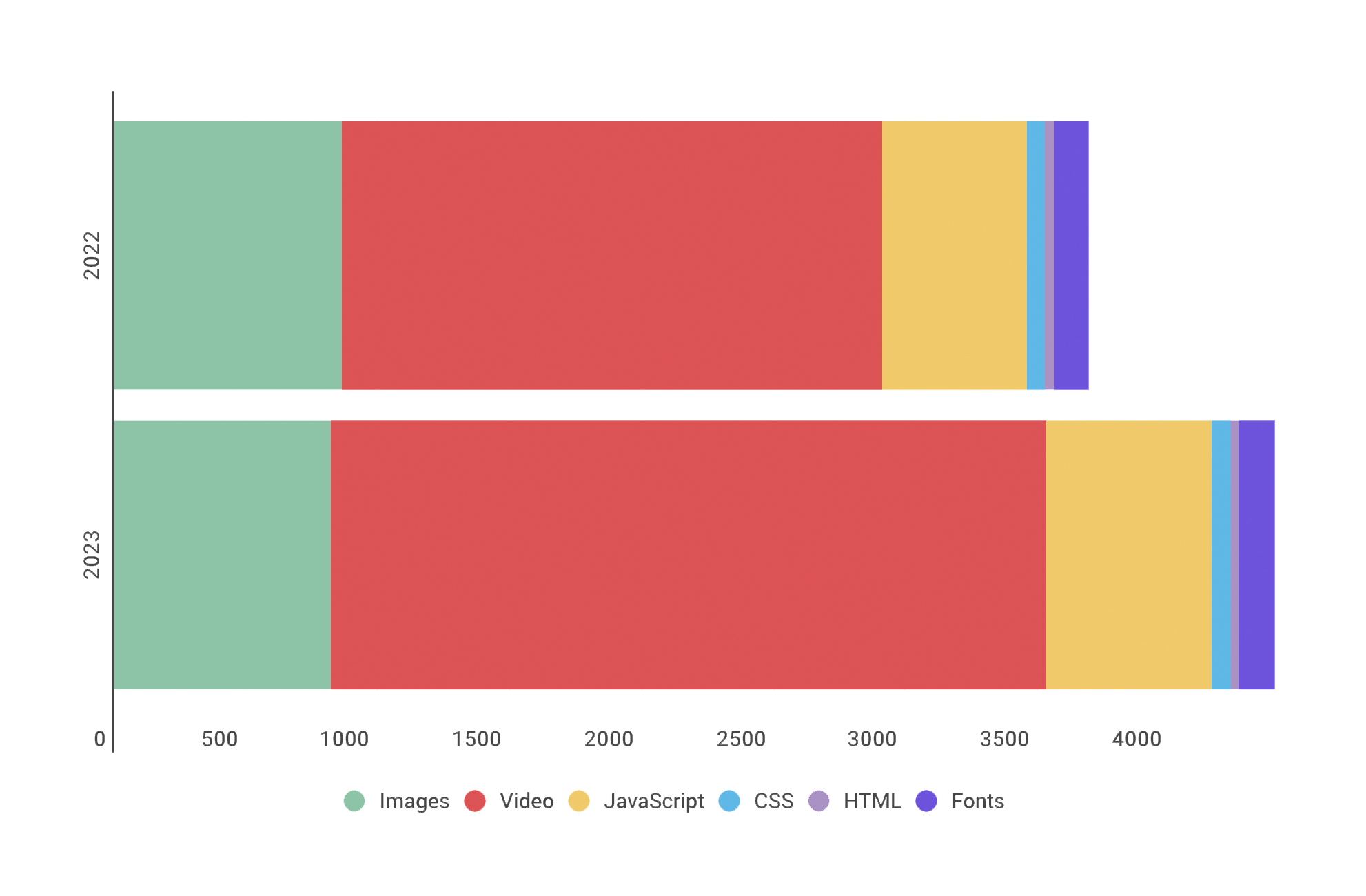

Ten years of page bloat: What have we learned?

(See our more recent page growth post: What is page bloat? And how is it hurting your business, search rank, and users?)

I've been writing about page size and complexity for years. If you've been working in the performance space for a while and you hear me start to talk about page growth, I'd forgive you if you started running away. ;)

But pages keep getting bigger and more complex year over year – and this increasing size and complexity is not fully mitigated by faster devices and networks, or by our hard-working browsers. Clearly we need to keep talking about it. We need to understand how ever-growing pages work against us. And we need to have strategies in place to understand and manage our pages.

In this post, we'll cover:

- How big are pages today versus ten years ago?

- How does page bloat hurt your business?

- How does page bloat affect other metrics, such as Google's Core Web Vitals?

- Is it possible to have large pages that deliver a good user experience?

- What can we do to manage our pages and fight regression?

SEO and web performance: What to measure and how to optimize

Chances are, you're here because of Google's update to its search algorithm, which affects both desktop and mobile, and which includes Core Web Vitals as a ranking factor. You may also be here because you've heard about the most recent potential candidates for addition to Core Web Vitals, which were just announced at Chrome Dev Summit.

A few things are clear:

- Core Web Vitals, as a premise, are here to stay for a while.

- The metrics that comprise Web Vitals are still evolving.

- These metrics will (I think) always be in a state of evolution. That's a good thing. We need to do our best to stay up to date – not just with which metrics to track, but also with what they measure and why they're important.

If you're new to Core Web Vitals, this is a Google initiative that was launched in early 2020. Web Vitals is (currently) a set of three metrics – Largest Contentful Paint, First Input Delay, and Cumulative Layout Shift – that are intended to measure the loading, interactivity, and visual stability of a page.

When Google talks, people listen. I talk with a lot of companies and I can attest that, since Web Vitals were announced, they've shot to the top of many people's list of things to care about. But Google's prioritization of page speed in search ranking isn't new, even for mobile. As far back as 2013, Google announced that pages that load slowly on mobile devices would be penalized in mobile search.

Keep reading to find out:

- How much does web performance matter when it comes to SEO?

- Which performance metrics should you focus on for SEO?

- What can you do to make your pages faster for SEO purposes?

- What are some of the common issues that can hurt your Web Vitals?

- How can you track performance for SEO?

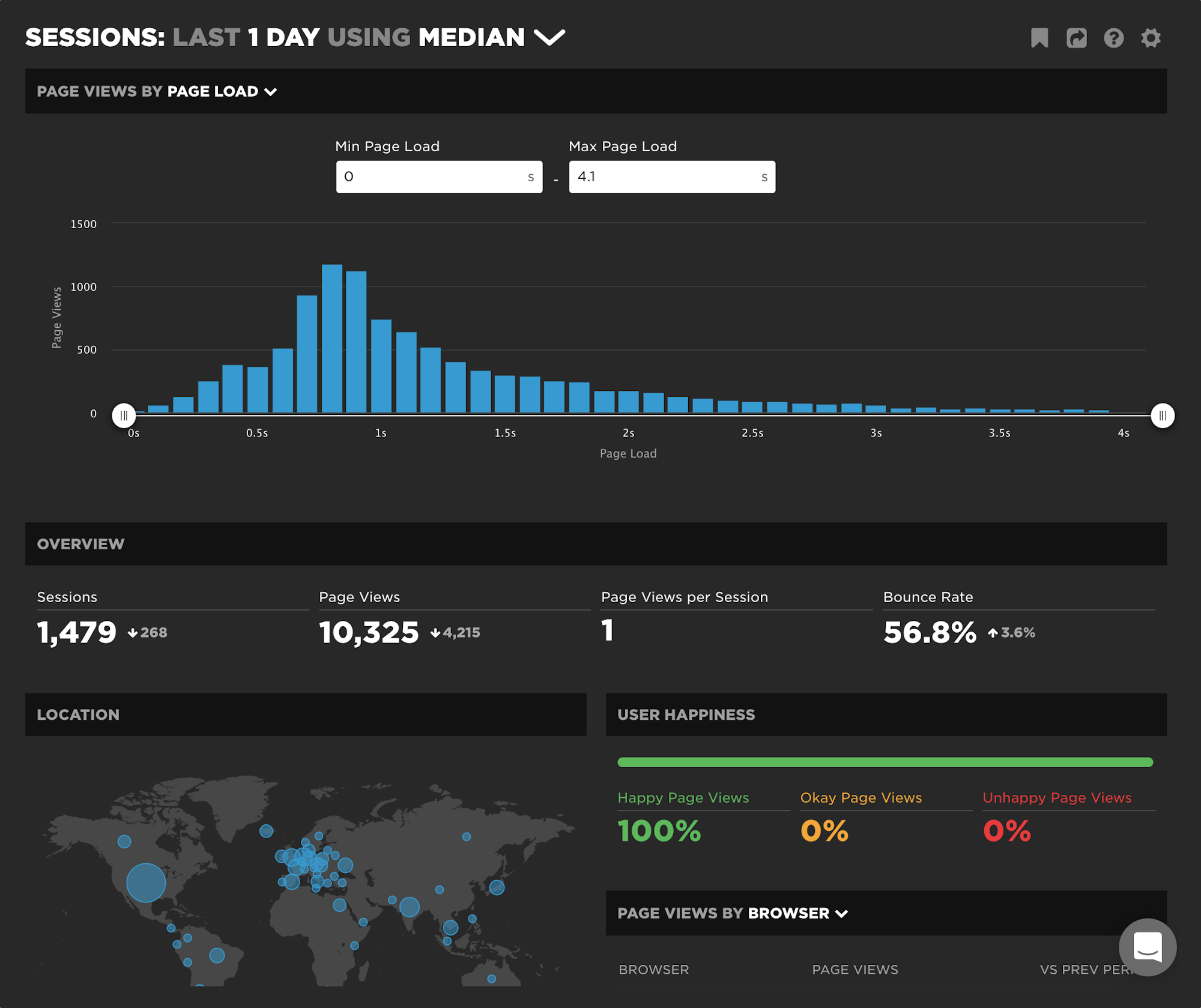

NEW: Exploring RUM sessions

If you want to understand how people actually experience your site, you need to monitor real users. The data we get from real user monitoring (RUM) is extremely useful when trying to get a grasp on performance. Not only does it serve as the source of truth for your most important budgets and KPIs, it helps us understand that performance is a broad distribution that encompasses many different cohorts of users.

While real user monitoring gives us the opportunity for unparalleled insight into user experience, the biggest challenge with RUM data is that there's so much of it. Navigating through all this data has typically been done by peeling back one layer of information at a time, and it often proves difficult to identify the root cause when we see a change:

"What happened here?"

"Did the last release cause a drop in performance?"

"How can I drill down from here to see what's going on?"

"Is the issue confined to a specific region? Browser? Page?"

Today we're excited to release a new capability – your RUM Sessions dashboard – which allows you to drill into a dataset and explore those sessions that occurred within a given span of time.

NEW: Lighthouse v8 support!

After Google's announcement about Lighthouse 8 this past month, we have updated our test agents. We've gotten a lot of questions about what has changed and the impact on your performance metrics, so here's a summary.

Web Performance for Product Managers

I love conversations about performance, and I'm fortunate enough to have them a lot. The audience varies. A lot of the time it’s a front-end developer or head of engineering, but more and more I’m finding myself in great conversations with product leaders. As great as these discussions can be, I often walk away feeling like there was a better way to streamline the conversation while still conveying my passion for bringing fellow PMs into the world of webperf. I hope this post can serve that purpose and cover a few of the fundamental areas of web performance that I’ve found to be most useful while honing the craft of product management.

So, whether you are a PM or not, if you're new to performance I've put together a few concepts and guidelines you can refer to in order to ramp up quickly. This post covers:

- What makes a page slow?

- How is performance measured?

- What do the different metrics mean?

- Understanding percentiles and how to use them

- How to communicate performance to different stakeholders

Let's get started...

New! Vitals Dashboard

Getting up to speed on Core Web Vitals seems to be at the top of everyone's to-do list these days. Just in time for the holidays, we are happy to bring you our new Vitals dashboard to help you get a huge jumpstart.

We love to visualize performance data (in case you haven't heard). We love it even more when we can be true to one of our biggest motivations at SpeedCurve: leveraging both RUM and Synthetic data to help you take action on what matters most.