Farewell FID... and hello Interaction to Next Paint!

Today at Google I/O 2023, it was announced that Interaction to Next Paint (INP) is no longer an experimental metric. INP will replace First Input Delay (FID) as a Core Web Vital in March of 2024.

It's been three years since the Core Web Vitals initiative was kicked off in May 2020. In that time, we've seen people's interest in performance dramatically increase, especially in the world of SEO. It's been hugely helpful to have a simple set of three metrics – focused on loading, interactivity, and visual stability – that everyone can understand and focus on.

During this time, SpeedCurve has stayed objective when looking at the CWV metrics. When it comes to new performance metrics, it's easy to jump on hype-fuelled bandwagons. While we definitely get excited about emerging metrics, we also approach each new metric with an analytical eye. For example, back in November 2020, we took a closer look at one of the Core Web Vitals, First Input Delay, and found that it was sort of 'meh' overall when it came to meaningfully correlating with actual user behavior.

Now that INP has arrived to dethrone FID as the responsiveness metric for Core Web Vitals, we've turned our eye to scrutinizing its effectiveness.

In this post, we'll take a closer look and attempt to answer:

- What is Interaction to Next Paint?

- How does INP compare to FID?

- What is a 'good' INP result?

- Will there be differences between INP collected in RUM vs. Chrome User Experience Report (CrUX)?

- What correlation does INP have with real user behavior?

- When should you start caring about INP?

- How can you see INP for your own site in SpeedCurve?

Onward!

What is Interaction to Next Paint?

Interaction to Next Paint (INP) is intended to measure how responsive a page is to user interaction(s). This is measured based on how quickly the page responds visually after a user interaction (i.e. when the page paints something in the browser's next frame after you interact with it). Because INP measures actual user interactions, it can only be monitored using a real user monitoring (RUM) tool.

For the purposes of INP, an interaction is considered any of the following:

- Mouse click

- Touchscreen tap

- Key press

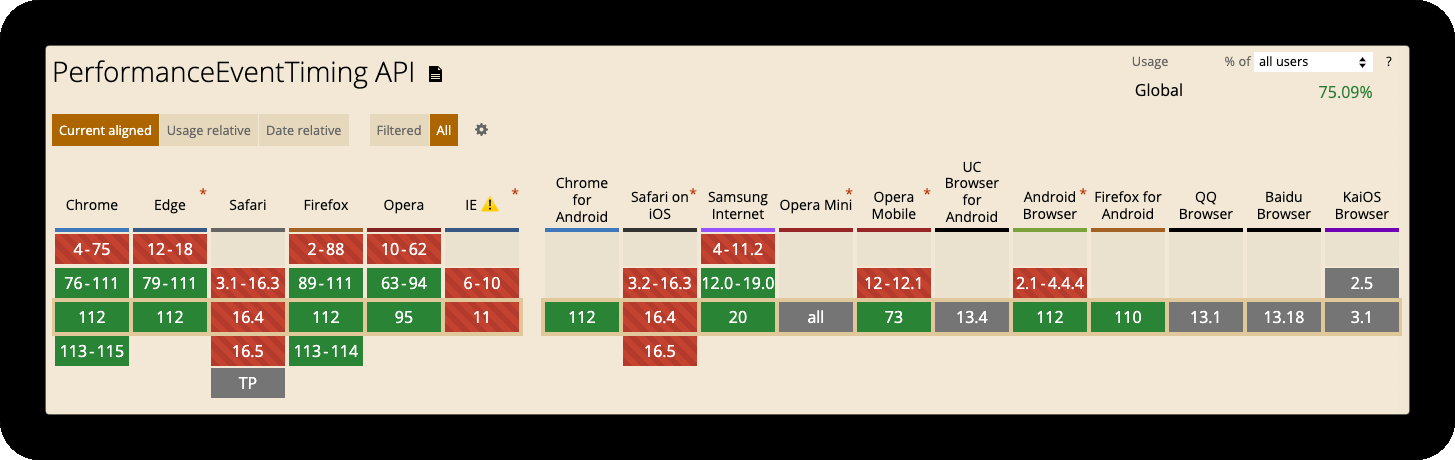

INP is measured using the Event Timing API, which as of today is supported in Chrome, Edge, Firefox, and Opera. Alas, there is no support in Safari.

For a more detailed explainer of INP – including a breakdown of what's in an interaction, how INP is calculated and more – see this post by Jeremy Wagner from the Google Chrome team.
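As a rough sketch of the calculation step, here is how a single page view's INP could be estimated from a list of interaction latencies. It assumes the definition as I understand it at the time of writing: the worst interaction is reported, except that one of the highest values is skipped for every 50 interactions on the page. The function name and the plain array of latencies are hypothetical; a real RUM script would build that list from Event Timing entries.

```typescript
// Hypothetical helper: one latency value (in ms) per user interaction.
function estimateInp(latenciesMs: number[]): number | undefined {
  // A page view with no interactions has no INP value at all.
  if (latenciesMs.length === 0) return undefined;

  // Sort a copy so the slowest interaction comes first.
  const sorted = [...latenciesMs].sort((a, b) => b - a);

  // Assumption: skip one of the worst interactions per 50 interactions,
  // so a single outlier on a very busy page does not define the metric.
  const skip = Math.min(sorted.length - 1, Math.floor(sorted.length / 50));
  return sorted[skip];
}

console.log(estimateInp([40, 320, 120])); // 320
```

For the small number of interactions most page views see, this simply returns the slowest one.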

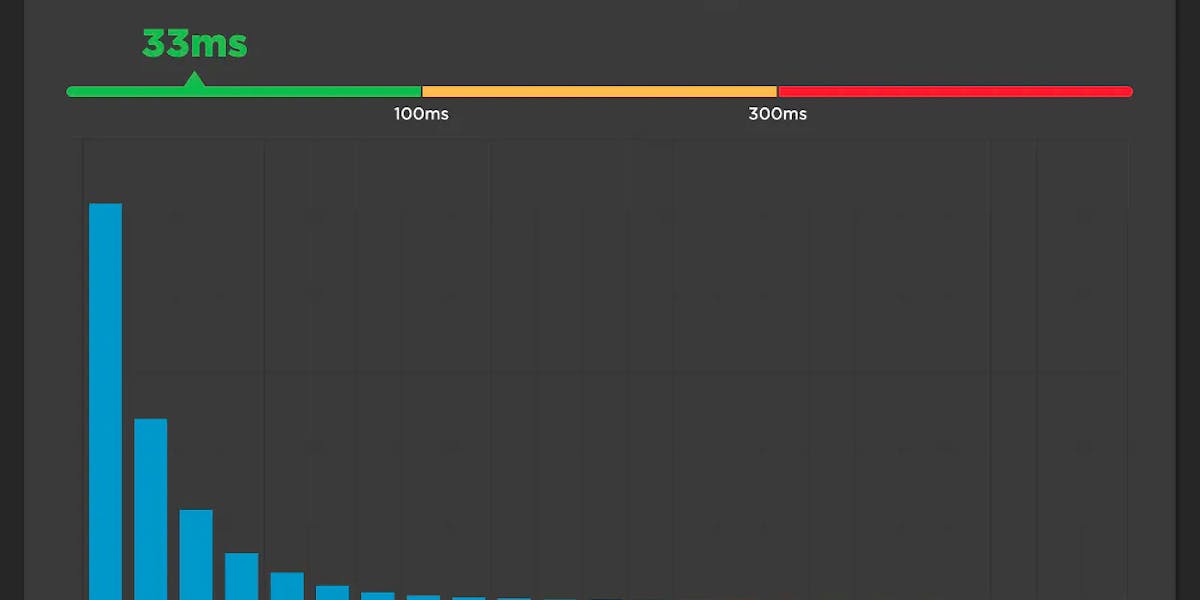

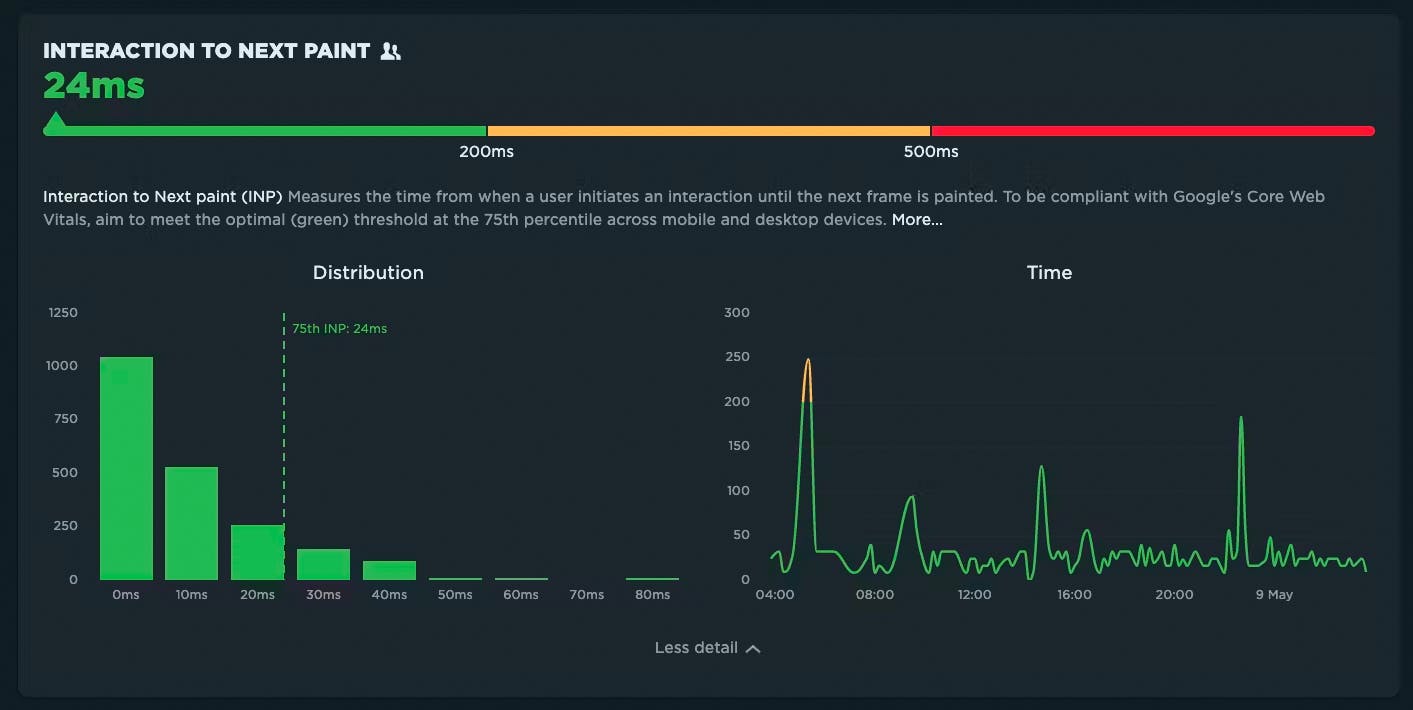

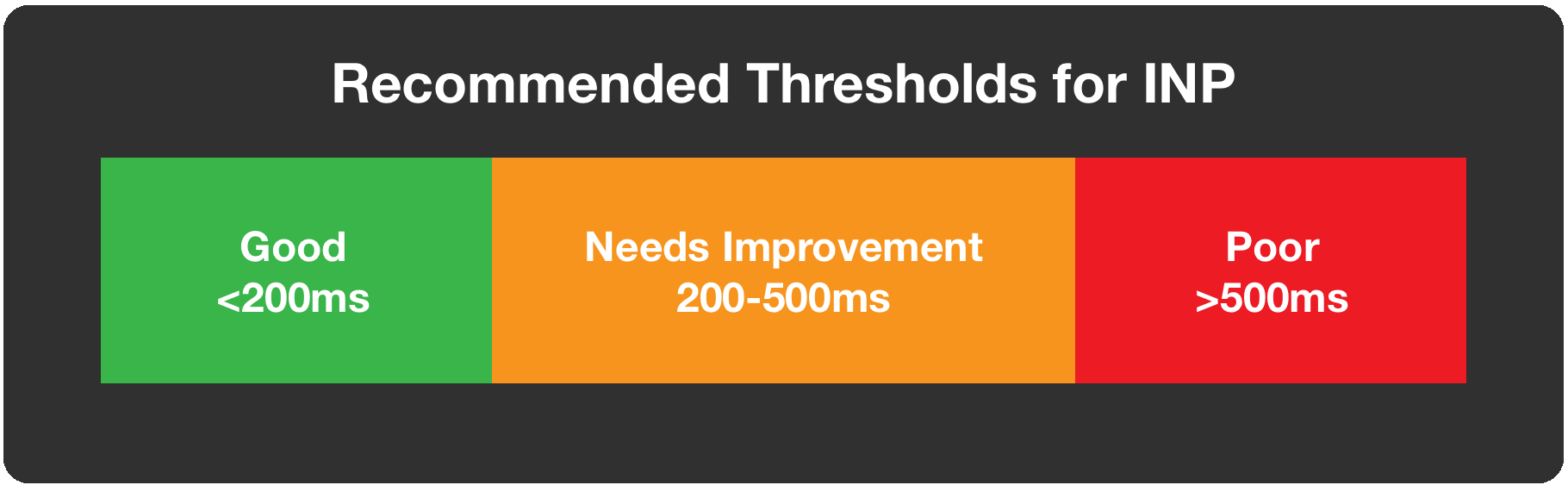

What is a 'good' INP number?

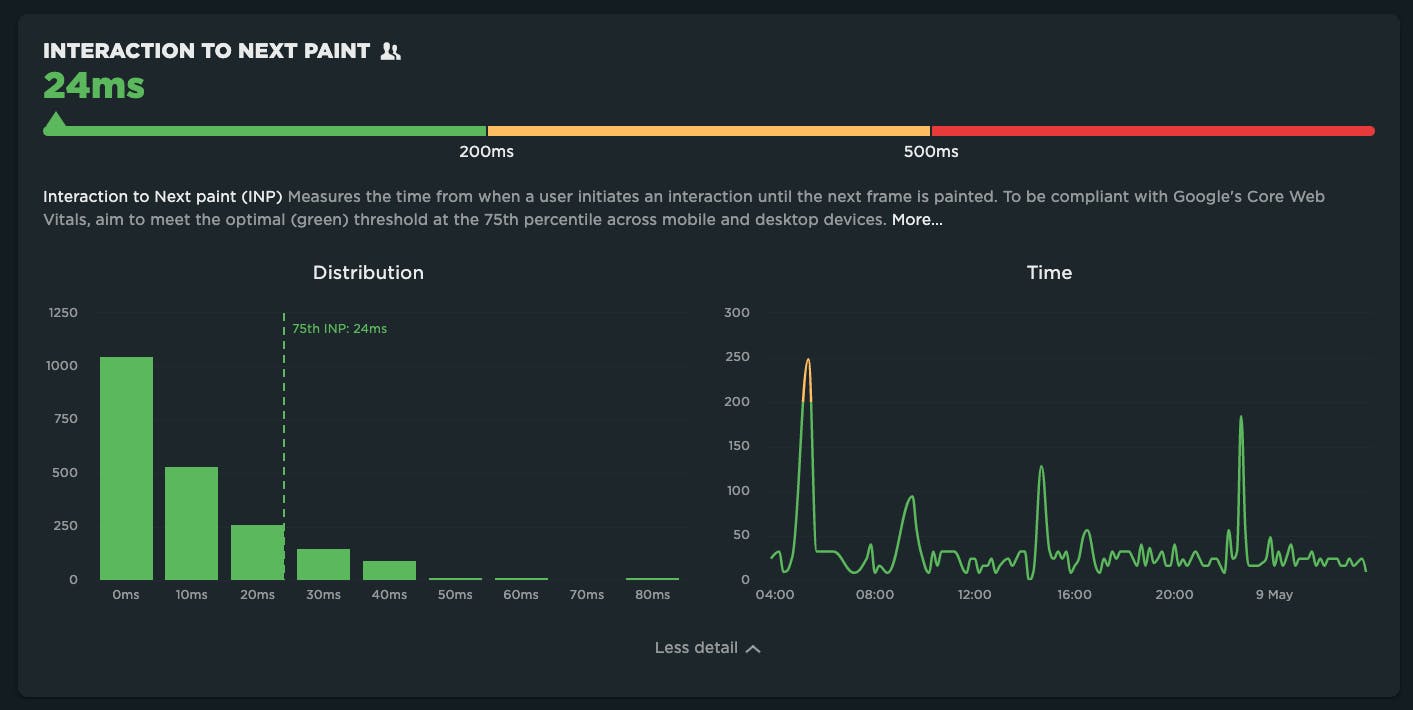

INP is a new metric, so the suggested thresholds from Google are subject to change. For now, those thresholds are as follows:

- Good – 200ms or less

- Needs improvement – Between 200ms and 500ms

- Poor – More than 500ms

Note that these thresholds are all based on RUM data at the 75th percentile.
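To make the thresholds concrete, here is a minimal sketch that takes a set of INP samples, finds the 75th percentile, and rates it. The function names are my own, the nearest-rank percentile method is just one reasonable choice, and treating exactly 200ms as "good" and exactly 500ms as "needs improvement" is an assumption about how the boundaries are handled.

```typescript
type InpRating = "good" | "needs improvement" | "poor";

// Nearest-rank percentile over a list of samples (p between 0 and 100).
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("no samples");
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// Rate a value against the thresholds listed above.
// Assumption: the boundary values 200 and 500 fall into the better bucket.
function rateInp(valueMs: number): InpRating {
  if (valueMs <= 200) return "good";
  if (valueMs <= 500) return "needs improvement";
  return "poor";
}

// Made-up samples, one INP value (ms) per page view.
const inpSamples = [80, 120, 150, 180, 210, 260, 400, 650];
const p75 = percentile(inpSamples, 75);
console.log(p75, rateInp(p75)); // 260 "needs improvement"
```

Note how the two slowest page views do not affect the result: the 75th percentile describes the experience most users get rather than the worst case.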

What can affect INP?

Most user interactions will take place after the page is initially loaded, so it's important to understand that the measurement is typically captured after most of your other metrics (e.g. FCP, LCP, and Load) have occurred. Oftentimes the page seems dormant at this point, but this is not always the case.

Things that can affect INP include:

- Long-running JavaScript event handlers

- Input delay due to Long Tasks blocking the main thread

- Poorly performing JavaScript frameworks

- Page complexity leading to presentation delay

For some ideas around optimizing INP, I've included some great resources at the bottom of this article.

How do INP numbers compare to FID?

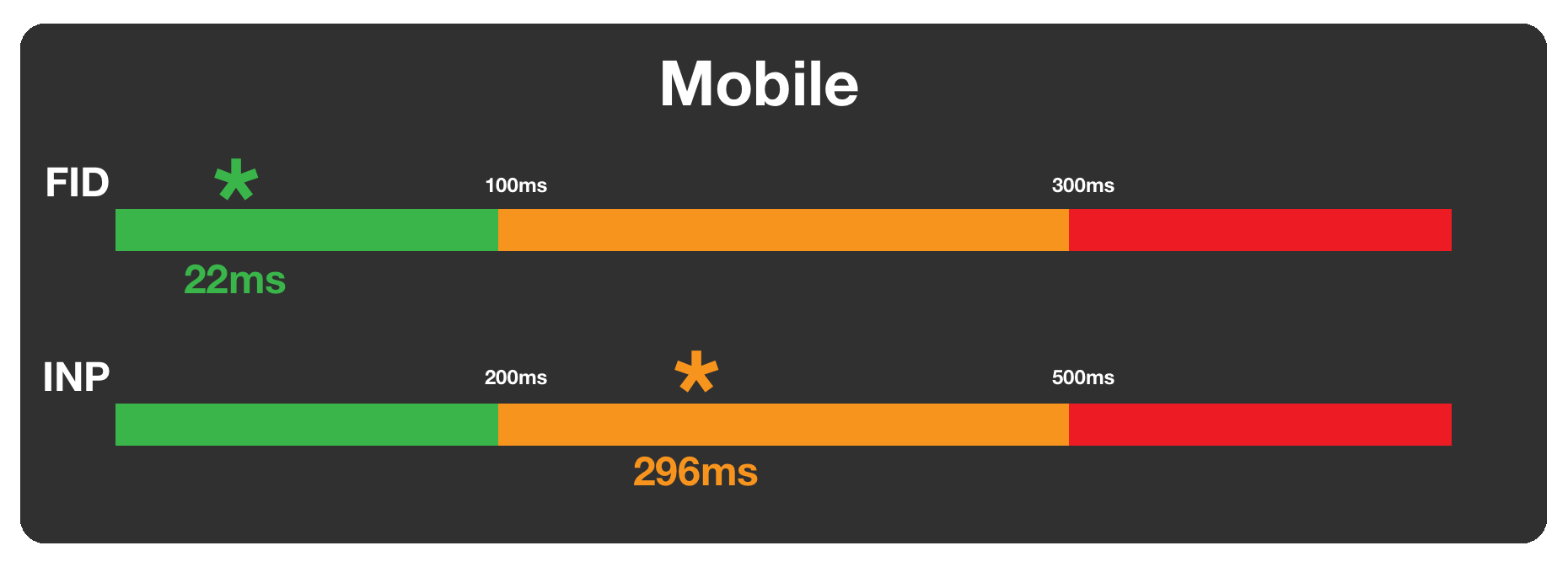

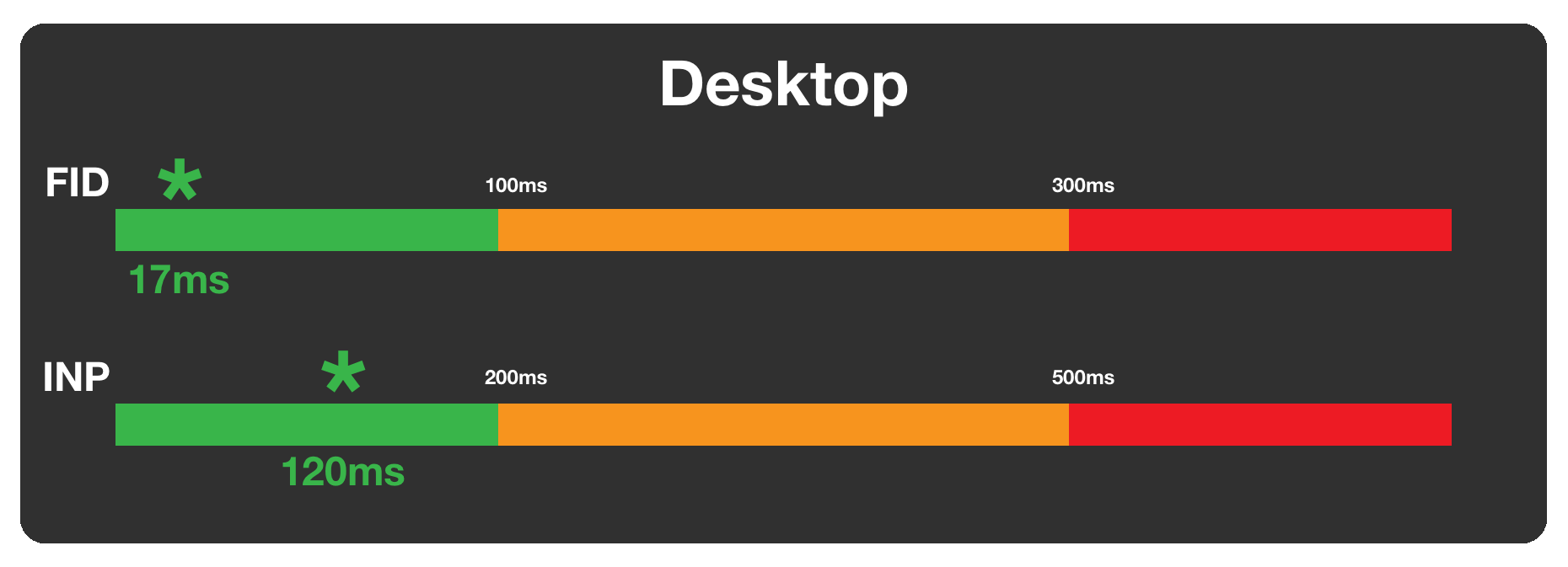

There are a few important things to understand about FID versus INP. Most importantly, INP includes more than just input delay. It also covers processing time (the time spent running event handlers) and presentation delay (the rendering and painting work that happens before the next frame appears). The charts in this section compare FID and INP at the 75th percentile on mobile and desktop, using RUM data collected by SpeedCurve.

INP values are much higher than FID values. That was expected, but it's still good to see it confirmed in the data. While desktop numbers look encouraging, mobile clearly needs attention. This isn't a big surprise considering the impact JavaScript has on lower-powered mobile devices.
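To illustrate why INP tends to come out higher than FID, here is a small sketch that splits one interaction into its three phases. The field names mirror those on an Event Timing entry (`startTime`, `processingStart`, `processingEnd`, `duration`), but the interface and function are hypothetical, and the sketch treats an interaction as a single event, whereas a real tap or click can involve several.

```typescript
// Minimal stand-in for the timing fields of an Event Timing entry (all in ms).
interface EventTimingLike {
  startTime: number;       // when the user interacted
  processingStart: number; // when event handlers began running
  processingEnd: number;   // when event handlers finished
  duration: number;        // from startTime to the next paint
}

interface InteractionPhases {
  inputDelay: number;        // what FID measures (for the first input only)
  processingTime: number;    // time spent in event handlers
  presentationDelay: number; // rendering and painting before the next frame
}

function breakDownInteraction(entry: EventTimingLike): InteractionPhases {
  const nextPaint = entry.startTime + entry.duration;
  return {
    inputDelay: entry.processingStart - entry.startTime,
    processingTime: entry.processingEnd - entry.processingStart,
    // Clamp at zero because reported durations are coarsely rounded.
    presentationDelay: Math.max(0, nextPaint - entry.processingEnd),
  };
}

// Made-up numbers for one interaction.
const phases = breakDownInteraction({
  startTime: 1000,
  processingStart: 1040,
  processingEnd: 1120,
  duration: 200,
});
console.log(phases); // inputDelay 40, processingTime 80, presentationDelay 80
```

In this example FID would only have seen the 40ms of input delay, while the full interaction took 200ms.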

What can I expect when comparing CrUX data to RUM?

There are some important caveats to understand when comparing INP measured from the Chrome User Experience Report (CrUX) to INP from RUM. While RUM and CrUX do their best to align, there are times when you may see differences between the two. Barry Pollard wrote a comprehensive post on this.

For INP specifically, there are a few gotchas to consider:

- For CrUX, INP can change during the lifecycle of the page. Today, SpeedCurve is reporting INP for the first interaction. Other RUM tooling may also be limited by when the beacon is being sent, likely only collecting interactions that occur before the page is loaded.

- RUM, which uses JavaScript APIs, will not be able to collect INP from within iframes for the page they are measuring, while CrUX will.

- INP is collected for all supported browsers in SpeedCurve RUM. CrUX is a subset of Chrome users only.
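The first gotcha is easier to see with numbers. The sketch below takes the same set of interactions and reports three different values depending on what the tool can observe: only the first interaction, only interactions before a beacon is sent, or the whole page lifecycle. Everything here is hypothetical, including the names and the beacon time, and it simplifies INP to the slowest interaction in each window.

```typescript
interface ObservedInteraction {
  startTime: number; // ms since navigation start
  latencyMs: number; // time from interaction to next paint
}

// Slowest latency in a list, or undefined if the list is empty.
function worstLatency(list: ObservedInteraction[]): number | undefined {
  return list.length === 0 ? undefined : Math.max(...list.map((i) => i.latencyMs));
}

function compareInpViews(interactions: ObservedInteraction[], beaconTimeMs: number) {
  const ordered = [...interactions].sort((a, b) => a.startTime - b.startTime);
  return {
    // A tool that reports only the first interaction.
    firstInteraction: ordered.length > 0 ? ordered[0].latencyMs : undefined,
    // A tool limited to what happened before its beacon was sent.
    beforeBeacon: worstLatency(ordered.filter((i) => i.startTime <= beaconTimeMs)),
    // A tool that can keep updating the value until the user leaves the page.
    fullLifecycle: worstLatency(ordered),
  };
}

// Made-up page view: three interactions, beacon sent at 5 seconds.
console.log(
  compareInpViews(
    [
      { startTime: 500, latencyMs: 90 },
      { startTime: 3000, latencyMs: 240 },
      { startTime: 12000, latencyMs: 610 },
    ],
    5000
  )
); // firstInteraction 90, beforeBeacon 240, fullLifecycle 610
```

The same page view lands in three different threshold buckets, which is why numbers from two tools can disagree even when both are measuring correctly.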

How does INP correlate with user behavior?

While understanding INP is important, does it really correlate with how users interact with your site? It's easy to focus so much on improving metrics such as Core Web Vitals that we lose sight of the bigger picture. Creating a delightful experience for end users is at the heart of web performance.

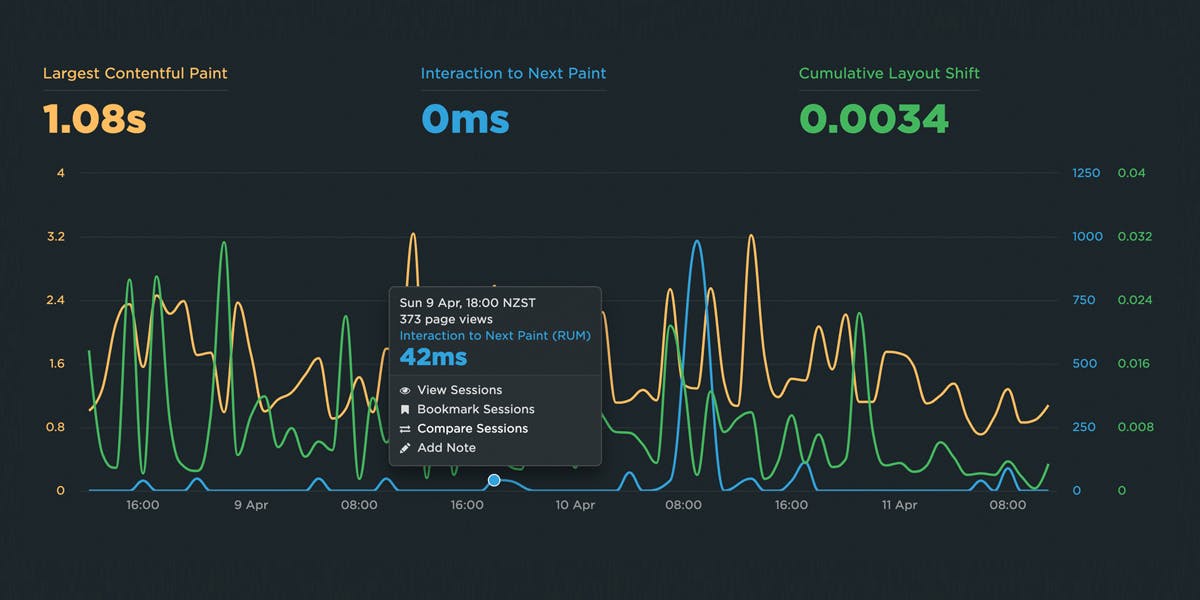

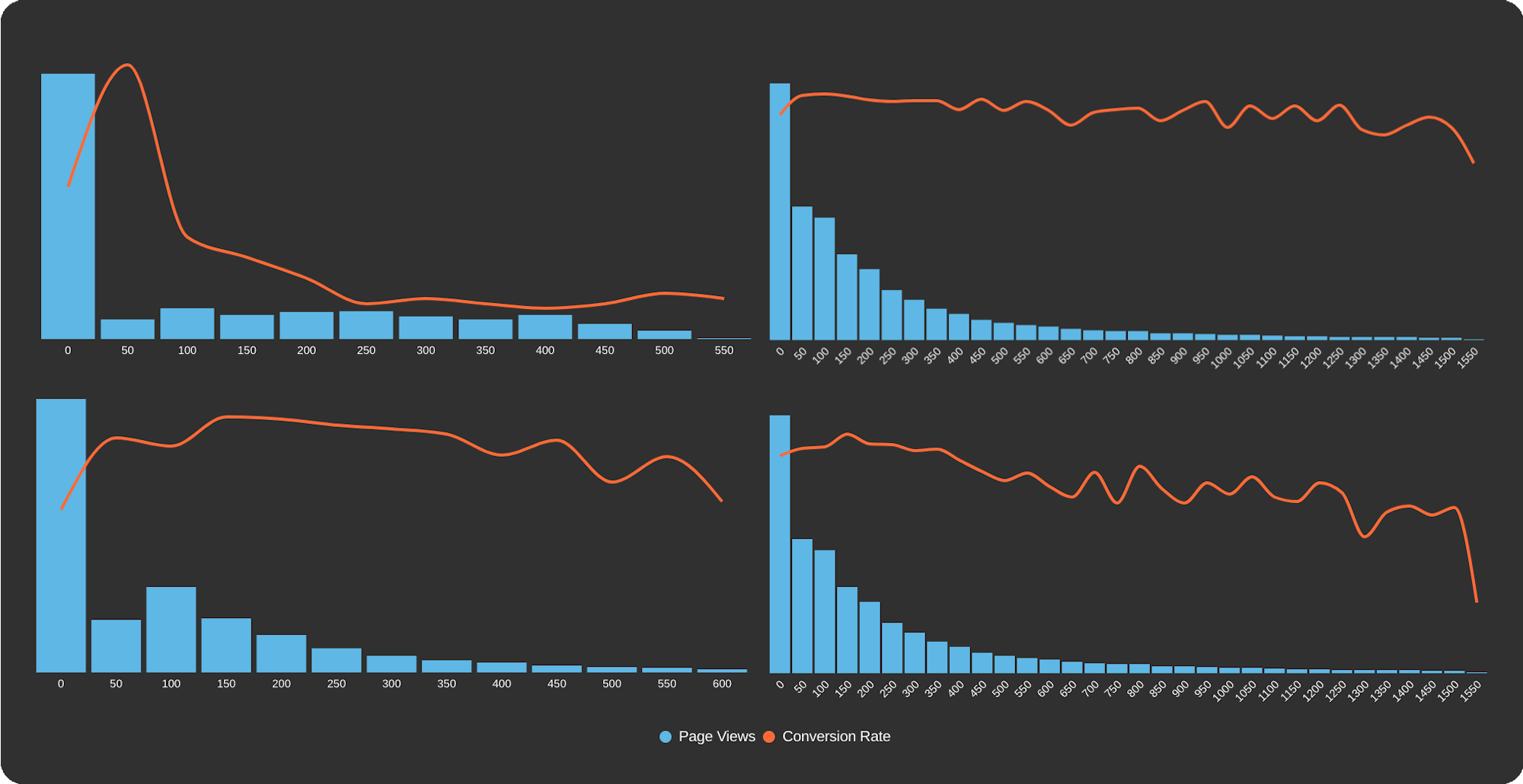

One way of understanding how metrics such as INP align with users is to correlate with outcomes, such as conversion rates. Here is an example of how INP correlated with conversion rates for four different sites.

Not surprisingly, we see the impact is different based on the slope of the conversion line, as well as the distribution of INP across user sessions. However, it's notable that there is an overall negative correlation between INP and conversion. This tells us that yes, INP seems to be a meaningful metric when it comes to user-perceived performance.
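If you want to try this kind of analysis on your own data, one simple approach is to group sessions into INP buckets and compute the conversion rate for each bucket. The sketch below assumes a hypothetical session record with one INP value and one converted flag. It is not how SpeedCurve builds its correlation charts, and the sample data is invented purely to show the shape of the output.

```typescript
// Hypothetical session record: one INP value and one outcome per session.
interface SessionSample {
  inpMs: number;
  converted: boolean;
}

interface BucketSummary {
  bucketStartMs: number;  // inclusive lower edge of the bucket
  sessions: number;       // sessions whose INP fell in this bucket
  conversionRate: number; // converted sessions / all sessions in the bucket
}

function conversionByInpBucket(samples: SessionSample[], bucketMs = 100): BucketSummary[] {
  const counts: Record<number, { sessions: number; converted: number }> = {};
  for (const s of samples) {
    const start = Math.floor(s.inpMs / bucketMs) * bucketMs;
    if (!counts[start]) counts[start] = { sessions: 0, converted: 0 };
    counts[start].sessions += 1;
    if (s.converted) counts[start].converted += 1;
  }
  // Return buckets in ascending INP order so the trend is easy to read.
  return Object.keys(counts)
    .map(Number)
    .sort((a, b) => a - b)
    .map((start) => ({
      bucketStartMs: start,
      sessions: counts[start].sessions,
      conversionRate: counts[start].converted / counts[start].sessions,
    }));
}

// Invented data: conversion drops as INP rises.
const bucketSummary = conversionByInpBucket([
  { inpMs: 80, converted: true },
  { inpMs: 95, converted: true },
  { inpMs: 150, converted: true },
  { inpMs: 180, converted: false },
  { inpMs: 420, converted: false },
  { inpMs: 460, converted: true },
  { inpMs: 470, converted: false },
  { inpMs: 490, converted: false },
]);
console.log(bucketSummary);
```

Keep an eye on the session count per bucket as well as the rate, since a bucket with only a handful of sessions can swing wildly.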

Measuring INP in SpeedCurve

We've added INP everywhere that your Vitals are showcased throughout SpeedCurve – including the Home, Vitals, Performance, and JavaScript dashboards.

FID hasn't disappeared from the dashboards yet, but likely will as we get closer to March 2024. If you're still interested in tracking FID at that point, you can do that by creating custom charts in your Favorites dashboards.

Summary

We hope that focusing on Interaction to Next Paint will drive more attention to the issues that were being glossed over by FID. There has been a lot of back and forth over whether or not improving your Core Web Vitals has a significant impact on search rankings. I don't have any data to make an informed statement on that question.

However, I will say that if you're solely optimizing your site for SEO purposes, perhaps you're missing the bigger picture. Doing the right thing for your users – including creating a responsive and delightfully fast experience – will have a lasting impact on your brand and yes, your revenue.

Resources

- INP Explainer

- Optimizing INP

- Adding INP to Favorites in SpeedCurve [video]

- Modern Frameworks and INP

- Core Web Vitals Changelog