The Definitive Guide to Long Animation Frames (LoAF)

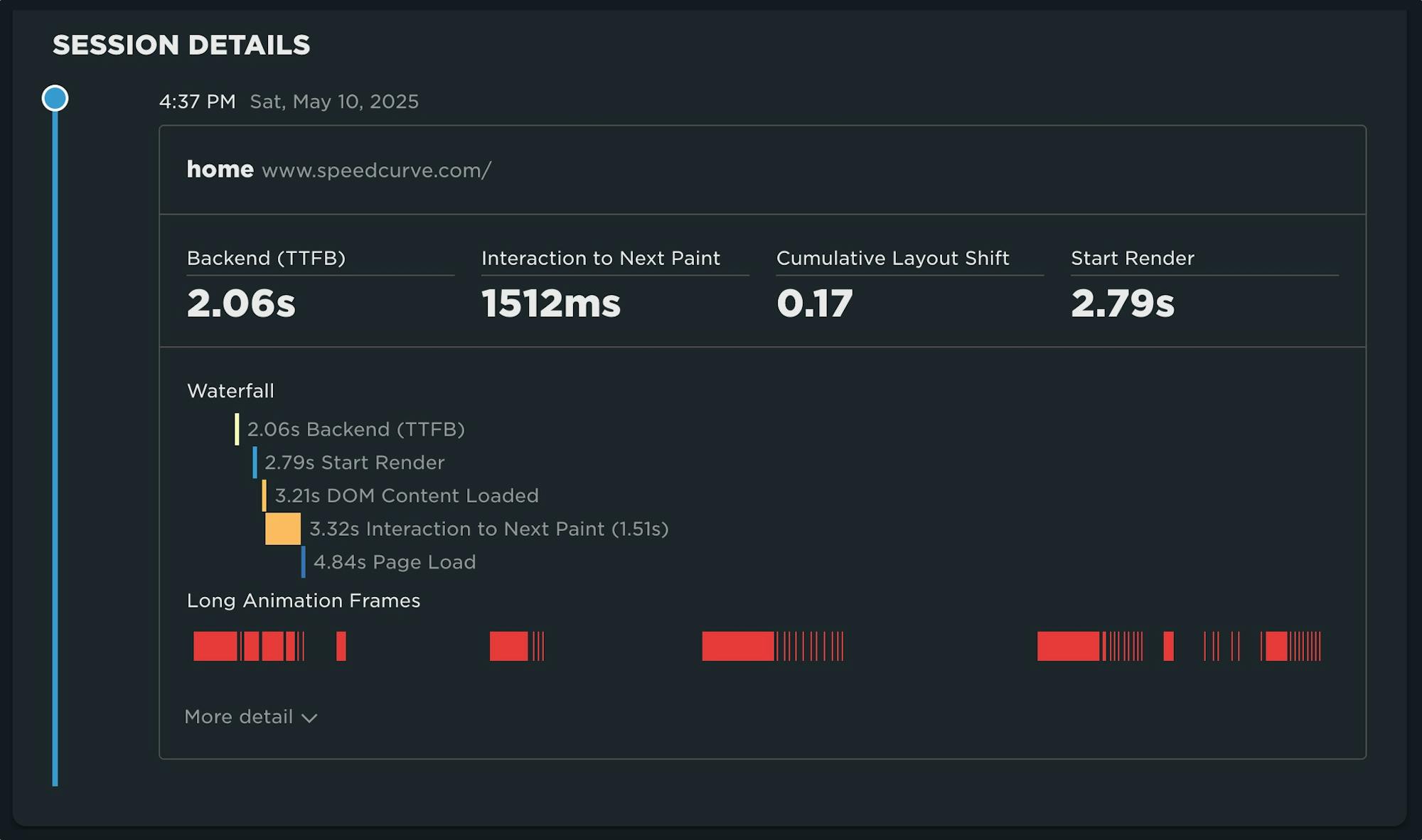

With Long Animation Frames (commonly referred to as LoAF, pronounced 'LO-aff') we finally have a way to understand the impact of our code on our visitors' experiences.

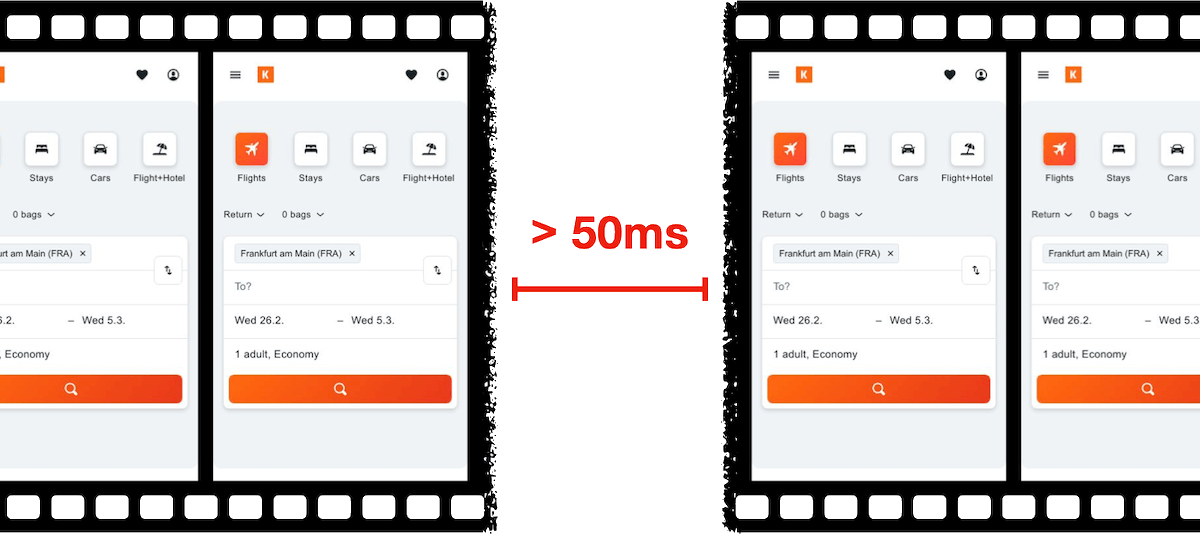

Long Animation Frame – a frame that took longer than 50ms from its start to when it started painting

LoAF allows us to understand how scripts and other tasks affect both hard and soft navigations, as well as how scripts affect interactions. Using the data LoAF provides, we can identify problem scripts and target changes that improve our visitors' experience. We can also finally start to quantify the impact of third-party scripts as they execute in our visitors' browsers.
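As a rough illustration of what capturing this data can look like, here is a minimal sketch. It assumes a browser that exposes the `long-animation-frame` entry type through `PerformanceObserver`; the helper names (`summariseLoaf`, `observeLoafs`) and the summary shape are hypothetical, and only a handful of the fields on a real entry are used.

```typescript
// Minimal shape of the LoAF fields used in this sketch; real entries carry more.
interface LoafEntryLike {
  startTime: number;        // when the frame started (ms since navigation start)
  duration: number;         // total frame duration (ms)
  blockingDuration: number; // how long the main thread was blocked (ms)
  scripts: { sourceURL: string; duration: number }[];
}

// Hypothetical summary shape, e.g. something we might beacon to a RUM endpoint.
interface LoafSummary {
  start: number;
  duration: number;
  blocking: number;
  scriptCount: number;
}

// Reduce one entry to a few rounded numbers.
function summariseLoaf(entry: LoafEntryLike): LoafSummary {
  return {
    start: Math.round(entry.startTime),
    duration: Math.round(entry.duration),
    blocking: Math.round(entry.blockingDuration),
    scriptCount: entry.scripts.length,
  };
}

// Start observing if the entry type is supported; returns false otherwise.
function observeLoafs(onLoaf: (summary: LoafSummary) => void): boolean {
  // Typed loosely so the sketch also compiles outside a browser.
  const PO = (globalThis as any).PerformanceObserver;
  const supported: string[] = PO?.supportedEntryTypes ?? [];
  if (supported.indexOf("long-animation-frame") === -1) return false;

  const observer = new PO((list: { getEntries(): LoafEntryLike[] }) => {
    for (const entry of list.getEntries()) onLoaf(summariseLoaf(entry));
  });
  // buffered: true also delivers frames recorded before we subscribed.
  observer.observe({ type: "long-animation-frame", buffered: true });
  return true;
}
```

Calling `observeLoafs(console.log)` in a supporting browser would log one summary per long frame; in other environments it simply returns `false`.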

Keep reading to learn:

- Why animation frame rate matters

- Anatomy of a Long Animation Frame

- Key LoAF milestones and what we can do with milestone data

- Script attribution (and why script details might sometimes be unavailable)

- How to match script data to Interaction to Next Paint, including sub-parts

- How to capture LoAF entries

- Getting started with LoAF

- LoAF support in SpeedCurve

NEW! Monitor Long Animation Frames and get to the bottom of your JavaScript issues

CPU consumption by the browser is one of the main causes – if not the number one cause – of a poor user experience. The primary culprit? JavaScript execution. Now you can use SpeedCurve to monitor Long Animation Frames (LoAFs) and fix the third parties and other scripts that are hurting your page speed.

Until recently, we've had little evidence from the field that definitively attributes the root cause of rendering delays. While JavaScript Long Tasks gave us a good indication that there were blocking tasks affecting metrics such as Interaction to Next Paint and Largest Contentful Paint, there was no way to attribute the work or understand how it was ultimately affecting rendering.

Fortunately, we've gotten a lot of help from Chrome in improving the attribution – and ultimately the actionability – of the data we collect in the field with RUM. The introduction of the Long Animation Frames API (LoAF) not only gives us better methods for understanding what's happening on the browser's main thread, in some cases it also gives us attribution to both first- and third-party scripts that run during a LoAF.
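To make the first- vs third-party idea concrete, here is a small sketch that totals script time per party for the scripts attached to a LoAF entry. It assumes each script exposes `sourceURL` and `duration`, and it treats "same origin as the page" as first party, which is a simplification (many sites serve their own code from a separate CDN hostname). The function names are hypothetical.

```typescript
// The two script fields this sketch relies on.
interface LoafScriptLike {
  sourceURL: string; // may be empty when the script cannot be attributed
  duration: number;  // ms spent in this script during the frame
}

type Party = "first-party" | "third-party" | "unattributed";

// Assumption: same origin as the page means first party.
function classifyScript(script: LoafScriptLike, pageOrigin: string): Party {
  if (!script.sourceURL) return "unattributed";
  try {
    return new URL(script.sourceURL).origin === pageOrigin
      ? "first-party"
      : "third-party";
  } catch {
    return "unattributed"; // not a parseable absolute URL
  }
}

// Sum script durations per party for one frame (or many frames' scripts).
function totalByParty(
  scripts: LoafScriptLike[],
  pageOrigin: string
): Record<Party, number> {
  const totals: Record<Party, number> = {
    "first-party": 0,
    "third-party": 0,
    unattributed: 0,
  };
  for (const script of scripts) {
    totals[classifyScript(script, pageOrigin)] += script.duration;
  }
  return totals;
}
```

In a browser, `pageOrigin` would typically be `location.origin`.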

This highly anticipated addition to SpeedCurve is available to all our RUM users today. This post covers what's new in the product and points you to a few new resources to help you get up to speed on all things related to LoAF.

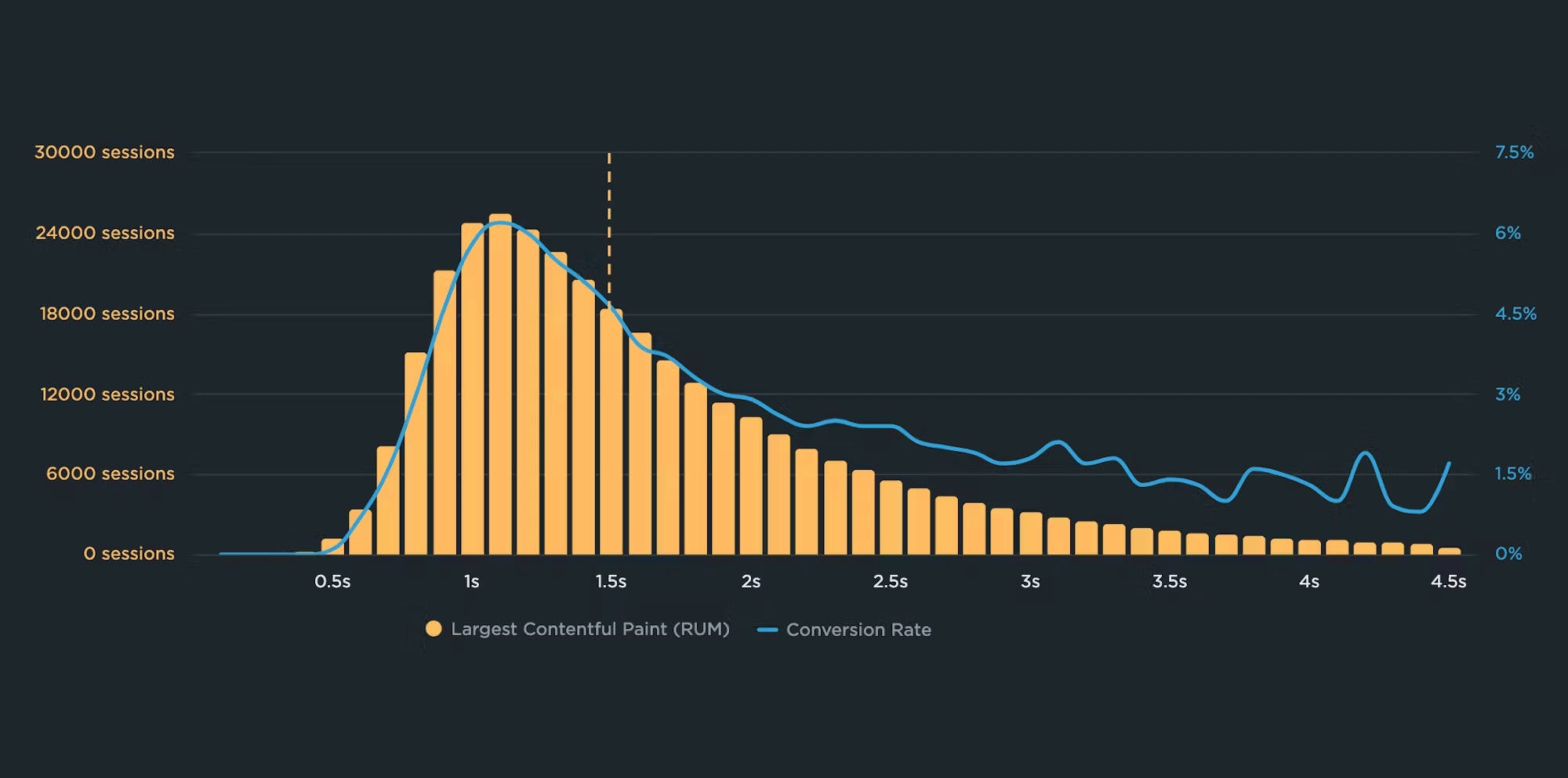

Correlation charts: Connect the dots between site speed and business success

If you could measure the impact of site speed on your business, how valuable would that be for you? Say hello to correlation charts – your new best friend.

Here's the truth: The business folks in your organization probably don't care about page speed metrics. But that doesn't mean they don't care about page speed. It just means you need to talk with them using metrics they already care about – such as conversion rate, revenue, and bounce rate.

That's why correlation charts are your new best friend.

Our 10 most popular web performance articles of 2024

We love writing articles and blog posts that help folks solve real web performance and UX problems. Here are the ones you loved most in 2024. (The number one item may surprise you!)

Some of these articles come from our recently published Web Performance Guide – a collection of evergreen how-to resources (written by actual humans!) that will help you master website monitoring, analytics, and diagnostics. The rest come from this blog, where we tend to publish industry news and analysis.

Regardless of the source, we hope you find these pieces useful!

A Holiday Wish: Core Web Vitals in Safari

Did you know that key performance metrics – like Core Web Vitals – aren't supported in Safari? If that's news to you, you're not alone! Here's why that is... and what we and the rest of the web performance community are doing to fix it.

Somebody pinch me. Seeing this post and the resulting thread gives me great hope.

Nicole Sullivan (aka Stubbornella, WebKit Engineering Manager at Apple, and OG web performance evangelist) isn't making promises or dangling a carrot. Nonetheless, her post is evidence of a willingness to discuss in public a topic that's been exhaustively discussed in our community for years. Nicole's post has gotten some great responses from many leaders in our community, hopefully shaping a strong use case for future WebKit support for Core Web Vitals.

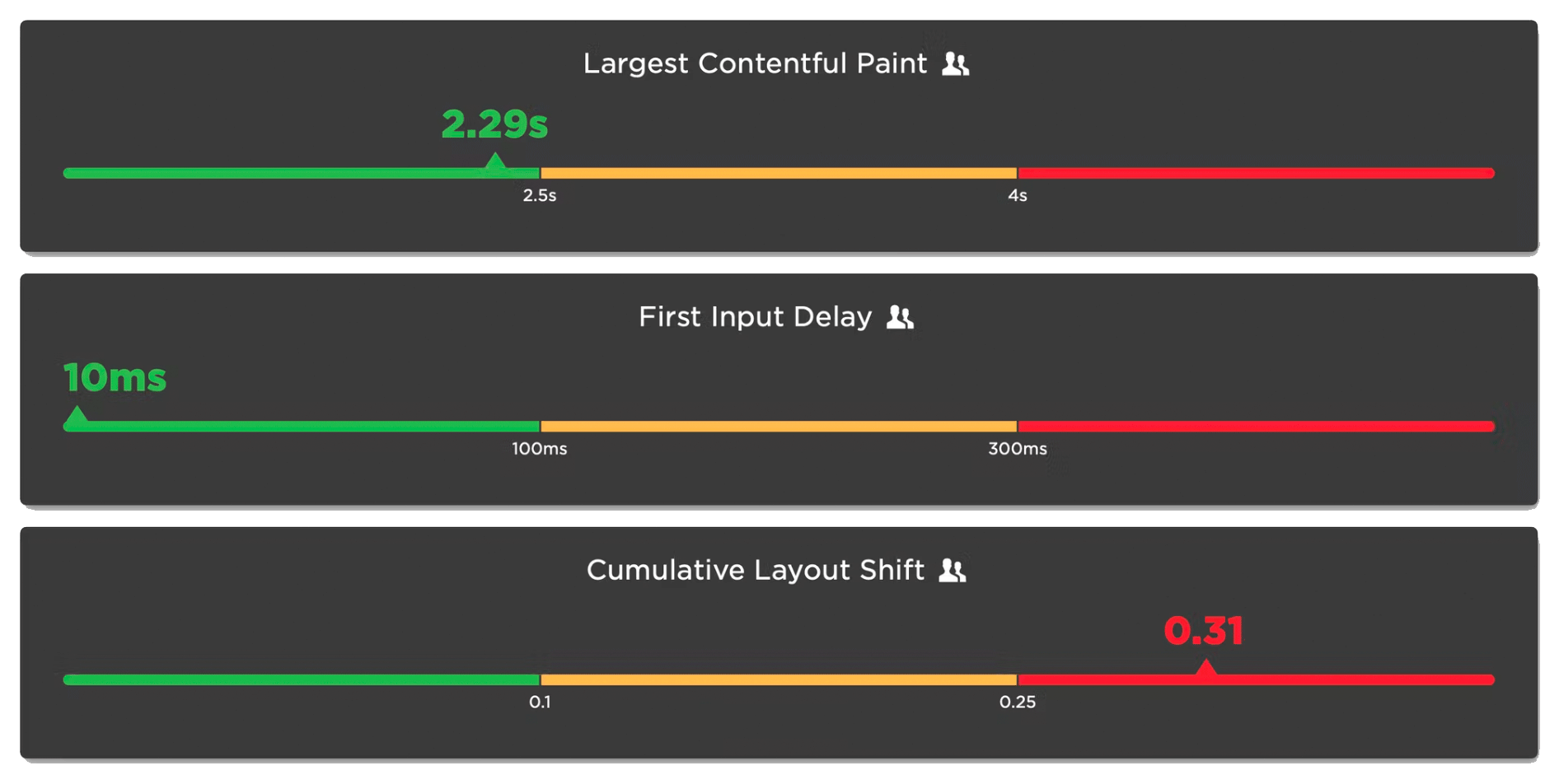

(If you're new to performance, Core Web Vitals is a set of three metrics – Largest Contentful Paint, Cumulative Layout Shift, and Interaction to Next Paint – that are intended to measure the rendering speed, interactivity, and visual stability of web pages.)

In this post, I'm going to highlight some of the discussion around the topic of Core Web Vitals and Safari, which was a major theme coming out of the recent web performance marathon in Amsterdam that included WebPerf Days, performance.sync(), and the main event, performance.now().

2024 Holiday Readiness Checklist (Page Speed Edition!)

Delivering a great user experience throughout the holiday season is a marathon, not a sprint. Here are ten things you can do to make sure your site is fast and available every day, not just Black Friday.

Your design and development teams are working hard to attract users and turn browsers into buyers, with strategies like:

- High-resolution images and videos

- Geo-targeted campaigns and content

- Third-party tags for audience analytics and retargeting

However, all those strategies can take a toll on the speed and user experience of your pages – and each carries the risk of introducing single points of failure (SPoFs).

Below we've curated ten steps for making your users happy throughout the holidays (and beyond). If you're scrambling to optimize your site before Black Friday, you still have time to implement some or all of these best practices. And if you're already close to being ready for your holiday code freeze, you can use this as a checklist to validate that you've ticked all the boxes on your performance to-do list.

How to provide better attribution for your RUM metrics

Here's a detailed walkthrough showing how to make more meaningful and intuitive attributions for your RUM metrics – which makes it much easier for you to zero in on your performance issues.

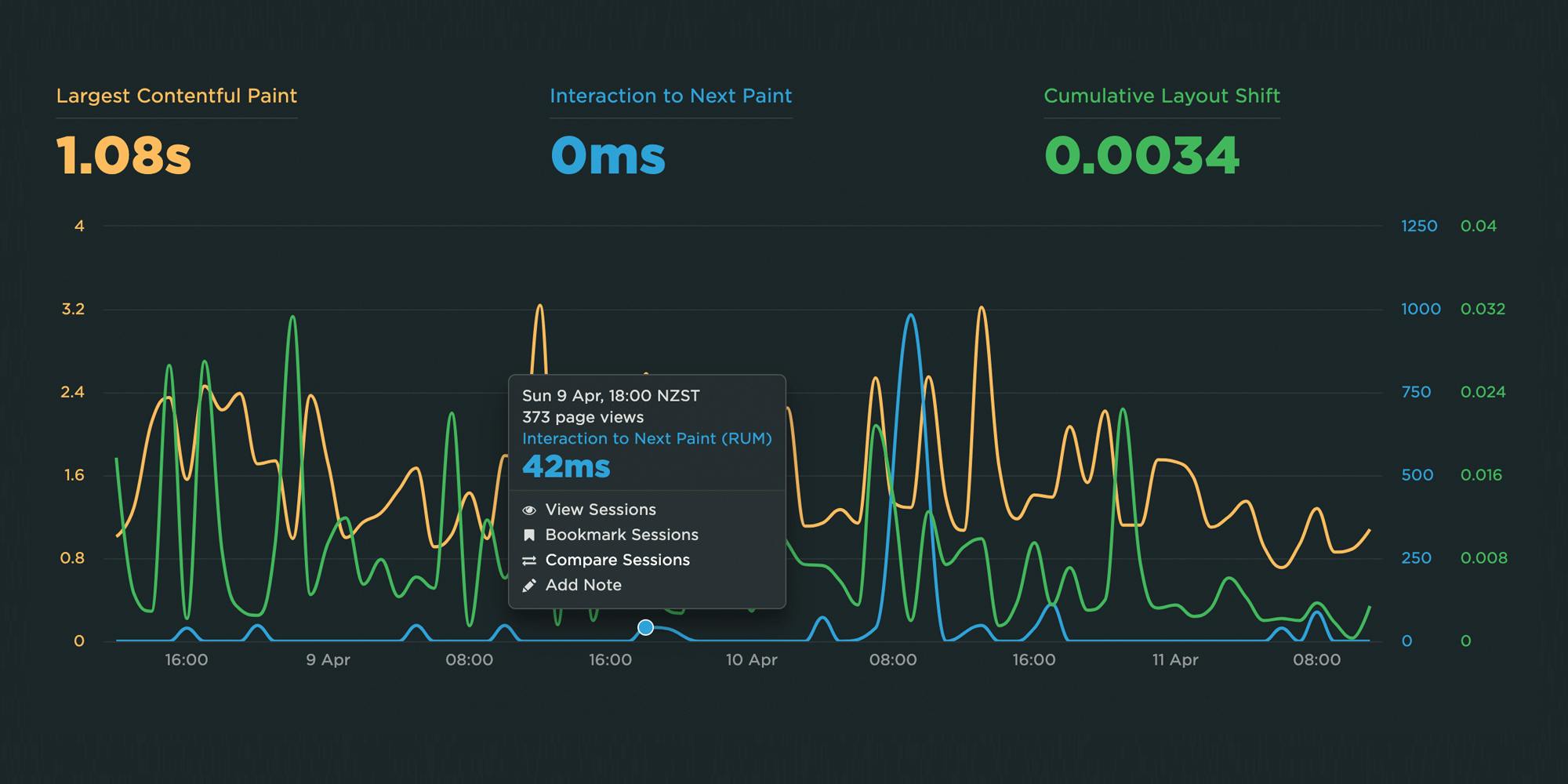

Real user monitoring (RUM) has always been incredibly important for any organization focused on performance. RUM – also known as field testing – captures performance metrics as real users browse your website and helps you understand how actual users experience your site. But it’s only in the last few years that RUM data has started to become more actionable, allowing you to diagnose what is making your pages slower or less usable for your visitors.

Making newer RUM metrics – such as Core Web Vitals – more actionable has been a significant priority for standards bodies. A big part of this shift has been better attribution, so we can tell what's actually going on when RUM metrics change.

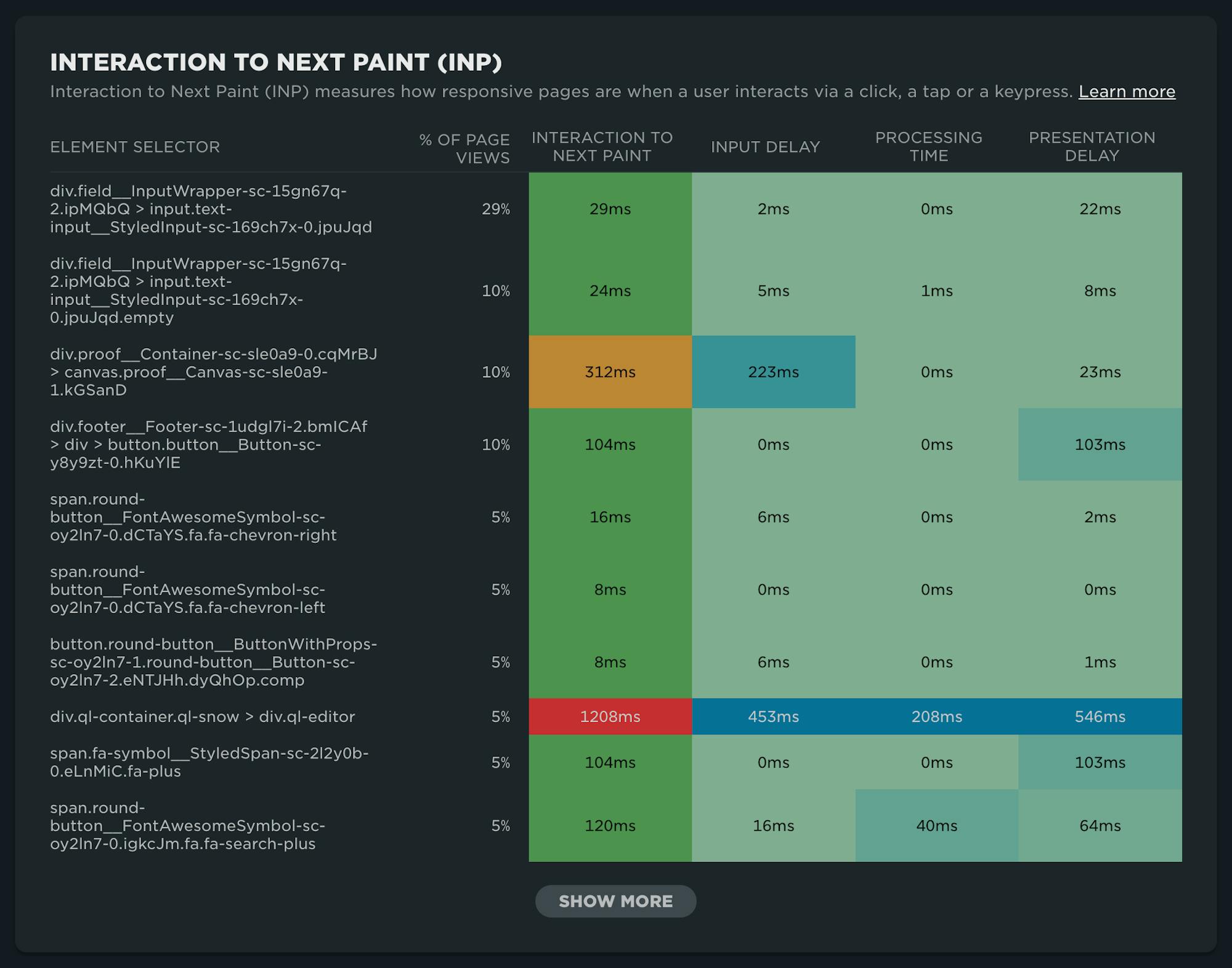

Core Web Vitals metrics – like Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS) – all have some level of attribution associated with them, which helps you identify what exactly is triggering the metric. The LoAF API is all about attribution, helping you zero in on which scripts are causing issues.

Having this attribution available, particularly when paired with meaningful subparts, can help us to quickly identify which specific components we should prioritize in our optimization work.

We can help make this attribution even more valuable by ensuring that key components in our page have meaningful, semantic attributes attached to them.
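To show why meaningful attributes matter, here is a hypothetical helper that turns an element into the kind of label a RUM tool might report. It is only a sketch: `describeElement` and the `ElementLike` shape are assumptions for illustration, not how any particular product builds its labels.

```typescript
// The few element properties this sketch looks at.
interface ElementLike {
  tagName: string;
  id?: string;
  className?: string;
}

// Prefer a meaningful id; fall back to tag plus class names, then the bare tag.
function describeElement(el: ElementLike): string {
  const tag = el.tagName.toLowerCase();
  if (el.id) return `${tag}#${el.id}`;
  const classes = (el.className ?? "").split(/\s+/).filter(Boolean);
  return classes.length > 0 ? `${tag}.${classes.join(".")}` : tag;
}
```

An element with `id="hero-image"` is reported as `img#hero-image`, which is far easier to act on than a label built from generated class names such as `div.css-1x2y3`.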

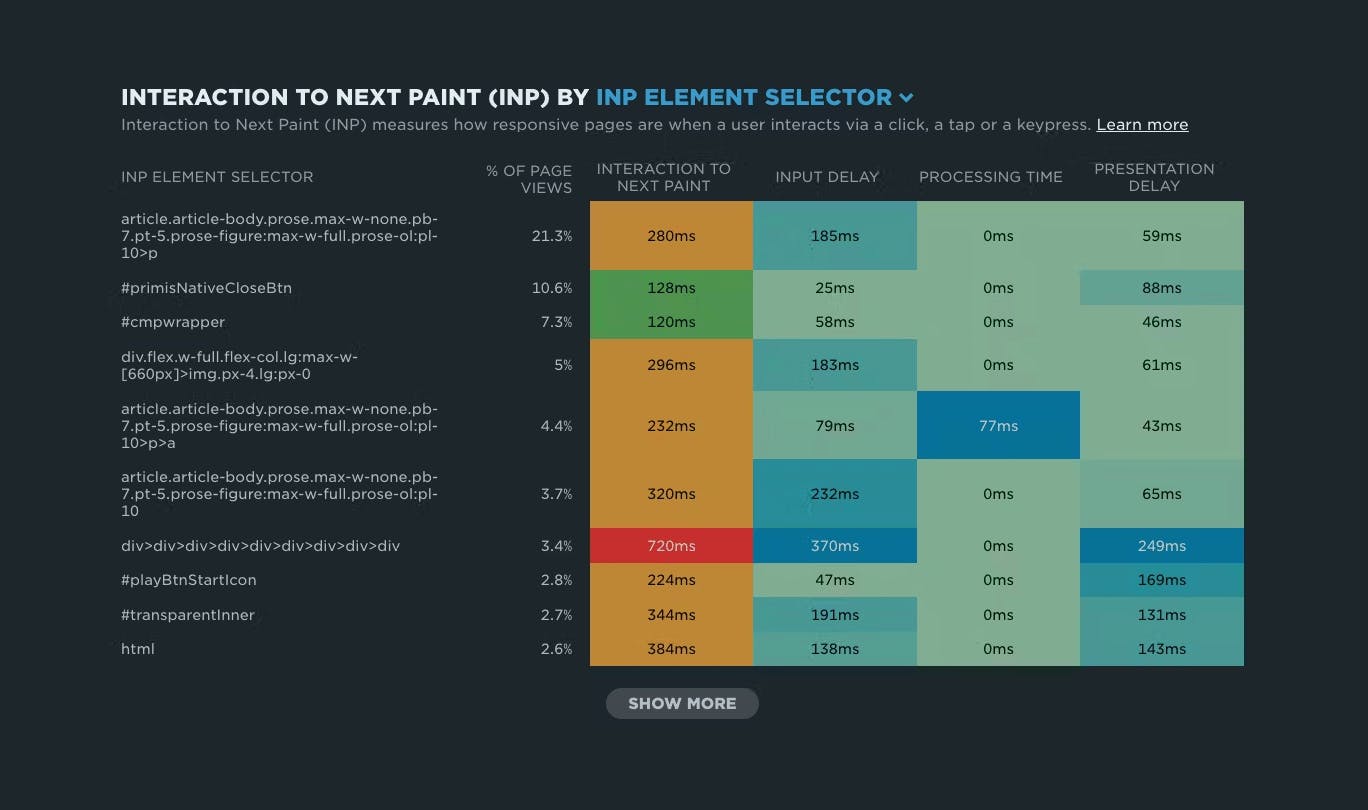

NEW: RUM attribution and subparts for Interaction to Next Paint!

Now it's even easier to find and fix Interaction to Next Paint issues and improve your Core Web Vitals.

Our newest release continues our theme of making your RUM data even more actionable. In addition to advanced settings, navigation types, and page attributes, we've just released more diagnostic detail for the latest flavor in Core Web Vitals: Interaction to Next Paint (INP).

This post covers:

- Element attribution for INP

- A breakdown of where time is spent within INP, leveraging subparts

- How to use this information to find and fix INP issues

- A look ahead at RUM diagnostics at SpeedCurve

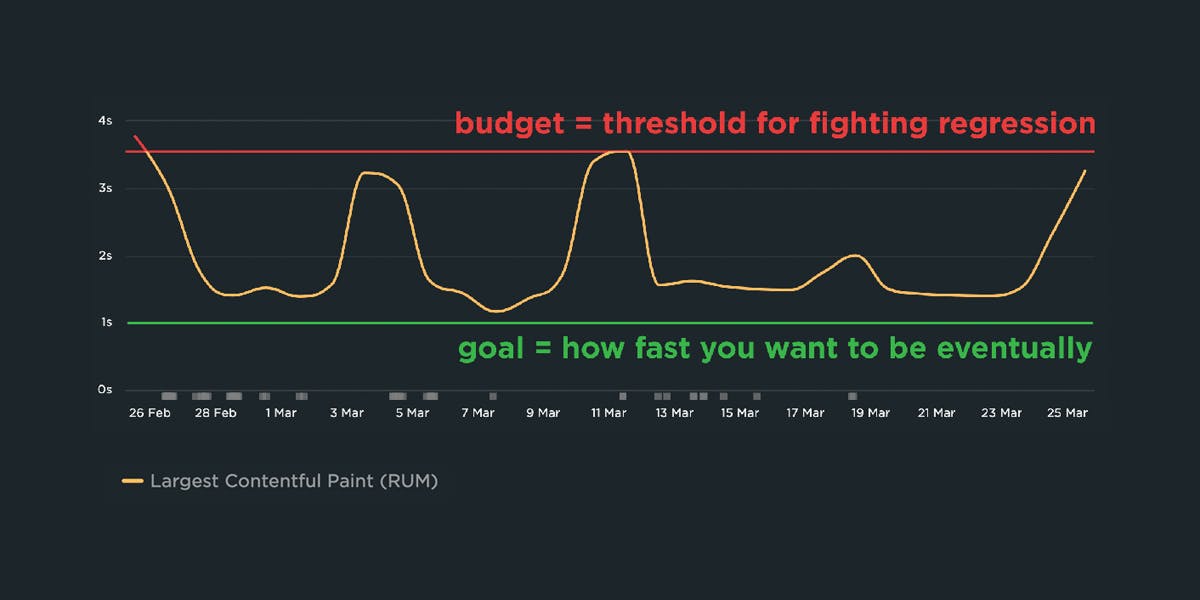

A Complete Guide to Web Performance Budgets

It's easier to make a fast website than it is to keep a website fast. If you've invested countless hours in speeding up your site, but you're not using performance budgets to prevent regressions, you could be at risk of wasting all your efforts.

In this post we'll cover how to:

- Use performance budgets to fight regressions

- Understand the difference between performance budgets and performance goals

- Identify which metrics to track

- Validate your metrics to make sure they're measuring what you think they are – and to see how they correlate with your user experience and business metrics

- Determine what your budget thresholds should be

- Focus on the pages that matter most

- Get buy-in from different stakeholders in your organization

- Integrate with your CI/CD process

- Synthesize your synthetic and real user monitoring data

- Maintain your budgets
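At its simplest, a budget check compares measured values against thresholds and reports what went over. The sketch below is one possible shape for that check; the metric names, units, and thresholds in the usage note are made-up examples, and `checkBudgets` is a hypothetical helper rather than part of any tool.

```typescript
interface Budget {
  metric: string; // e.g. "lcp" in ms, or "jsBytes" in bytes
  max: number;    // the threshold that must not be exceeded
}

interface Violation {
  metric: string;
  value: number;
  max: number;
  overBy: number;
}

// Return one violation per metric that exceeds its budget.
function checkBudgets(
  measured: Record<string, number>,
  budgets: Budget[]
): Violation[] {
  const violations: Violation[] = [];
  for (const budget of budgets) {
    const value = measured[budget.metric];
    if (value === undefined) continue; // metric was not collected
    if (value > budget.max) {
      violations.push({
        metric: budget.metric,
        value,
        max: budget.max,
        overBy: value - budget.max,
      });
    }
  }
  return violations;
}
```

A CI step could fail the build whenever `checkBudgets(...)` returns a non-empty array, for example when a measured `lcp` of 2900 exceeds a budget of 2500.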

The bottom of this post also contains a collection of case studies from companies that are using performance budgets to stay fast.

Let's get started!

Hello INP! Here's everything you need to know about the newest Core Web Vital

After years of development and testing, Google has added Interaction to Next Paint (INP) to its trifecta of Core Web Vitals – the performance metrics that are a key ingredient in its search ranking algorithm. INP replaces First Input Delay (FID) as the Vitals responsiveness metric.

Not sure what INP means or why it matters? No worries – that's what this post is for. :)

- What is INP?

- Why has it replaced First Input Delay?

- How does INP correlate with user behaviour metrics, such as conversion rate?

- What you need to know about INP on mobile devices

- How to debug and optimize INP

And at the bottom of this post, we'll wrap things up with some inspiring case studies from companies that have found that improving INP has improved sales, pageviews, and bounce rate.

Let's dive in!

Debugging Interaction to Next Paint (INP)

Not surprisingly, most of the conversations I've had with SpeedCurve users over the last few months have focused on improving INP.

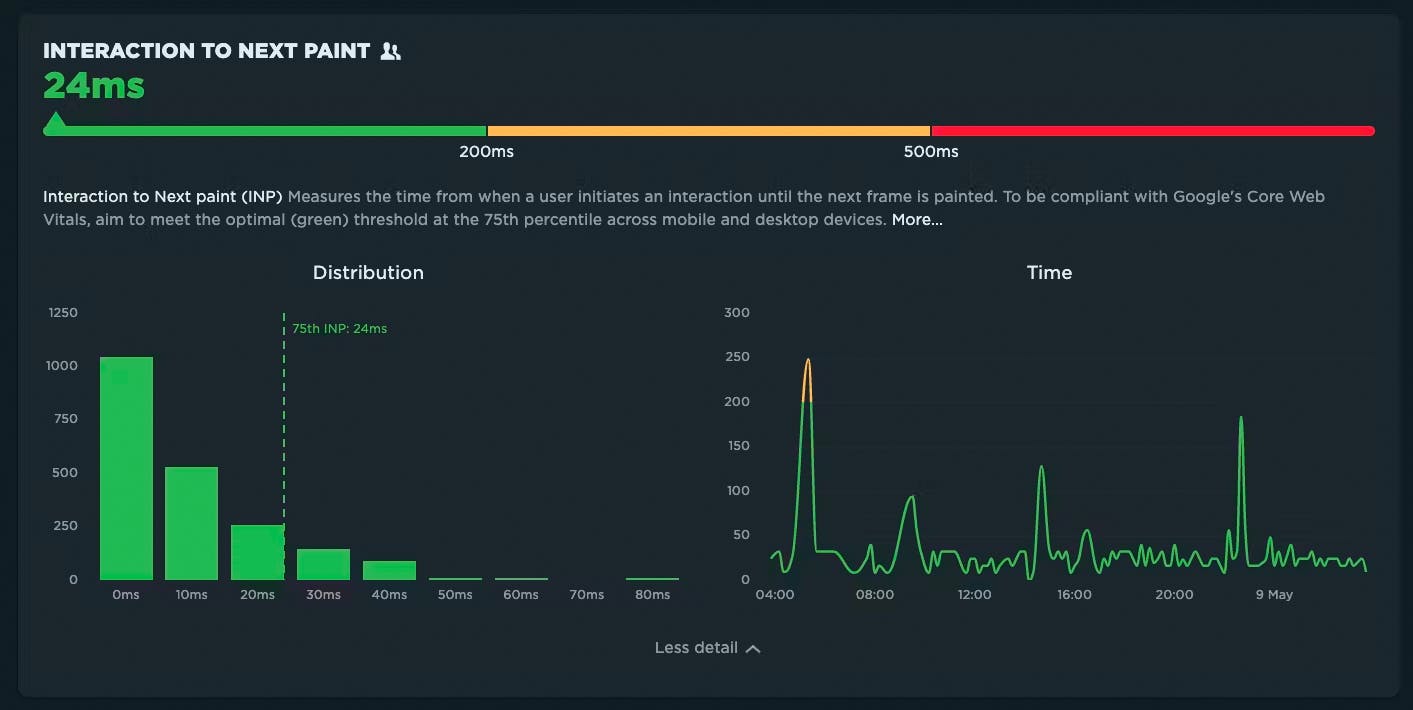

INP measures how responsive a page is to visitor interactions. It measures the elapsed time between a tap, a click, or a keypress and the browser next painting to the screen.

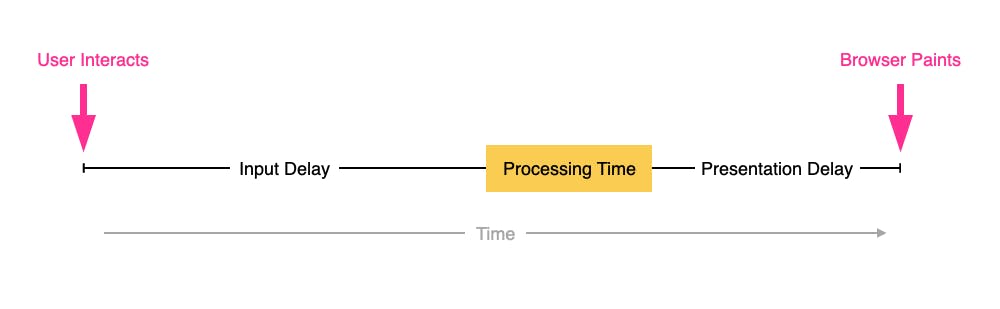

INP breaks down into three sub-parts

- Input Delay – How long the interaction handler has to wait before executing

- Processing Time – How long the interaction handler takes to execute

- Presentation Delay – How long it takes the browser to execute any work it needs to paint updates triggered by the interaction handler
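The three sub-parts can be derived from the timestamps on an Event Timing entry. The sketch below assumes a single entry with `startTime`, `processingStart`, `processingEnd`, and `duration`; a real interaction can span several events (for example pointerdown, pointerup, and click), so treat this as a simplification. `inpSubparts` is a hypothetical name.

```typescript
// The Event Timing fields needed to split an interaction into sub-parts.
interface EventTimingLike {
  startTime: number;       // when the input happened
  processingStart: number; // when the event handlers started running
  processingEnd: number;   // when the event handlers finished
  duration: number;        // input to next paint (browsers round this value)
}

interface InpSubparts {
  inputDelay: number;
  processingTime: number;
  presentationDelay: number;
}

function inpSubparts(entry: EventTimingLike): InpSubparts {
  return {
    // Waiting for the main thread before handlers could run.
    inputDelay: entry.processingStart - entry.startTime,
    // Time spent inside the event handlers.
    processingTime: entry.processingEnd - entry.processingStart,
    // From handlers finishing to the next paint; clamped because duration is rounded.
    presentationDelay: Math.max(
      0,
      entry.startTime + entry.duration - entry.processingEnd
    ),
  };
}
```

For an entry with `startTime` 1000, `processingStart` 1040, `processingEnd` 1160, and `duration` 240, this gives 40ms of input delay, 120ms of processing time, and 80ms of presentation delay.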

Pages can have multiple interactions, so the INP time you'll see reported by RUM products and other tools, such as Google Search Console and Chrome's UX Report (CrUX), will generally be the worst (highest) interaction time for each page view, reported at the 75th percentile across page views.

Like all Core Web Vitals, INP has a set of thresholds:

INP thresholds for Good, Needs Improvement, and Poor
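In code, the thresholds and the 75th percentile can be sketched as follows. The 200ms and 500ms boundaries are Google's published INP thresholds; the nearest-rank percentile is just one common method and an assumption here, since tools differ in how they compute percentiles.

```typescript
type InpRating = "good" | "needs-improvement" | "poor";

// Good is 200ms or less; Poor is above 500ms; everything between needs improvement.
function rateInp(ms: number): InpRating {
  if (ms <= 200) return "good";
  if (ms <= 500) return "needs-improvement";
  return "poor";
}

// Nearest-rank percentile over a list of per-page-view INP values.
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("percentile of an empty list");
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}
```

For example, `rateInp(percentile([120, 80, 640, 210], 75))` rates four page views whose 75th percentile is 210ms as `"needs-improvement"`.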

Many sites tend to be in the Needs Improvement or Poor categories. My experience over the last few months is that getting to Good is achievable, but it's not always easy.

In this post I'm going to walk through:

- How I help people identify the causes of poor INP times

- Examples of some of the most common issues

- Approaches I've used to help sites improve their INP

How to use Server Timing to get backend transparency from your CDN

80% of end-user response time is spent on the front end.

That performance golden rule still holds true today. However, that pesky 20% on the back end can have a big impact on downstream metrics like First Contentful Paint (FCP), Largest Contentful Paint (LCP), and any other 'loading' metric you can think of.

Server-timing headers are a key tool in understanding what's happening within that black box of Time to First Byte (TTFB).

In this post we'll explore a few areas:

- Look at industry benchmarks to get an idea of how a slow backend influences key metrics, including Core Web Vitals

- Demonstrate how you can use server-timing headers to break down where that time is being spent

- Provide examples of how you can use server-timing headers to get more visibility into your content delivery network (CDN)

- Show how you can capture server-timing headers in SpeedCurve
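For a feel of what a server-timing header carries, here is a minimal parser sketch. Browsers already expose parsed values through the `serverTiming` property on navigation and resource timing entries, so this is mainly useful for logs or server-side checks. The metric names in the usage note are made up, and the naive splitting assumes descriptions contain no commas or semicolons.

```typescript
interface ServerTimingMetric {
  name: string;
  dur?: number;  // duration in milliseconds, if provided
  desc?: string; // free-text description, if provided
}

// Parse a Server-Timing header value into its metrics.
function parseServerTiming(header: string): ServerTimingMetric[] {
  return header
    .split(",")
    .map((part) => part.trim())
    .filter((part) => part.length > 0)
    .map((part) => {
      const pieces = part.split(";").map((piece) => piece.trim());
      const metric: ServerTimingMetric = { name: pieces[0] };
      for (const param of pieces.slice(1)) {
        const eq = param.indexOf("=");
        if (eq === -1) continue; // parameter without a value
        const key = param.slice(0, eq).trim().toLowerCase();
        // Strip optional surrounding quotes from the value.
        const value = param.slice(eq + 1).trim().replace(/^"|"$/g, "");
        if (key === "dur" && value !== "" && isFinite(Number(value))) {
          metric.dur = Number(value);
        } else if (key === "desc") {
          metric.desc = value;
        }
      }
      return metric;
    });
}
```

Parsing `cdn-cache;desc=HIT, edge;dur=12.5, origin;dur=230;desc="App server"` yields three metrics, which makes it easy to see how much of TTFB was spent at the origin versus the CDN edge.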

How to find (and fix!) INP interactions on your pages

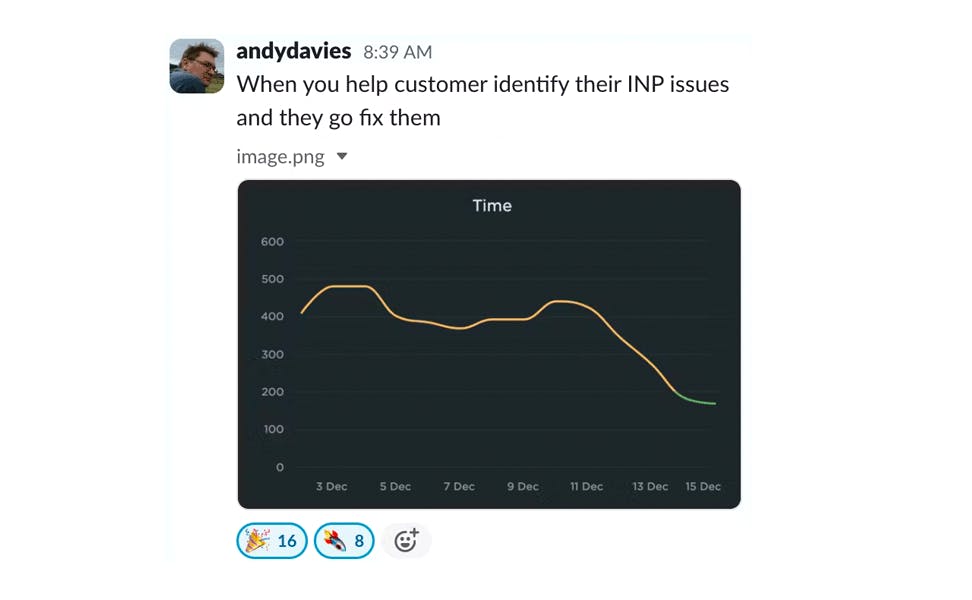

Andy Davies – fellow SpeedCurver and web performance consultant extraordinaire – recently shared an impressive Interaction to Next Paint (INP) success:

Andy has promised us a more in-depth post on debugging Interaction to Next Paint. While he's working on that, I'll try not to steal his thunder while I share a tip that may help you identify element(s) causing INP issues for your pages.

Mobile INP performance: The elephant in the room

Earlier this year, when Google announced that Interaction to Next Paint (INP) will replace First Input Delay (FID) as the responsiveness metric in Core Web Vitals in *gulp* March of 2024, we had a lot to say about it. (TLDR: FID doesn't correlate with real user behavior, so we don't endorse it as a meaningful metric.)

Our stance hasn't changed much since then. For the most part, everyone agrees the transition from FID to INP is a good thing. INP certainly seems to be capturing interaction issues that we see in the field.

However, after several months of discussing the impending change and getting a better look at INP issues in the wild, it's hard to ignore the fact that mobile stands out as the biggest INP offender by a wide margin. This doesn't get talked about as much as it should, so in this post we'll explore:

- The gap between "good" INP for desktop vs mobile

- Working theories as to why mobile INP is so much poorer than desktop INP

- Correlating INP with user behavior and business metrics (like conversion rate)

- How you can track and improve INP for your pages

10 things I love about SpeedCurve (that I think you'll love, too)

This month, SpeedCurve enters double digits with our tenth birthday. We're officially in our tweens! (Cue the mood swings?)

I joined the team in early 2017, and I'm blown away at how quickly the years have flown by. Every day, I marvel at my great luck in getting to work alongside an amazing team to build amazing tools to help amazing people like you!

In the spirit of celebration, I thought it would be fun to round up my ten favourite things to do in SpeedCurve (that I think you'll like, too). Keep scrolling to learn how to:

- Fight regressions and stay fast

- See the impact of performance on your business

- Benchmark your site against your competitors

- Track third parties to make sure they're not quietly hurting performance

- Make sure you're tracking the best metrics for your pages

- Get a prioritized list of performance recommendations

- Bookmark and compare synthetic tests and RUM sessions so you can quickly find and fix performance issues

- Run A/B tests so you see how code changes affect your performance and user engagement metrics

- Get customized weekly reports

- Motivate your team with a wall-mounted monitor showing your favourite charts

Demystifying Cumulative Layout Shift with CLS Windows

As we all know, naming things is hard.

Google's Core Web Vitals are an attempt to help folks new to web performance focus on three key metrics. Not all of these metrics are easy to understand based on their names alone:

- Largest Contentful Paint (LCP) – When the largest visual element on the page renders

- First Input Delay (FID) – How quickly a page responds to a user interaction (FID will be replaced by Interaction to Next Paint in March 2024)

- Cumulative Layout Shift (CLS) – How visually stable a page is

Any time a new metric is introduced, it puts the burden on the rest of us to first unpack all the acronyms, and then explore and digest what concepts the words might refer to. This gets even trickier if the acronym stays the same, but the logic and algorithm behind the acronym changes.

In this post, we will dive deeper into Cumulative Layout Shift (CLS) and how it has quietly evolved over the years. Because CLS has been around for a while, you may already have some idea of what it represents. Before we go any further, I have a simple question for you:

How do you think Cumulative Layout Shift is measured?

Hold your answer in your head as we explore the depths of CLS. I'm interested to see whether your assumptions were correct, and there's a poll at the bottom of this post I'd love you to answer.

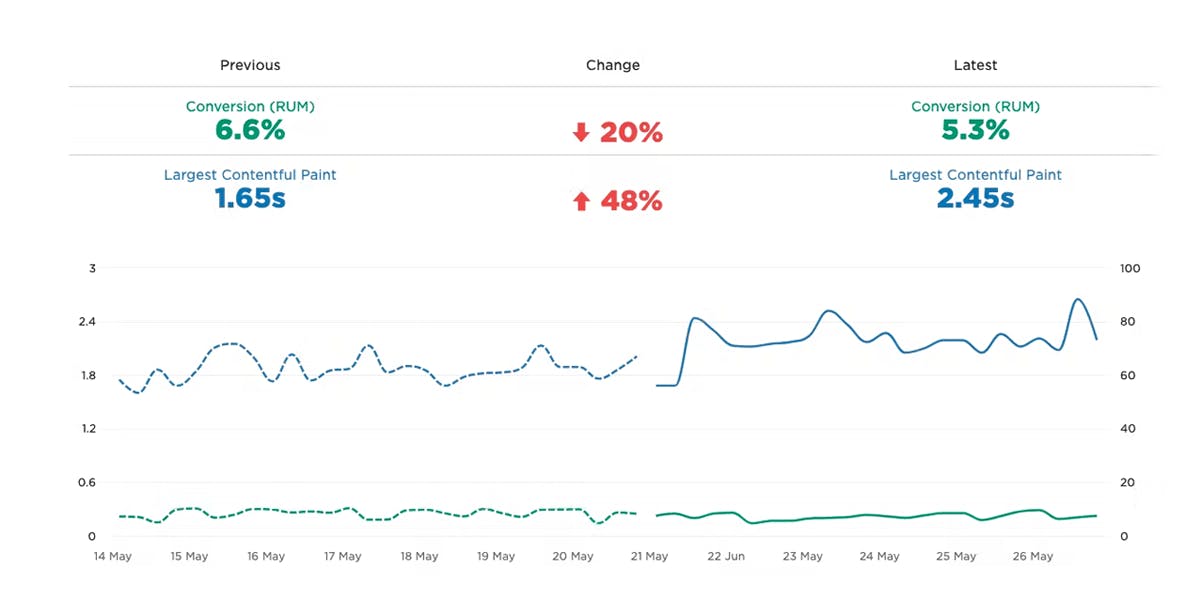

Exploring performance and conversion rates just got easier

Demonstrating the impact of performance on your users – and on your business – is one of the best ways to get your company to care about the speed of your site.

Tracking goal-based metrics like conversion rate alongside performance data can give you richer and more compelling insights into how the performance of your site affects your users. This concept is not new by any means. In 2010, the Performance and Reliability team I was fortunate enough to lead at Walmartlabs shared our findings around the impact of front-end times on conversion rates. (This study and a number of other case studies tracked over the years can be found at WPOstats.)

Setting up conversion tracking in SpeedCurve RUM is fairly simple and definitely worthwhile. This post covers:

- What is a conversion?

- How to track conversions in SpeedCurve

- Using conversion data with performance data for maximum benefit

- Conversion tracking and user privacy

Farewell FID... and hello Interaction to Next Paint!

Today at Google I/O 2023, it was announced that Interaction to Next Paint (INP) is no longer an experimental metric. INP will replace First Input Delay (FID) as a Core Web Vital in March of 2024.

It's been three years since the Core Web Vitals initiative was kicked off in May 2020. In that time, we've seen people's interest in performance dramatically increase, especially in the world of SEO. It's been hugely helpful to have a simple set of three metrics – focused on loading, interactivity, and visual stability – that everyone can understand and focus on.

During this time, SpeedCurve has stayed objective when looking at the CWV metrics. When it comes to new performance metrics, it's easy to jump on hype-fuelled bandwagons. While we definitely get excited about emerging metrics, we also approach each new metric with an analytical eye. For example, back in November 2020, we took a closer look at one of the Core Web Vitals, First Input Delay, and found that it was sort of 'meh' overall when it came to meaningfully correlating with actual user behavior.

Now that INP has arrived to dethrone FID as the responsiveness metric for Core Web Vitals, we've turned our eye to scrutinizing its effectiveness.

In this post, we'll take a closer look and attempt to answer:

- What is Interaction to Next Paint?

- How does INP compare to FID?

- What is a 'good' INP result?

- Will there be differences between INP collected in RUM vs. Chrome User Experience Report (CrUX)?

- What correlation does INP have with real user behavior?

- When should you start caring about INP?

- How can you see INP for your own site in SpeedCurve?

Onward!

NEW! Lighthouse 10, Core Web Vitals updates, and Interaction to Next Paint

There is a lot of excitement in the world of web performance these days, and April has been no exception! At SpeedCurve, we've been focused on staying on top of the items that affect you the most.

Here is a look at what's new in SpeedCurve:

- Support for Lighthouse 10, including metric scoring changes as well as audits

- Updated RUM Core Web Vitals, including the much-anticipated addition of Interaction to Next Paint (INP)

All of this community-driven work is having a big impact on our collective goal of making performance accessible for everyone.

Read on to learn more about these exciting changes!

Element Timing: One true metric to rule them all?

One of the great things about Google's Core Web Vitals is that they provide a standard way to measure our visitors’ experience. Core Web Vitals can answer questions like:

- When was the largest element displayed? Largest Contentful Paint (LCP) measures when the largest visual element (such as an image, video, or block of text) finishes rendering.

- How much did the content move around as it loads? Cumulative Layout Shift (CLS) measures the visual stability of a page.

- How responsive was the page to visitors' actions? First Input Delay (FID) measures how quickly a page responds to a user interaction, such as a click/tap or keypress.

Sensible defaults, such as Core Web Vitals, are a good start, but one pitfall of standard measures is that they can miss what’s actually most important.

The (potential) problems with Largest Contentful Paint

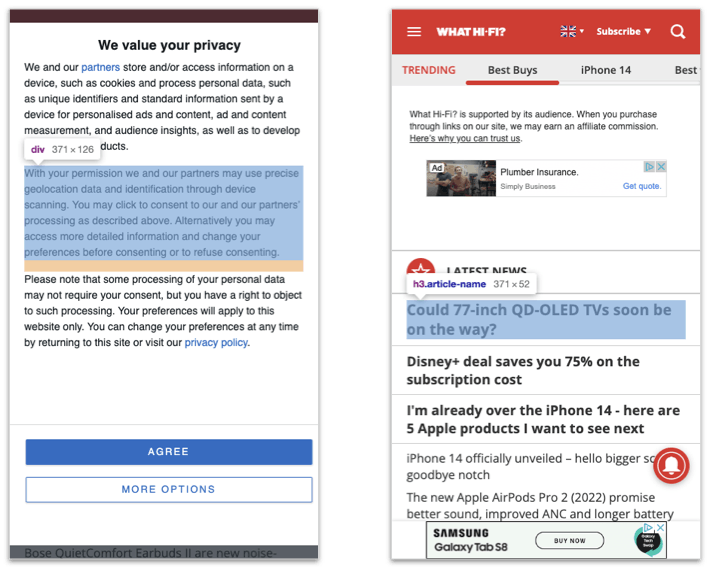

Largest Contentful Paint (LCP) makes the assumption that the largest visible element is the most important content from the visitors’ perspective; however, we don’t have a choice about which element it measures. LCP may not be measuring the most appropriate – or even the same – element for each page view.

The LCP element can vary for first-time vs repeat visitors

In the case of a first-time visitor, the largest element might be a consent banner. On subsequent visits to the same page, the largest element might be an image for a product or a photo that illustrates a news story.

The screenshots from What Hi Fi (a UK audio-visual magazine) illustrate this problem. When the consent banner is shown, one of its paragraphs is the LCP element. When the consent banner is not shown, an article title becomes the LCP element. In other words, the LCP timestamp varies depending on which of these two experiences the visitor had!
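Element Timing, the API named in this post's title, lets us pick the element ourselves by adding an `elementtiming` attribute to it and reading the matching entries (Chromium-based browsers only at the time of writing). The sketch below assumes entries with `identifier`, `renderTime`, and `loadTime`; the `elementRenderTime` helper and the `article-title` identifier are hypothetical.

```typescript
// The Element Timing fields used in this sketch.
interface ElementTimingLike {
  identifier: string; // the value of the element's elementtiming attribute
  renderTime: number; // when the element was painted (can be 0, e.g. some cross-origin images)
  loadTime: number;   // when an image finished loading (0 for text)
}

// Find the timestamp for the element we chose to care about.
function elementRenderTime(
  entries: ElementTimingLike[],
  identifier: string
): number | undefined {
  for (const entry of entries) {
    if (entry.identifier !== identifier) continue;
    // Fall back to loadTime when renderTime is not exposed.
    return entry.renderTime > 0 ? entry.renderTime : entry.loadTime;
  }
  return undefined; // the element was never reported
}
```

With markup such as `<h1 elementtiming="article-title">`, the same element is measured for every visitor, whether or not a consent banner happens to be the largest thing on screen.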

What Hi Fi with and without the consent banner visible
