Mobile INP performance: The elephant in the room

Earlier this year, when Google announced that Interaction to Next Paint (INP) will replace First Input Delay (FID) as the responsiveness metric in Core Web Vitals in *gulp* March of 2024, we had a lot to say about it. (TLDR: FID doesn't correlate with real user behavior, so we don't endorse it as a meaningful metric.)

Our stance hasn't changed much since then. For the most part, everyone agrees the transition from FID to INP is a good thing. INP certainly seems to be capturing interaction issues that we see in the field.

However, after several months of discussing the impending change and getting a better look at INP issues in the wild, it's hard to ignore the fact that mobile stands out as the biggest INP offender by a wide margin. This doesn't get talked about as much as it should, so in this post we'll explore:

- The gap between "good" INP for desktop vs mobile

- Working theories as to why mobile INP is so much poorer than desktop INP

- Correlating INP with user behavior and business metrics (like conversion rate)

- How you can track and improve INP for your pages

Only 2/3 of mobile sites have "good" INP

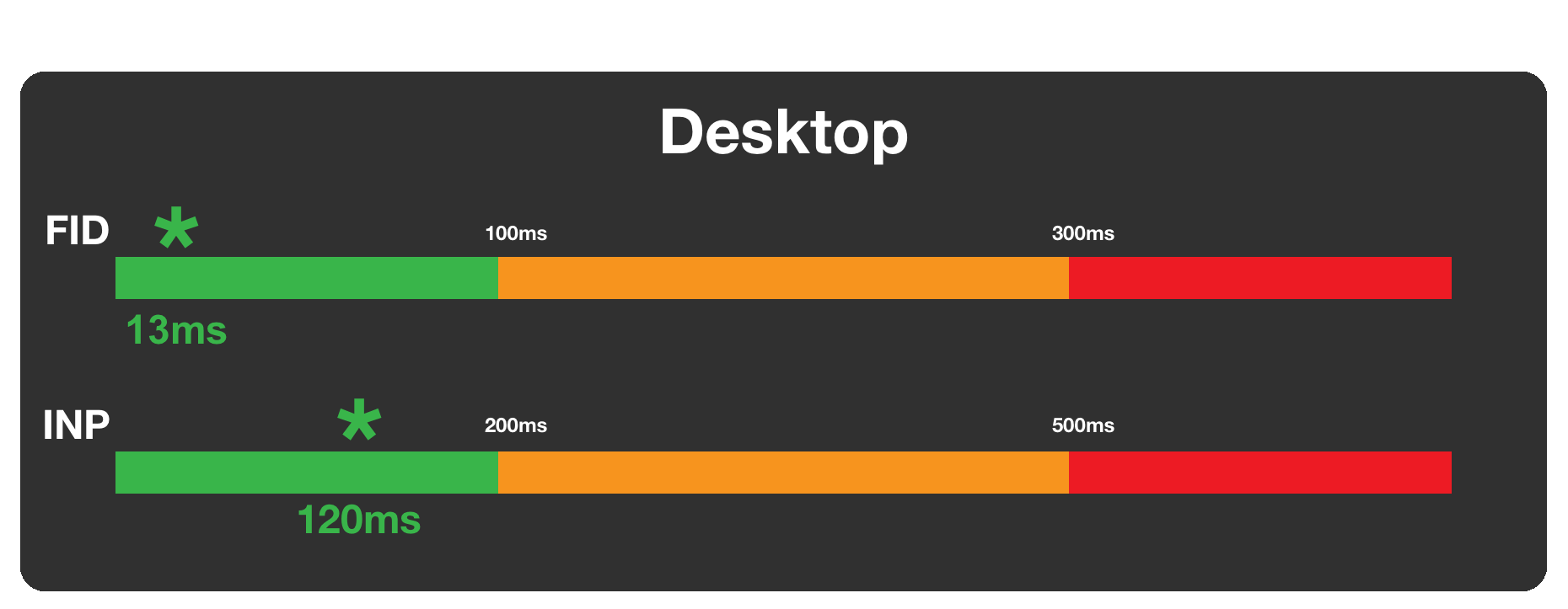

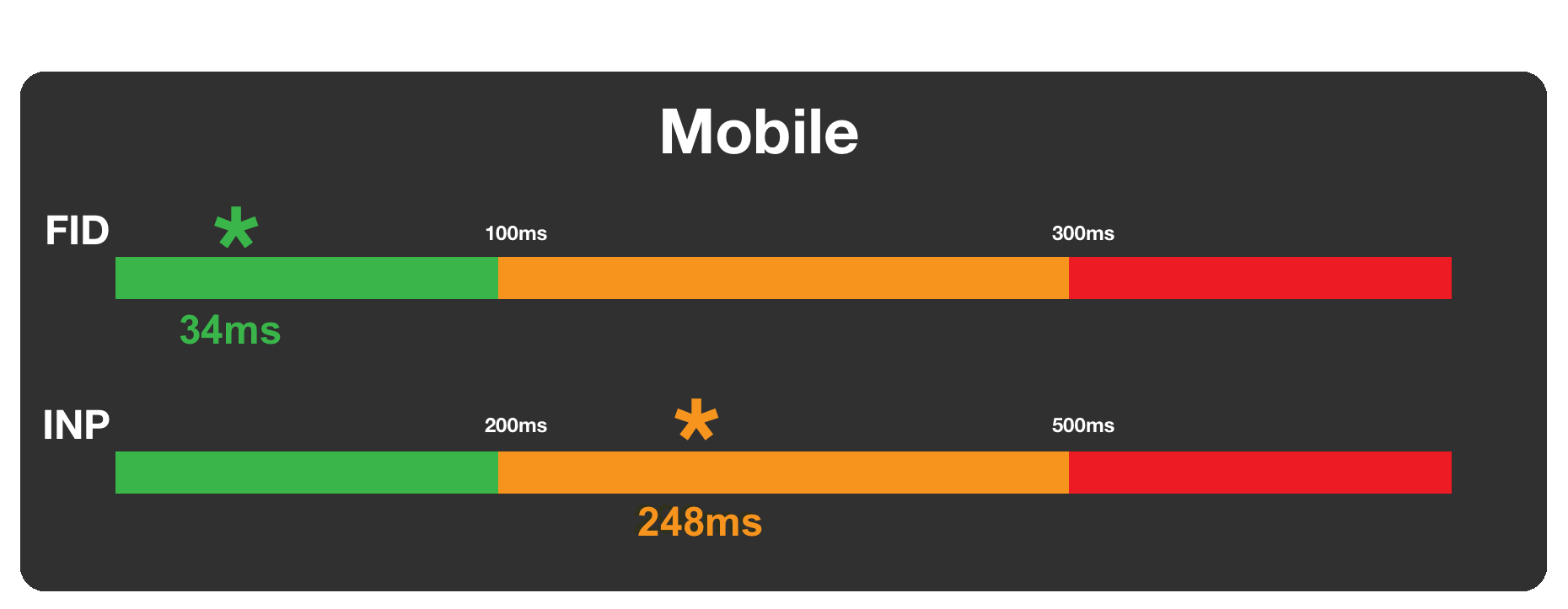

According to Google, a "good" Interaction to Next Paint time – for both desktop and mobile – is 200 milliseconds or less.

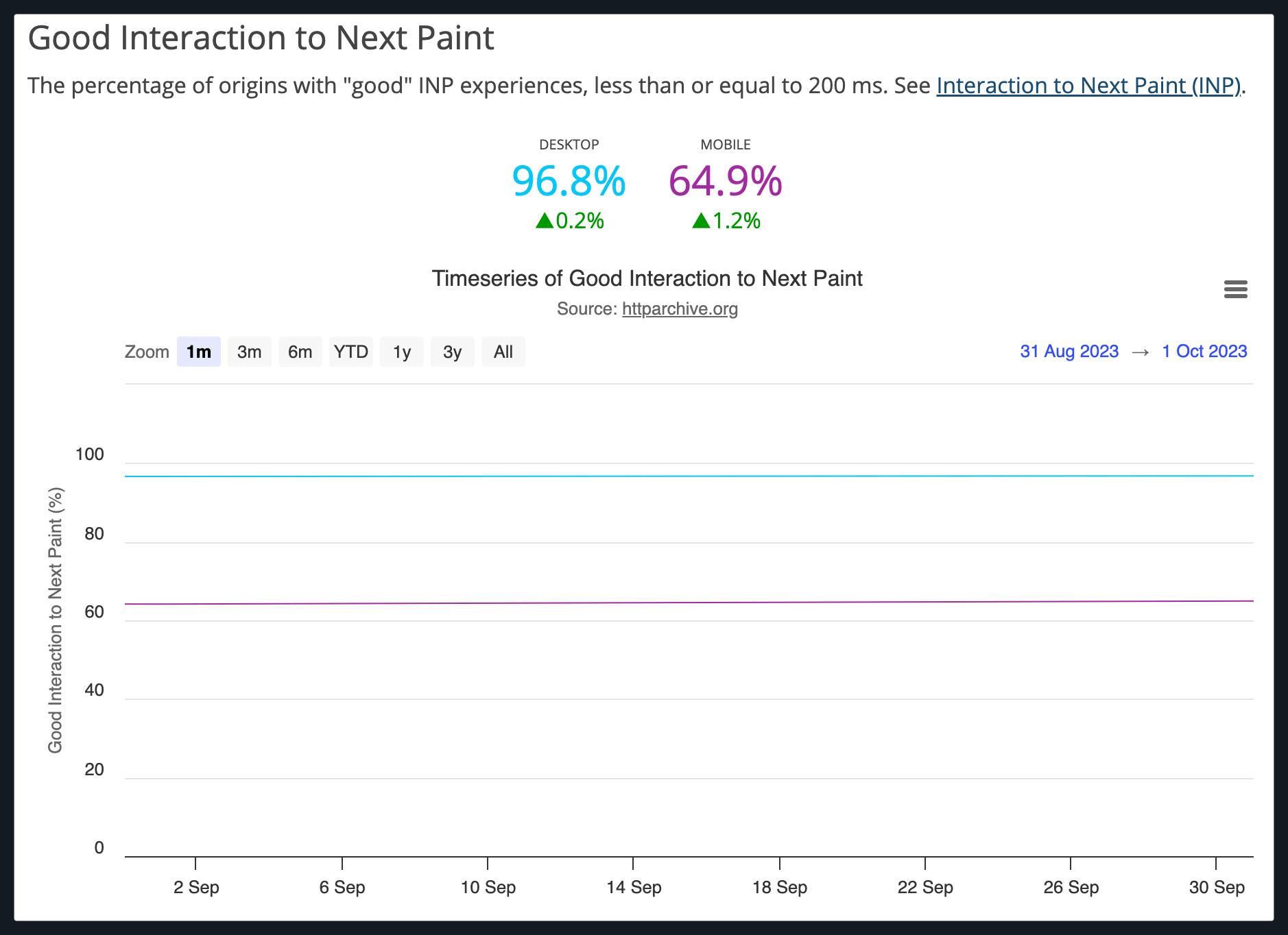

Looking at data from the HTTP Archive, which tracks performance metrics for the top million sites on the web, the percentage of sites that have good INP is 96.8% for desktop, but only 64.9% for mobile.

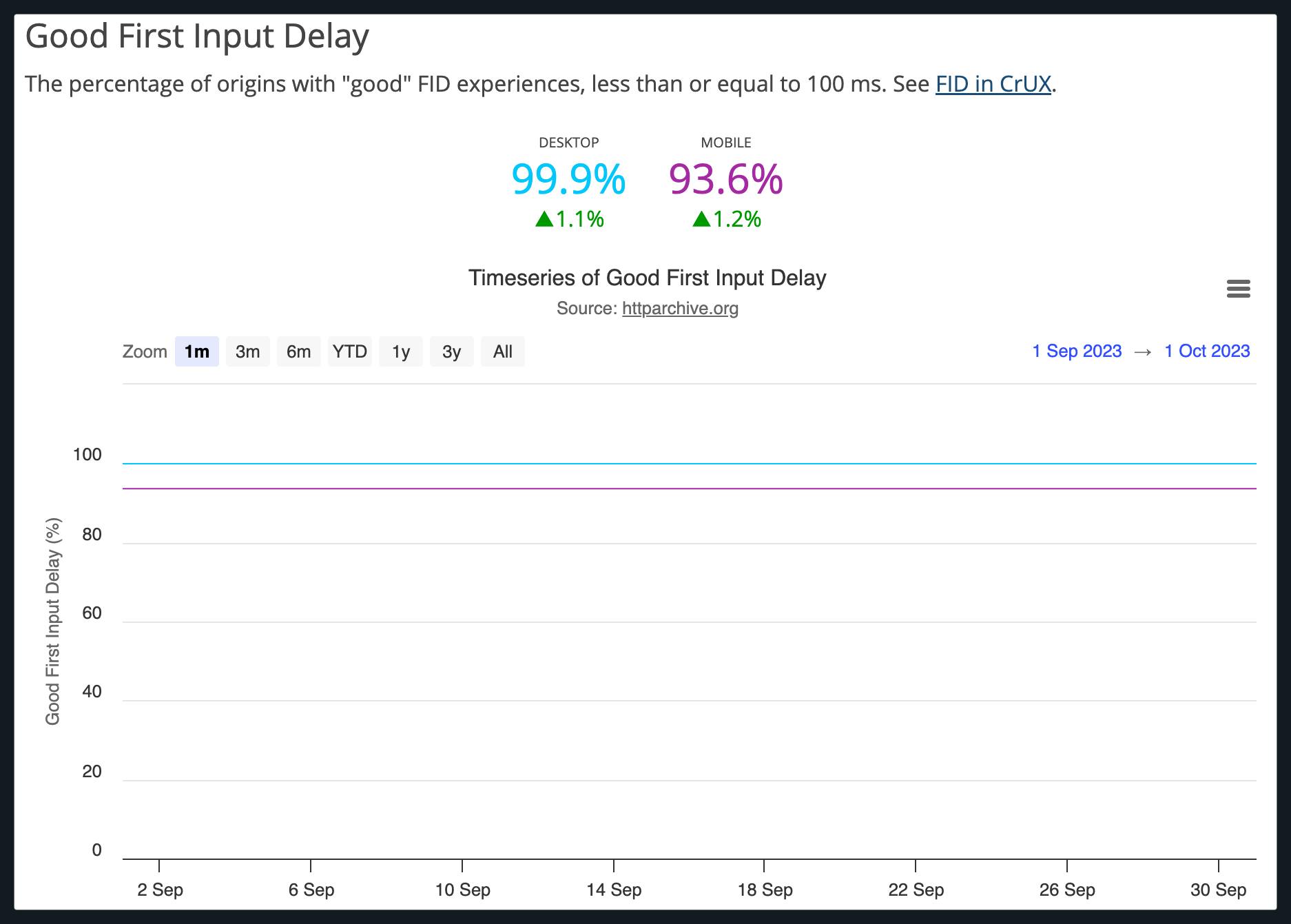

Not to belabor the point, but FID tells quite a different story, with virtually all (99.9%) desktop sites and 93.6% of mobile sites demonstrating a "good" FID rating (100ms or less).

Our own data reflects a similar story. At the 75th percentile, desktop INP across pages measured for all origins does reasonably well at 120ms, while mobile is quite a bit slower at 248ms.
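
To make "at the 75th percentile" concrete, here is a minimal sketch of how a p75 value could be derived from raw samples and then rated. The sample values and the function names are hypothetical; the 200ms "good" threshold is the one quoted above, and the 500ms cut-off is Google's published boundary for "poor". It uses a simple nearest-rank percentile, which may differ slightly from how any given RUM tool aggregates.

```typescript
// Thresholds published by Google for INP, in milliseconds.
const INP_GOOD_MS = 200;
const INP_POOR_MS = 500;

type InpRating = "good" | "needs-improvement" | "poor";

// Nearest-rank percentile: the smallest sample such that at least
// `p` percent of all samples are less than or equal to it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

function rateInp(inpMs: number): InpRating {
  if (inpMs <= INP_GOOD_MS) return "good";
  if (inpMs <= INP_POOR_MS) return "needs-improvement";
  return "poor";
}

// Hypothetical per-page-view INP values (ms), for illustration only.
const mobileSamples = [90, 120, 150, 180, 210, 260, 320, 640];
const p75 = percentile(mobileSamples, 75);
console.log(p75, rateInp(p75)); // 260 needs-improvement
```

Applied to the figures above, 120ms on desktop rates as "good", while 248ms on mobile lands in "needs improvement".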

The elephant in the room: Why does mobile INP perform so poorly?

And why does desktop do so well? We are still working on understanding that. Here are some theories I explored...

Latency is traditionally worse on mobile

Despite improved network conditions in some parts of the world, round trip time is still much worse for mobile than desktop. However, if latency were the cause, why do we see a smaller divide between mobile and desktop on metrics that are certainly more sensitive to network conditions?

It's also a bit of a stretch to think that Interaction to Next Paint has much to do with network requests, other than when those requests are triggered by an event handler. As we've discussed before, most interactions happen after the page has loaded.

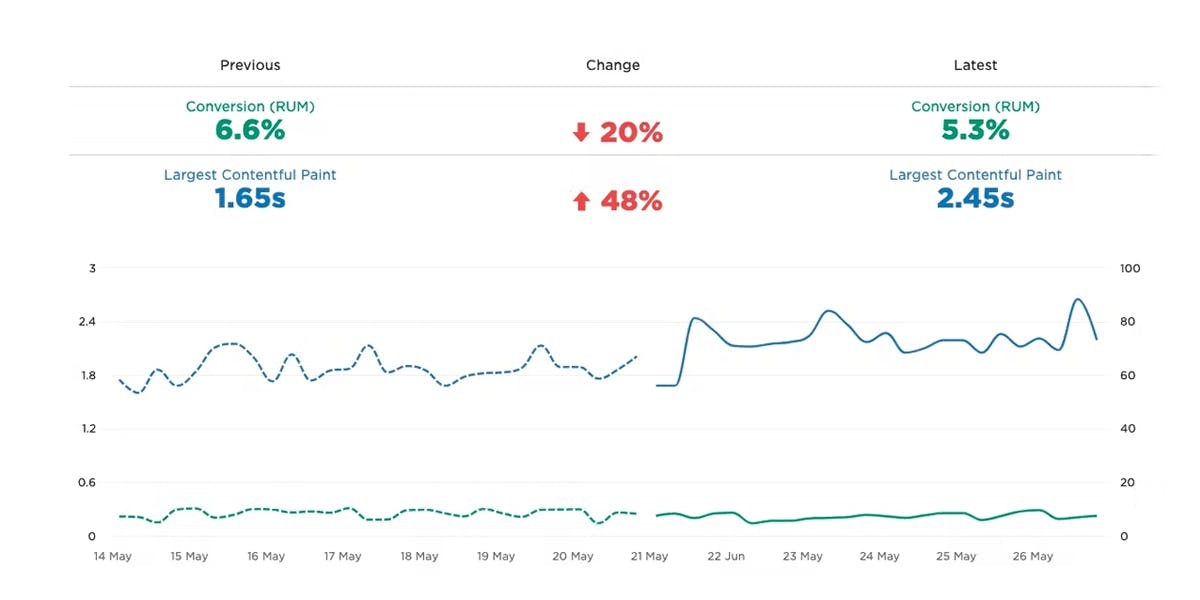

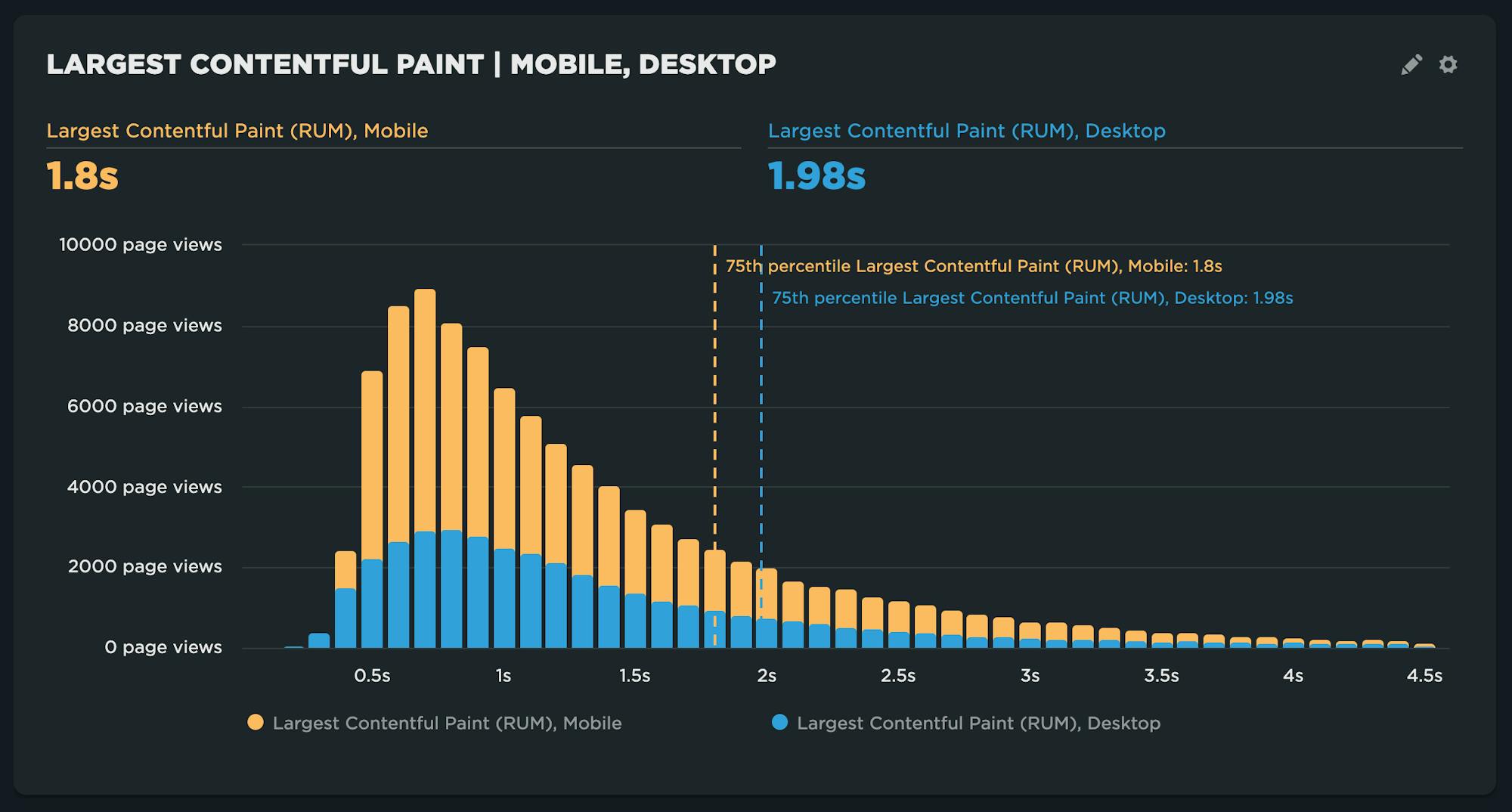

In this example, mobile is only slightly slower than desktop when looking at Largest Contentful Paint (LCP) at the 75th percentile.

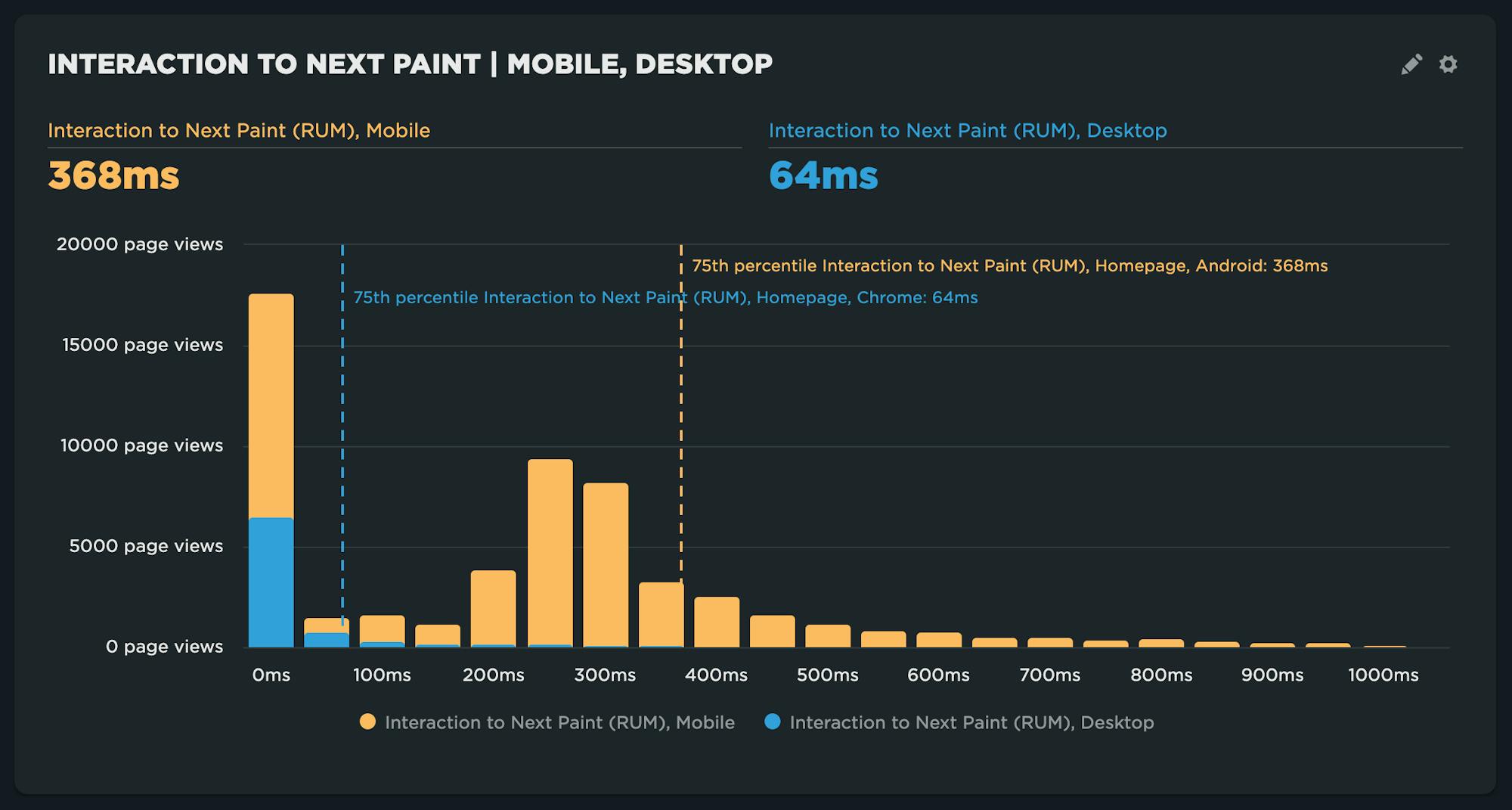

Conversely, in this example mobile is drastically slower than desktop when looking at Interaction to Next Paint (INP) at the 75th percentile.

Mobile INP really means Android INP... and Android devices are slooowww

Unfortunately, due to lack of Safari support for some performance metrics – including Core Web Vitals – iPhone performance continues to be a blind spot. When we look at Interaction to Next Paint data, we're really looking at data that's almost exclusively from Android devices.

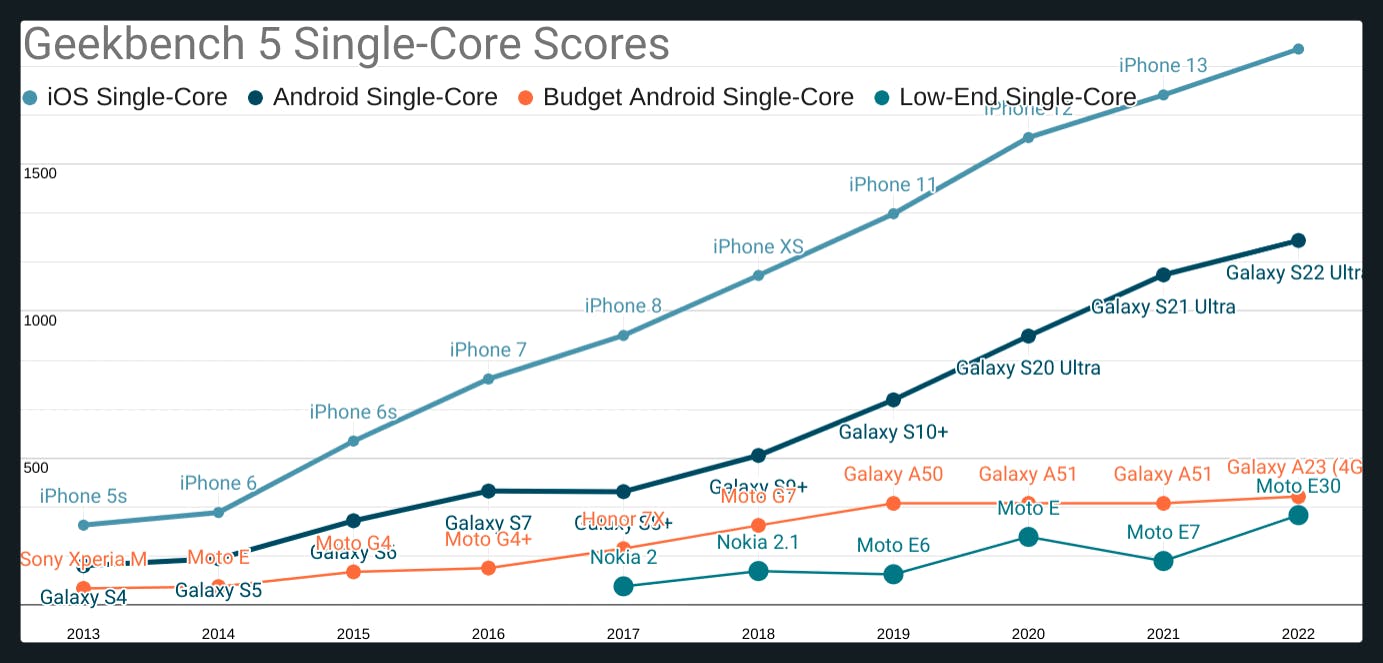

However, the performance gap is widening when it comes to processing power on Android devices. There is a growing number of high-end devices in use, especially in countries like the United States.

Credit: Alex Russell, The Performance Inequality Gap, 2023

As shown above, there is a wider range of performance characteristics for Android devices. This is a growing trend that may mask issues for those sites trying to serve users in mainland Europe or other markets where Android has more market share. Arguably, building the web to make it accessible for everyone should be the biggest area of focus for site owners. But is it?

Mobile interactions are different, thus mobile design is different

Are there common practices in mobile that could affect Interaction to Next Paint? It's not much of a stretch to think so.

- Touch targets have to be sized for a finger, which is far less precise than a mouse pointer on a large screen.

- Hamburger menus widely used in mobile often have elaborate animations compared to their static counterparts on desktop.

- Cookie consent seems to plague a lot of mobile sites we look at.

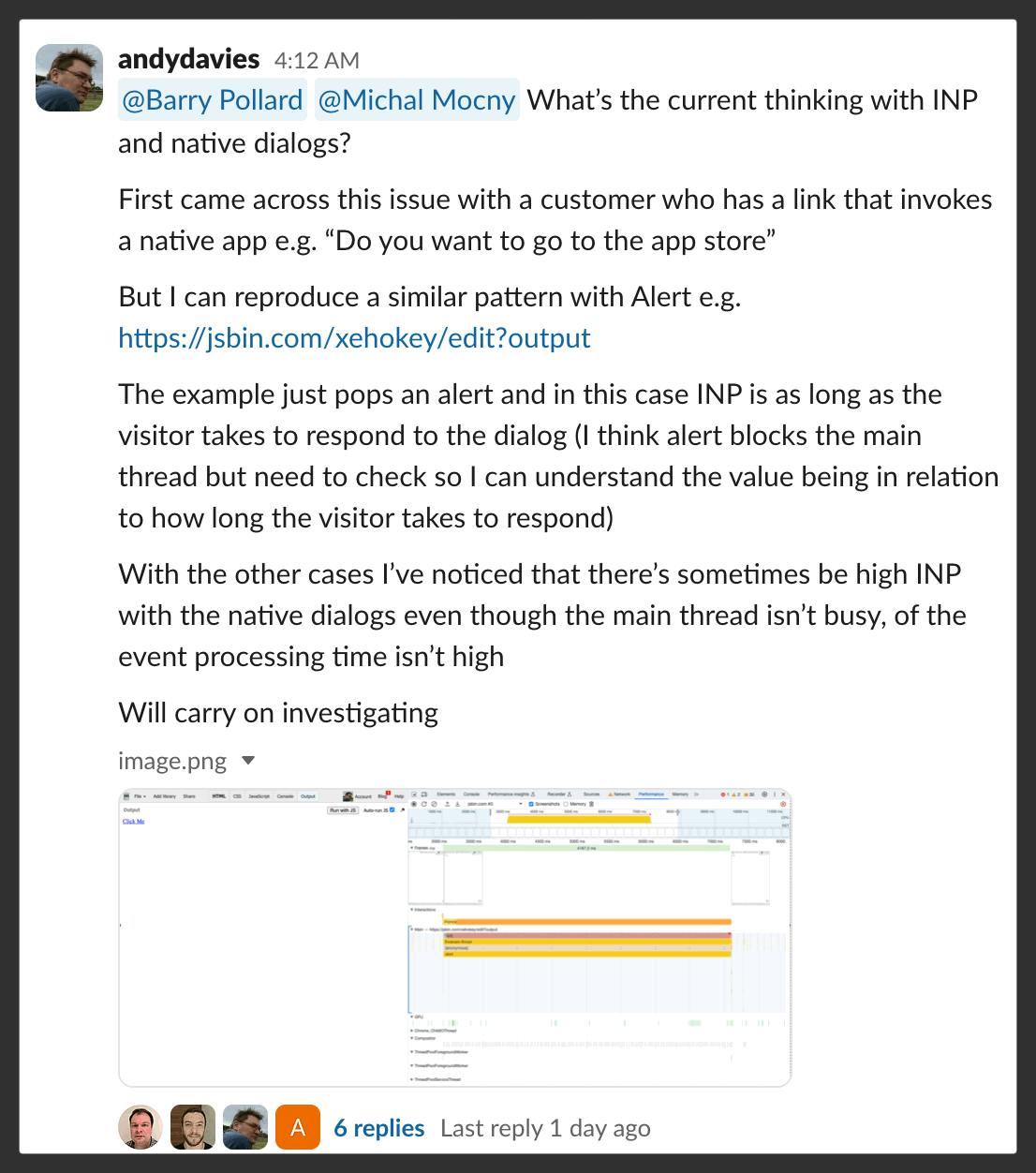

- Long, synchronous modal dialogs used on mobile to 'Open in App' are already known to have an impact on INP.

I recently came across this discussion in the Web Performance Slack related to the old issue of a 300ms delay introduced while waiting for a second tap. This was addressed by browsers for mobile-optimized sites, assuming that your viewport is properly set for your device. This issue may be an edge case, but are we sure?
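
As a rough way to check the "viewport is properly set" condition, the sketch below parses the `content` attribute of a viewport meta tag and reports whether it declares `width=device-width`, the setting browsers commonly use to decide that a page is mobile-optimized. The function names are hypothetical, and browsers may apply additional rules, so treat this as a sanity check rather than a definitive test.

```typescript
// Parses the `content` attribute of a viewport meta tag into key/value pairs.
// Keys and values are trimmed and lower-cased; both "," and ";" separators are accepted.
function parseViewport(content: string): Record<string, string> {
  const result: Record<string, string> = {};
  for (const part of content.split(/[,;]/)) {
    const [key, value = ""] = part.split("=").map((s) => s.trim().toLowerCase());
    if (key) result[key] = value;
  }
  return result;
}

// True when the viewport declares width=device-width.
function hasDeviceWidthViewport(content: string | null | undefined): boolean {
  return !!content && parseViewport(content)["width"] === "device-width";
}

console.log(hasDeviceWidthViewport("width=device-width, initial-scale=1")); // true
console.log(hasDeviceWidthViewport("width=980")); // false
```

In a browser, the input could come from `document.querySelector('meta[name="viewport"]')?.getAttribute("content")`.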

I can't help but think there are more mobile-specific issues that affect INP. They just haven't surfaced yet.

INP for mobile has a *stronger* correlation with business metrics than INP for desktop

I've been looking at a lot of data around Interaction to Next Paint and user behavior, and there definitely appears to be a correlation. I had started to convince myself that maybe it didn't matter as much on mobile. Thinking about my own behavior, I often assume things aren't working well on my phone and I just expect a bit more of a delay.

When it comes to conversion, unfortunately, the numbers tell a different story. In most of the cases I've looked at, INP for mobile seems to have an even stronger correlation with business metrics such as bounce rate and conversion than INP for desktop.

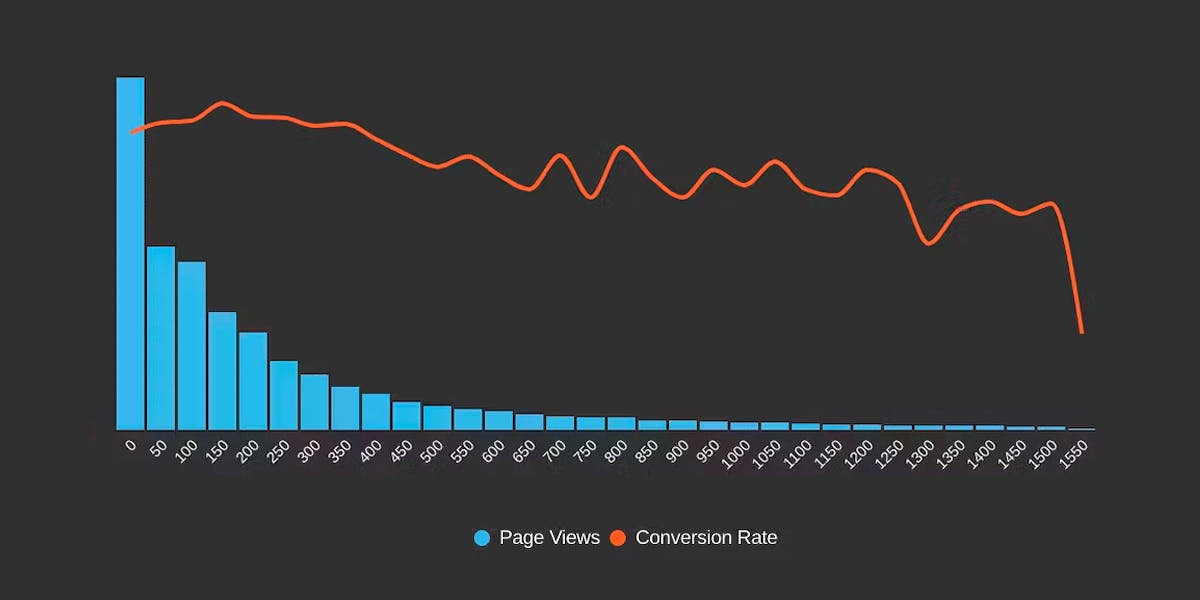

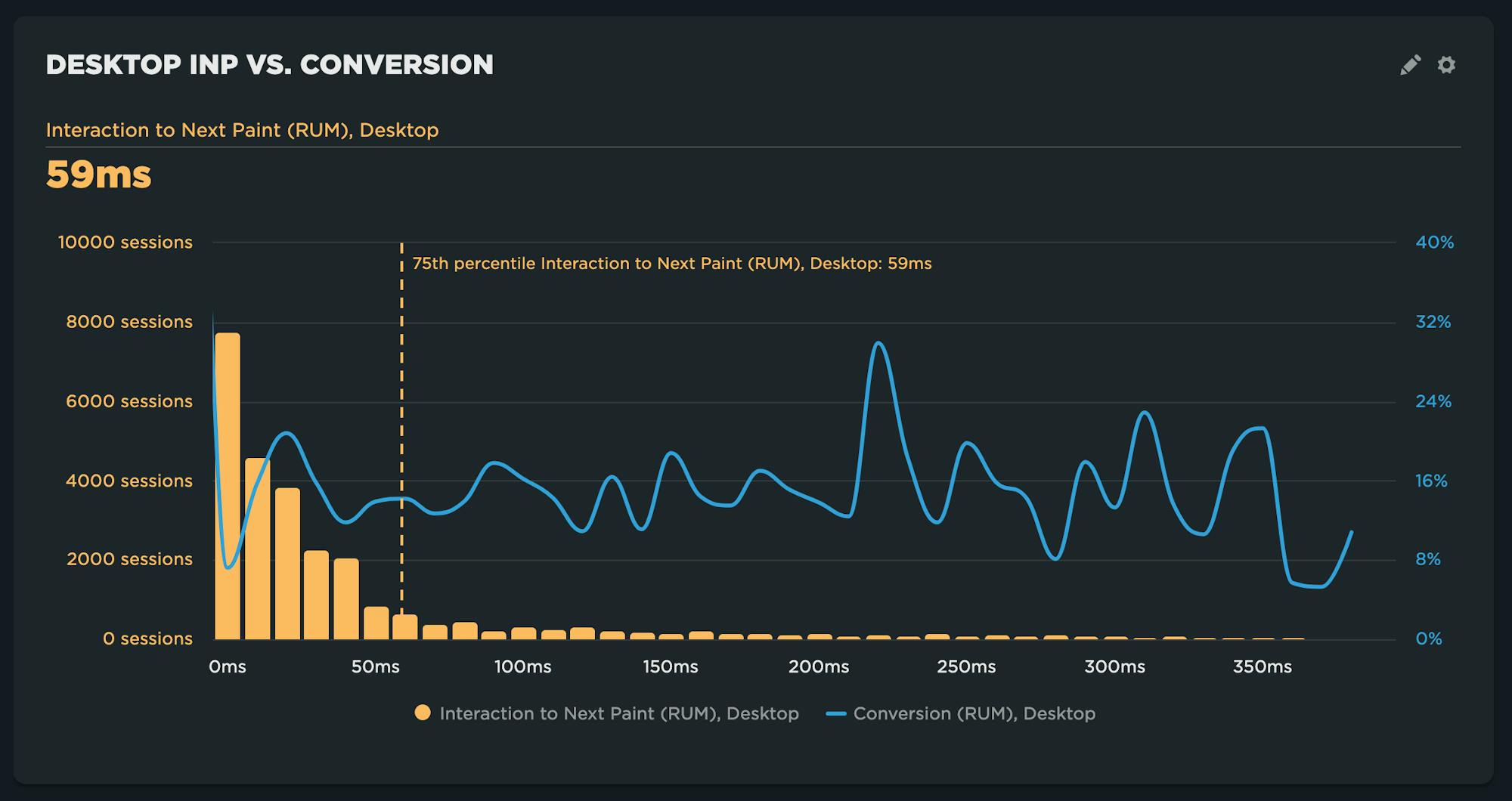

In this example, conversion shows little correlation with desktop INP, and very few sessions have an INP over 50ms.

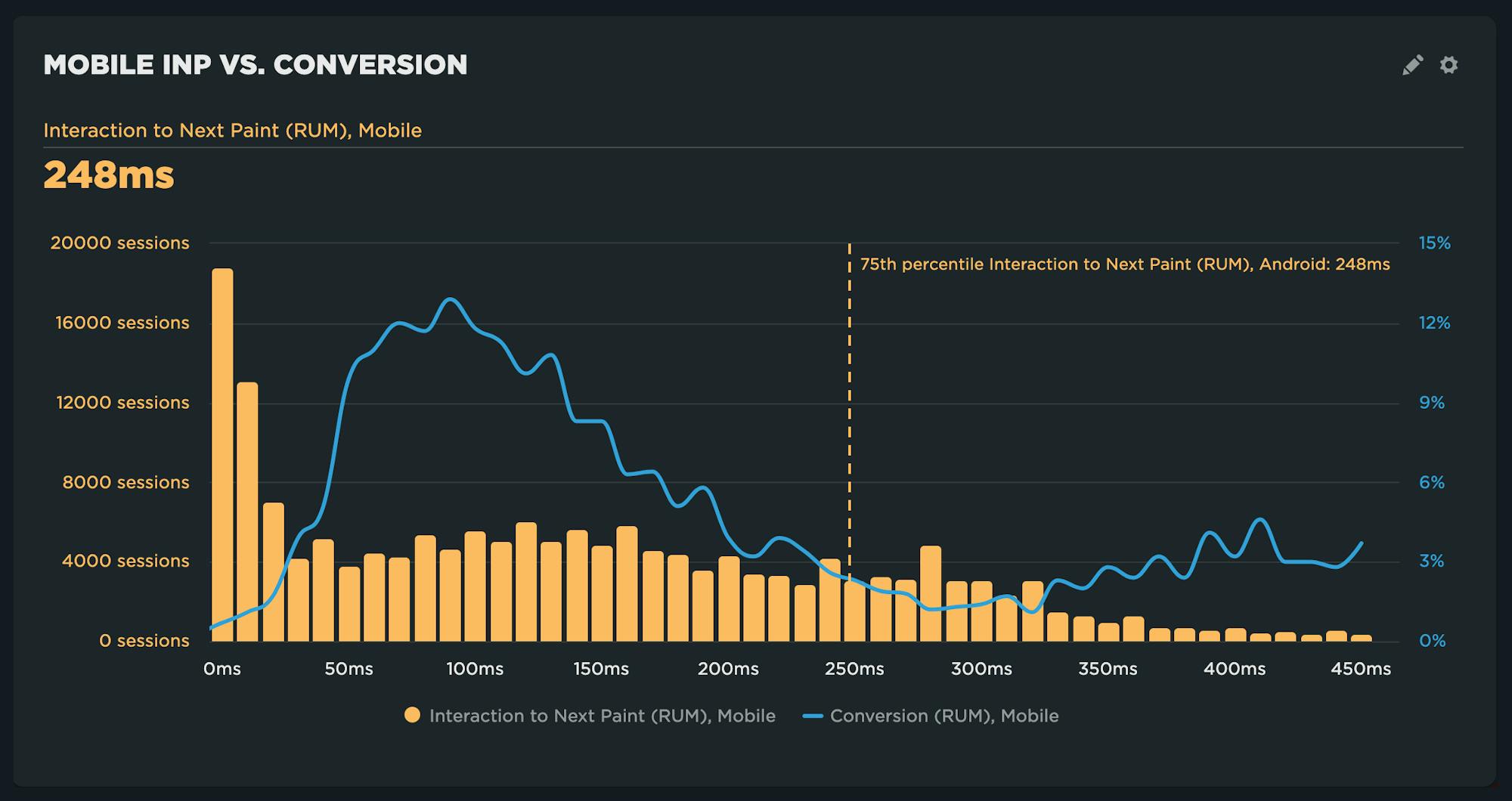

For the same site, conversion is heavily correlated with mobile INP, and more sessions fall in the "needs improvement" range.
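
If you want to look for this kind of relationship in your own data, one simple approach is to group sessions into INP buckets and compute the conversion rate for each bucket. The sketch below assumes a hypothetical `Session` record with an INP value and a conversion flag; it is an illustration of the idea, not a description of how any particular RUM product builds its correlation charts.

```typescript
interface Session {
  inpMs: number;      // INP recorded for the session, in milliseconds
  converted: boolean; // whether the session ended in a conversion
}

interface Bucket {
  fromMs: number;         // lower bound of the bucket (inclusive)
  sessions: number;       // number of sessions in the bucket
  conversionRate: number; // converted sessions / all sessions in the bucket
}

// Groups sessions into fixed-width INP buckets, sorted from fastest to slowest.
function conversionByInp(sessions: Session[], bucketMs = 100): Bucket[] {
  const counts = new Map<number, { total: number; converted: number }>();
  for (const s of sessions) {
    const from = Math.floor(s.inpMs / bucketMs) * bucketMs;
    const c = counts.get(from) ?? { total: 0, converted: 0 };
    c.total += 1;
    if (s.converted) c.converted += 1;
    counts.set(from, c);
  }
  return Array.from(counts.entries())
    .sort((a, b) => a[0] - b[0])
    .map(([fromMs, c]) => ({
      fromMs,
      sessions: c.total,
      conversionRate: c.converted / c.total,
    }));
}
```

A conversion rate that falls steadily as the buckets get slower is the pattern to look for. Buckets with very few sessions are noisy, so the session count is returned alongside each rate.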

What now?

Don't panic. If you've spent the last few years ignoring excessive JavaScript or main thread contention because your FID numbers gave you a false sense of security, you are probably not alone. It's never too late to start optimizing your pages to improve your Interaction to Next Paint results.

Some of these improvements may take a while, while others are more straightforward.

First, know your INP score

Some RUM providers, including SpeedCurve, are already measuring INP or have plans to soon. If you want to give our solution a try, sign up here for a free trial. In addition to tracking Core Web Vitals, you can set up alerts so you know when your metrics regress, and get a detailed list of performance recommendations so you know what to fix.
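
If you are curious what such a measurement involves, the sketch below approximates INP in the browser with the Event Timing API. It keeps the longest duration per interaction and then takes the worst interaction, skipping one outlier for every 50 interactions. The helper names are hypothetical, and because only interactions above the observer's duration threshold are seen, the result is an estimate rather than a match for any RUM product or for Google's own tooling.

```typescript
// Minimal shape of an Event Timing entry, declared locally so the sketch
// does not depend on a particular version of the DOM typings.
interface InteractionEntry {
  interactionId?: number; // 0 or undefined for events that are not part of an interaction
  duration: number;       // milliseconds from the input to the next paint
}

// Longest duration seen for each distinct interaction.
function worstPerInteraction(entries: InteractionEntry[]): number[] {
  const worst = new Map<number, number>();
  for (const e of entries) {
    if (!e.interactionId) continue;
    worst.set(e.interactionId, Math.max(worst.get(e.interactionId) ?? 0, e.duration));
  }
  return Array.from(worst.values());
}

// Approximates INP: the worst interaction, skipping one outlier per 50 interactions.
function estimateInp(durations: number[]): number | undefined {
  if (durations.length === 0) return undefined;
  const sorted = durations.slice().sort((a, b) => b - a);
  const skip = Math.min(sorted.length - 1, Math.floor(durations.length / 50));
  return sorted[skip];
}

// Browser-only wiring; does nothing where the Event Timing API is unavailable.
function observeInp(report: (inpMs: number) => void): void {
  const PO = (globalThis as any).PerformanceObserver;
  if (!PO || !(PO.supportedEntryTypes || []).includes("event")) return;
  const seen: InteractionEntry[] = [];
  new PO((list: any) => {
    seen.push(...list.getEntries());
    const inp = estimateInp(worstPerInteraction(seen));
    if (inp !== undefined) report(inp);
  }).observe({ type: "event", buffered: true, durationThreshold: 40 });
}

observeInp((inp) => console.log("INP so far (ms):", inp));
```

In production you would normally send the final value to your analytics endpoint when the page is hidden rather than logging it.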

Identify excessive JavaScript, Long Tasks, and inefficient event handlers

It's (still) the JavaScript. Most of the issues you'll find are somehow related to excessive JS on your pages, long-running tasks that block the main thread, or excessive execution of event handlers. This can come from third-party scripts as well as your origin.
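
One common way to reduce main-thread blocking is to break long-running work into smaller slices and yield between them, so the browser can respond to input and paint. The sketch below is a minimal illustration under stated assumptions: `processInChunks` and `yieldToMain` are hypothetical helpers, the 50ms default budget mirrors the Long Tasks threshold, and the injectable `now` clock exists only to make the behavior easy to test.

```typescript
// Resolves on a later macrotask, giving the browser a chance to handle
// pending input and paint before the next slice of work runs.
function yieldToMain(): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, 0));
}

// Processes `items` in order, yielding whenever the current slice has used
// at least `budgetMs`. Returns the number of times it yielded.
async function processInChunks<T>(
  items: T[],
  handle: (item: T) => void,
  budgetMs = 50,
  now: () => number = () => Date.now(),
): Promise<number> {
  let yields = 0;
  let sliceStart = now();
  for (const item of items) {
    handle(item);
    if (now() - sliceStart >= budgetMs) {
      await yieldToMain();
      yields++;
      sliceStart = now();
    }
  }
  return yields;
}
```

An event handler could do only the work needed for the next visual update synchronously, then hand the remainder to `processInChunks`. Third-party scripts are harder to restructure this way, which is one reason to audit them separately.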

Communicate early and often

If you are like a lot of our customers, Core Web Vitals have sparked a sudden interest in performance from all sides. When you find a good solution for tracking your INP, report any changes proactively and communicate your potential plans for the future.

Watch this space

At SpeedCurve, we are focused on making your real user monitoring (RUM) data actionable – so you can spend less time finding problems and more time fixing them. Our focus in the coming months is centred around RUM attribution for Core Web Vitals and improved lab (synthetic) testing to reproduce and troubleshoot INP as well as all of your Core Web Vitals. As always, your feedback is welcome!