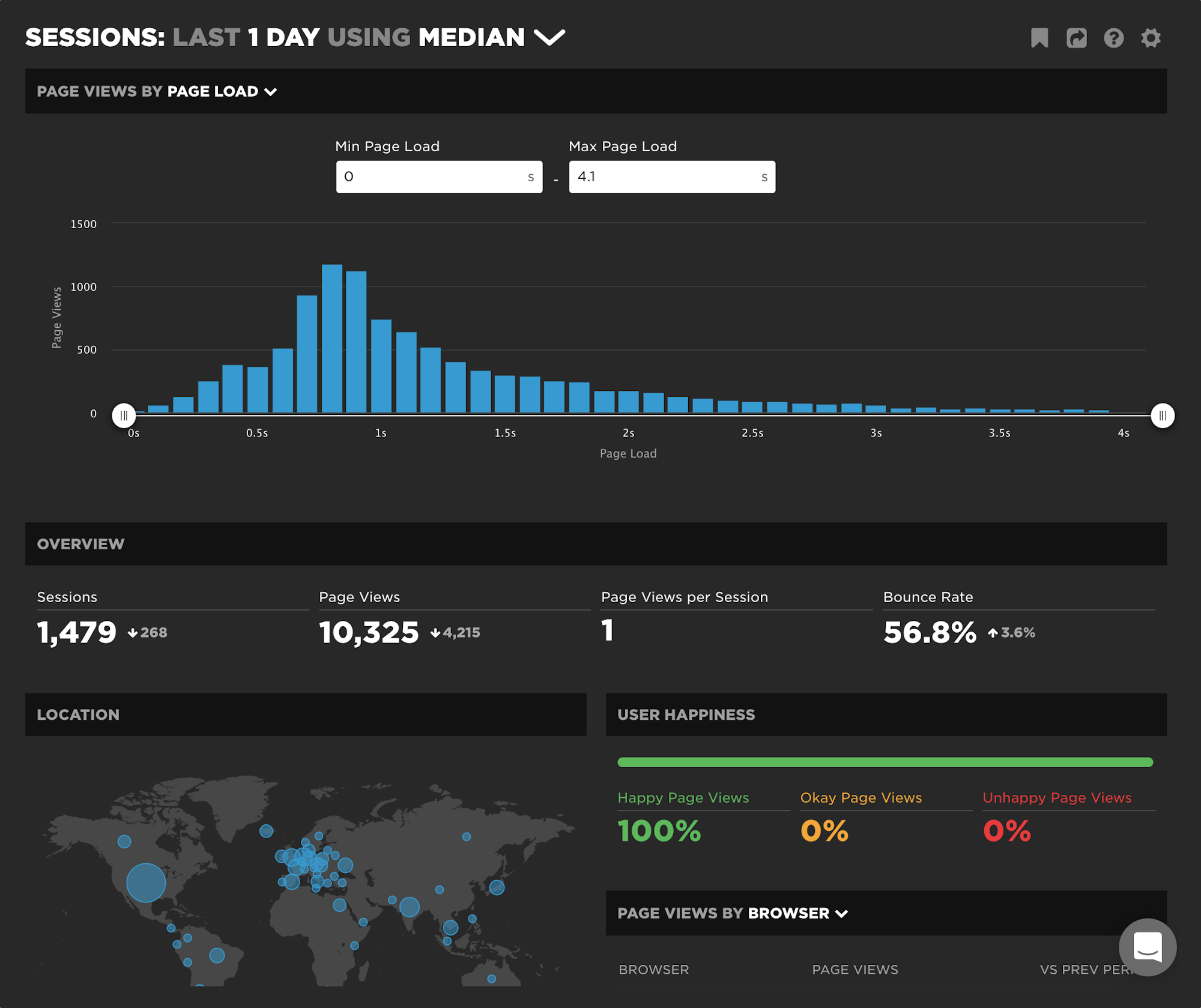

NEW: Exploring RUM sessions

If you want to understand how people actually experience your site, you need to monitor real users. The data we get from real user monitoring (RUM) is extremely useful when trying to get a grasp on performance. Not only does it serve as the source of truth for your most important budgets and KPIs, it also helps us understand that performance is a broad distribution that encompasses many different cohorts of users.

While real user monitoring gives us the opportunity for unparalleled insight into user experience, the biggest challenge with RUM data is that there's so much of it. Navigating through all this data has typically been done by peeling back one layer of information at a time, and it often proves difficult to identify the root cause when we see a change:

"What happened here?"

"Did the last release cause a drop in performance?"

"How can I drill down from here to see what's going on?"

"Is the issue confined to a specific region? Browser? Page?"

Today we're excited to release a new capability – your RUM Sessions dashboard – which allows you to drill into a dataset and explore those sessions that occurred within a given span of time.

UPDATE: Bookmark and compare synthetic tests

One of the huge benefits of tracking web performance over time is the ability to see trends and compare metrics. Last year we added new functionality that makes it easy for you to bookmark and compare different synthetic tests in your test history. We recently added some additional enhancements to make comparing tests even easier.

With the 'Compare' feature, you can generate side-by-side comparisons that let you not only spot regressions, but easily identify what caused them:

- Compare the same page at different points in time

- Compare two versions of the same page – for example, one with ads and one without

- Understand which metrics got better or worse

- Identify which common requests got bigger/smaller or slower/faster

- Spot any new or unique requests – such as JavaScript and images – and see their impact on performance

Along the way, we've also made it much more intuitive for you to drill down into your detailed synthetic test results. Let's take a look...

NEW: Lighthouse v8 support!

After Google's announcement about Lighthouse 8 this past month, we have updated our test agents. We've gotten a lot of questions about what has changed and the impact on your performance metrics, so here's a summary.

Hello from Europe!

I’m delighted to be joining some of my favourite web performance people at SpeedCurve!

Who am I?

I’ve been a full-time web performance consultant for around nine years. For about half that time I worked freelance, and the other half for Site Confidence / NCC Group in the UK.

My journey into performance started in the late 1990s, while I was working for an elearning provider and discovering the challenges of delivering rich content over the internet. To overcome some of these challenges, we built our own Java-based player, complete with caching, content compression, and even bandwidth detection so it could switch between video, audio, and text versions of a course depending on network speed.

Ultimately the business didn’t survive the dotcom bust, but it lit a spark...

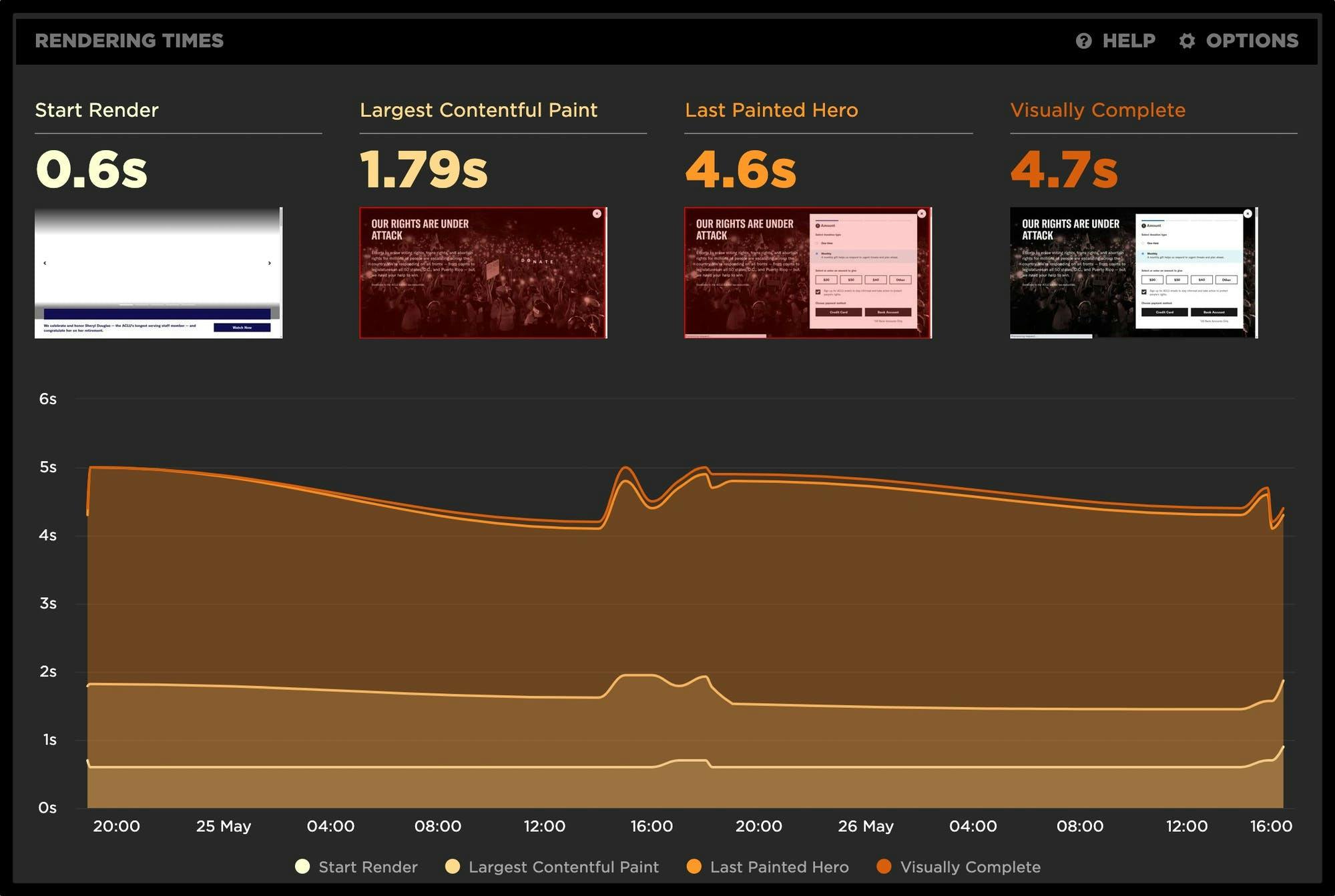

NEW! Chrome Beta and Canary support & LCP element highlighting

Phew! Between the fast-paced release cycle for Chrome and the rapid evolution of Core Web Vitals, the month of May has been a busy one here at SpeedCurve. With that, we are excited to bring you some new features and enhancements to help you stay focused and ahead of the game as we move into summer.

Read on to learn more about:

- Chrome Beta and Canary support

- Largest Contentful Paint (LCP) element highlighting

- Key rendering times

Web Performance for Product Managers

I love conversations about performance, and I'm fortunate enough to have them a lot. The audience varies. A lot of the time it’s a front-end developer or head of engineering, but more and more I’m finding myself in great conversations with product leaders. As great as these discussions can be, I often walk away feeling like there was a better way to streamline the conversation while still conveying my passion for bringing fellow PMs into the world of webperf. I hope this post can serve that purpose and cover a few of the fundamental areas of web performance that I’ve found to be most useful while honing the craft of product management.

So, whether you are a PM or not, if you're new to performance I've put together a few concepts and guidelines you can refer to in order to ramp up quickly. This post covers:

- What makes a page slow?

- How is performance measured?

- What do the different metrics mean?

- Understanding percentiles and how to use them

- How to communicate performance to different stakeholders

Let's get started...

Joining the SpeedCurve team

After all the twists and turns of 2020, this unprecedented year ended with the pleasure of joining the SpeedCurve team and helping to build the tool trusted by so many brands around the world that are striving to improve their customer experience.

As a developer I've always been fascinated by the web and how it enriches people's lives, and now I am jumping into the very essence of it – how it renders, performs and behaves! Thanks to SpeedCurve for this opportunity. I am so excited to work alongside the veterans of the web performance industry (and just plain talented people), as well as to be closer to the performance community.

An Opinionated Guide to Performance Budgets

Performance budgets are one of those ideas that everyone gets behind conceptually, but then struggles to put into practice – and for very good reason. Web pages are unbelievably complex, and there are hundreds of different metrics available to track. If you're just getting started with performance budgets – or if you've been using them for a while and want to validate your work – this post is for you.

What is a performance budget?

A performance budget is a threshold that you apply to the metrics you care about the most. You can then configure your monitoring tools to send you alerts – or even break the build, if you're testing in your staging environment – when your budgets are violated.
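To make the idea concrete, here is a minimal sketch of a budget check. It is not SpeedCurve's implementation: the `Budget` shape, the metric names (`lcp_ms`, `js_kb`), and the threshold values are all assumptions chosen for illustration.

```typescript
// Hypothetical shape for a budget: a metric name and its maximum allowed value.
interface Budget {
  metric: string;
  threshold: number; // same unit as the measured value
}

interface Violation {
  metric: string;
  threshold: number;
  actual: number;
}

// Returns the budgets whose measured value exceeds the threshold.
// Metrics with no measurement are skipped rather than treated as failures.
function findViolations(
  budgets: Budget[],
  measurements: Record<string, number>
): Violation[] {
  const violations: Violation[] = [];
  for (const { metric, threshold } of budgets) {
    const actual = measurements[metric];
    if (actual !== undefined && actual > threshold) {
      violations.push({ metric, threshold, actual });
    }
  }
  return violations;
}

// Illustrative values only; pick metrics and thresholds that suit your site.
const budgets: Budget[] = [
  { metric: "lcp_ms", threshold: 2500 },
  { metric: "js_kb", threshold: 300 },
];
const violations = findViolations(budgets, { lcp_ms: 3100, js_kb: 280 });

// In a CI step, a non-empty result could be used to fail the build.
const buildShouldFail = violations.length > 0;
```

In a staging pipeline, a check like this might run after each synthetic test and either send an alert or exit with a non-zero status when `buildShouldFail` is true.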

Understanding the basic premise of performance budgets is pretty easy. The tricky part comes when you try to put them into practice. This is when you run into three important questions:

- Which metrics should you focus on?

- What should your budget thresholds be?

- How do you stay on top of your budgets?

Depending on whom you ask, you could get very different answers to these questions. Here are mine.

New! Vitals Dashboard

Getting up to speed on Core Web Vitals seems to be at the top of everyone's to-do list these days. Just in time for the holidays, we are happy to bring you our new Vitals dashboard to help you get a huge jumpstart.

We love to visualize performance data (in case you haven't heard). We love it even more when we can be true to one of our biggest motivations at SpeedCurve: leveraging both RUM and Synthetic data to help you take action on what matters most.

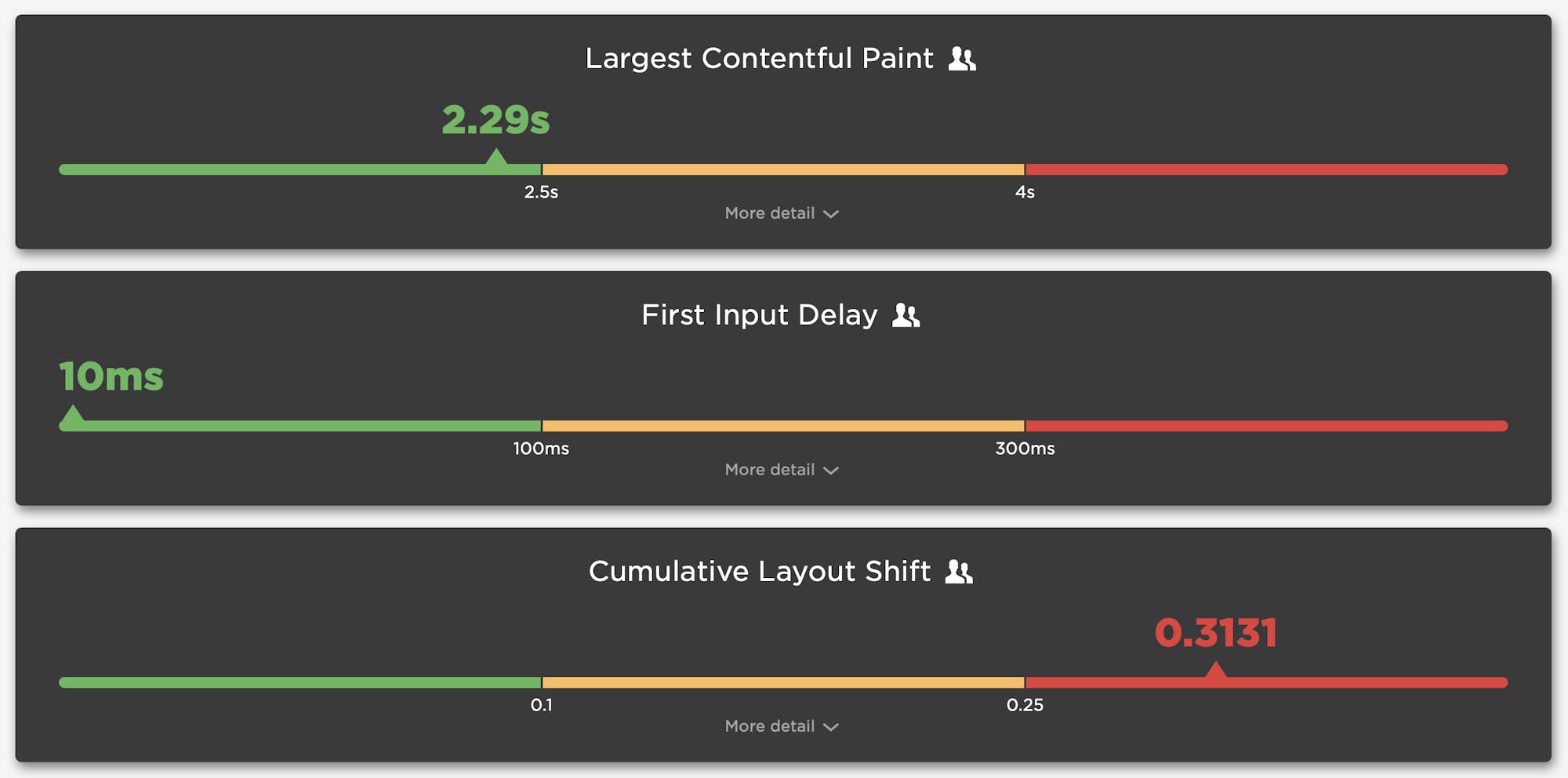

First Input Delay: How vital is it?

We’ve been pretty vocal about Core Web Vitals since Google announced this initiative last spring. We love the idea of having a lean, shared set of metrics that we can all rally around – not to mention having a broader conversation about web performance that includes teams throughout an organization.

For many site owners, the increased focus on Core Web Vitals is driven by the fact that Google will be including them as a factor in search ranking in May 2021. Other folks are more interested in distilling the extremely large barrel of performance metrics into an easily digested trinity of guidelines to follow in order to provide a delightful user experience.

We’ve had some time to evaluate and explore these metrics, and we're committed to transparently discussing their pros and cons.

The purpose of this post is to explore First Input Delay (FID). This metric is unique among the three Core Web Vitals in that it can only be measured using real user monitoring (RUM), while the other two (Largest Contentful Paint and Cumulative Layout Shift) can be measured using both RUM and synthetic monitoring.
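As a rough illustration of why FID needs a real user, the sketch below computes it from a browser `first-input` performance entry: the delay is the gap between the moment of interaction (`startTime`) and the moment the browser could begin running event handlers (`processingStart`). The `FirstInputEntry` interface is a simplified stand-in for the browser's `PerformanceEventTiming`, and `report` in the comment is a hypothetical callback.

```typescript
// Minimal subset of the fields on a "first-input" PerformanceEventTiming entry.
interface FirstInputEntry {
  startTime: number;       // when the user interacted (ms since navigation start)
  processingStart: number; // when the browser could begin running handlers (ms)
}

// FID is the delay between the interaction and the start of event processing.
function firstInputDelay(entry: FirstInputEntry): number {
  return entry.processingStart - entry.startTime;
}

// In a browser, entries could be collected roughly like this:
//   new PerformanceObserver((list) => {
//     for (const e of list.getEntries()) {
//       report(firstInputDelay(e as PerformanceEventTiming));
//     }
//   }).observe({ type: "first-input", buffered: true });
// `report` is a hypothetical function that sends the value to a RUM endpoint.

// Sample values for illustration: the user clicked at 1200 ms, and the main
// thread was free to start handling the click at 1260 ms.
const sampleDelay = firstInputDelay({ startTime: 1200, processingStart: 1260 });
```

Because no entry exists until someone actually clicks, taps, or presses a key, a synthetic test with no user interaction has nothing to measure.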

In this post we'll cover:

- What is FID?

- What does FID look like across the web?

- The importance of measuring user interactions

- How JavaScript affects user behavior

- Suggestions for how you can look at FID in relation to your other key metrics

Let's dig in!