NEW: Vitals dashboard updates and filter improvements

Our development team recently emerged from an offsite with two wonderful improvements to SpeedCurve. The team tackled a project to unify our filtering, and then they over-delivered with a re-Vital-ized dashboard that I'm finding to be one of the most useful views in the product.

Take a look at the recent updates – and a big thank you to our amazing team for putting so much love into SpeedCurve!

Performance Hero: Sia Karamalegos

Sia Karamalegos is a web performance diva we've come to know through her many articles, workshops, conference talks, and her stint as an MC at performance.now() last year – not to mention her role in speeding up a pretty big slice of the internet!

Sia is kind, funny, smart as heck, and always down to talk web performance (especially if you have a Shopify site). For those reasons and many more, we are excited to share that Sia is this month's Performance Hero!

How to provide better attribution for your RUM metrics

Here's a detailed walkthrough showing how to make more meaningful and intuitive attributions for your RUM metrics – which makes it much easier for you to zero in on your performance issues.

Real user monitoring (RUM) has always been incredibly important for any organization focused on performance. RUM – also known as field testing – captures performance metrics as real users browse your website and helps you understand how actual users experience your site. But it's only in the last few years that RUM data has started to become more actionable, allowing you to diagnose what is making your pages slower or less usable for your visitors.

Making newer RUM metrics – such as Core Web Vitals – more actionable has been a significant priority for standards bodies. A big part of this shift has been better attribution, so we can tell what's actually going on when RUM metrics change.

Core Web Vitals metrics – like Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS) – all have some level of attribution associated with them, which helps you identify what exactly is triggering the metric. The Long Animation Frames (LoAF) API is all about attribution, helping you zero in on which scripts are causing issues.

Having this attribution available, particularly when paired with meaningful subparts, can help us to quickly identify which specific components we should prioritize in our optimization work.

We can help make this attribution even more valuable by ensuring that key components in our page have meaningful, semantic attributes attached to them.
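As a rough illustration of why semantic attributes help, here is a minimal sketch of how a RUM script might pick a readable label for the element behind a metric. It is not SpeedCurve's actual selection logic. The `attributionLabel` function, the `ElementLike` interface, the `data-component` attribute name, and the priority order are all assumptions made for this example; `elementtiming` is the standard Element Timing attribute.

```typescript
// Minimal shape of a DOM element, so the sketch also runs outside a browser.
// A real HTMLElement satisfies this interface structurally.
interface ElementLike {
  tagName: string;
  id: string;
  getAttribute(name: string): string | null;
}

// Returns the most meaningful label available for an element.
// The priority order below is an illustrative assumption.
function attributionLabel(el: ElementLike | null): string {
  if (el === null) return "(unknown element)";

  // Standard Element Timing attribute, e.g. <img elementtiming="hero-image">.
  const timingName = el.getAttribute("elementtiming");
  if (timingName) return timingName;

  // Hypothetical custom attribute, e.g. <div data-component="product-carousel">.
  const component = el.getAttribute("data-component");
  if (component) return component;

  // Fall back to the id, then to the bare tag name.
  if (el.id) return `#${el.id}`;
  return el.tagName.toLowerCase();
}
```

In a browser, you could pass the `element` property of a `largest-contentful-paint` entry to this function. An element with no meaningful attributes falls through to a label like `div`, which tells you very little; one tagged with `elementtiming="hero-image"` is reported by name.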

NEW: Paper cuts update!

Paper cut: (literal) A wound caused by a piece of paper or any thin, sharp material that can slice through skin. (figurative) A trivial-seeming problem that causes a surprising amount of pain.

We all love big showy features, and this year we've released our share of those. But sometimes it's the small stuff that can make a big difference. We recently took a look at our backlog of smaller requests from our customers – which we labelled "paper cuts" – and decided to dedicate time to tackle them.

Are they all glamorous changes? Maybe not, though some are pretty exciting.

Are they worthy of a press release? Ha! We don't even know how to issue a press release.

Will they make your day better and put a smile on your face? We sure hope so.

In total, our wonderful development team tackled more than 30 paper cuts! These include:

- Exciting new chart types for Core Web Vitals and User Happiness

- Filter RUM data by region

- Create a set of tests for one or multiple sites or custom URLs

- Test directly from your site settings when saving changes

- Usability improvements

- Better messaging for test failures

- And more!

Keep scrolling for an overview of some of the highlights.

NEW: Improving how we collect RUM data

We've made improvements to how we collect RUM data. Most SpeedCurve users won't see significant changes to Core Web Vitals or other metrics, but for a small number of users some metrics may increase.

This post covers:

- What the changes are

- How the changes can affect Core Web Vitals and other metrics

- Why we are making the changes now

15 page speed optimizations that sites ignore (at their own risk)

A recent analysis of twenty leading websites found a surprising number of page speed optimizations that sites are not taking advantage of – to the detriment of their performance metrics, and more importantly, to the detriment of their users and ultimately their business.

I spend a lot of time looking at waterfall charts and web performance audits. I recently investigated the test results for twenty top sites and discovered that many of them are not taking advantage of optimizations – including some fairly easy low-hanging fruit – that could make their pages faster, their users happier, and their businesses more successful.

More on this below, but first, a few important reminders about the impact of page speed on businesses...

Performance Hero: Michelle Vu

Michelle Vu is one of the most knowledgeable, helpful, kind people you could ever hope to meet. As a founding member of Pinterest's performance team, she has created an incredibly strong culture of performance throughout Pinterest. She's also pioneered important custom metrics and practices, like Pinner Wait Time and performance budgets. We are super excited to share that Michelle is this month's Performance Hero!

New! Web Performance Guide

Our customers often tell us how much they appreciate the user-friendliness of the articles we create for them, so we recently decided to make them available to everyone (not just SpeedCurve users). Introducing the Web Performance Guide!

The Web Performance Guide is – as its name suggests – a collection of articles we've been writing over the years to answer the most common questions we field about performance topics like site speed, why it matters, how it's measured, website monitoring tools, metrics, analytics, and optimization techniques.

You'll find the articles grouped into these topics...

Performance Hero: Estela Franco

Continuing our series of Performance Heroes, this month we celebrate Estela Franco! Estela is a passionate web performance and technical SEO specialist with more than ten years of contributing to our community. She loves to talk and share about web performance optimization, technical SEO, JavaScript, and Jamstack whenever she can.

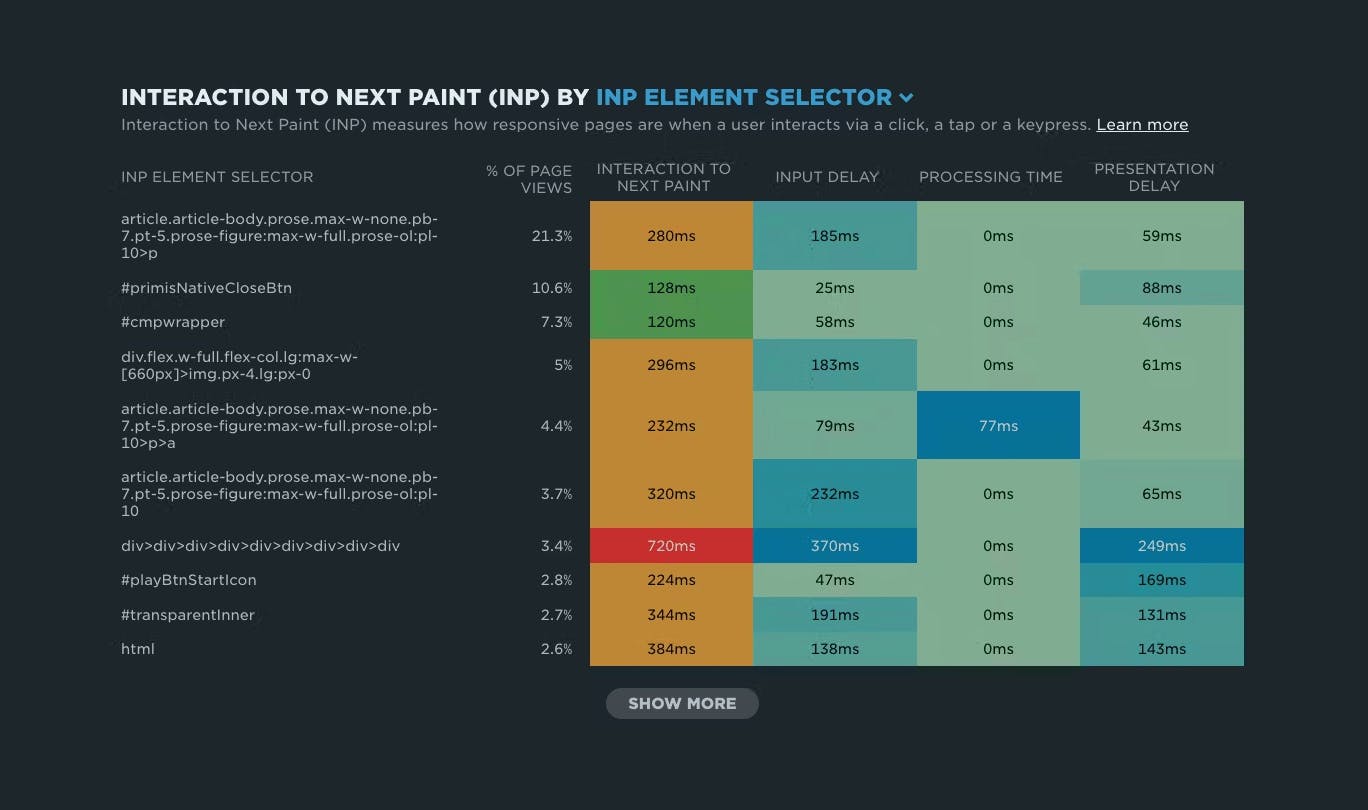

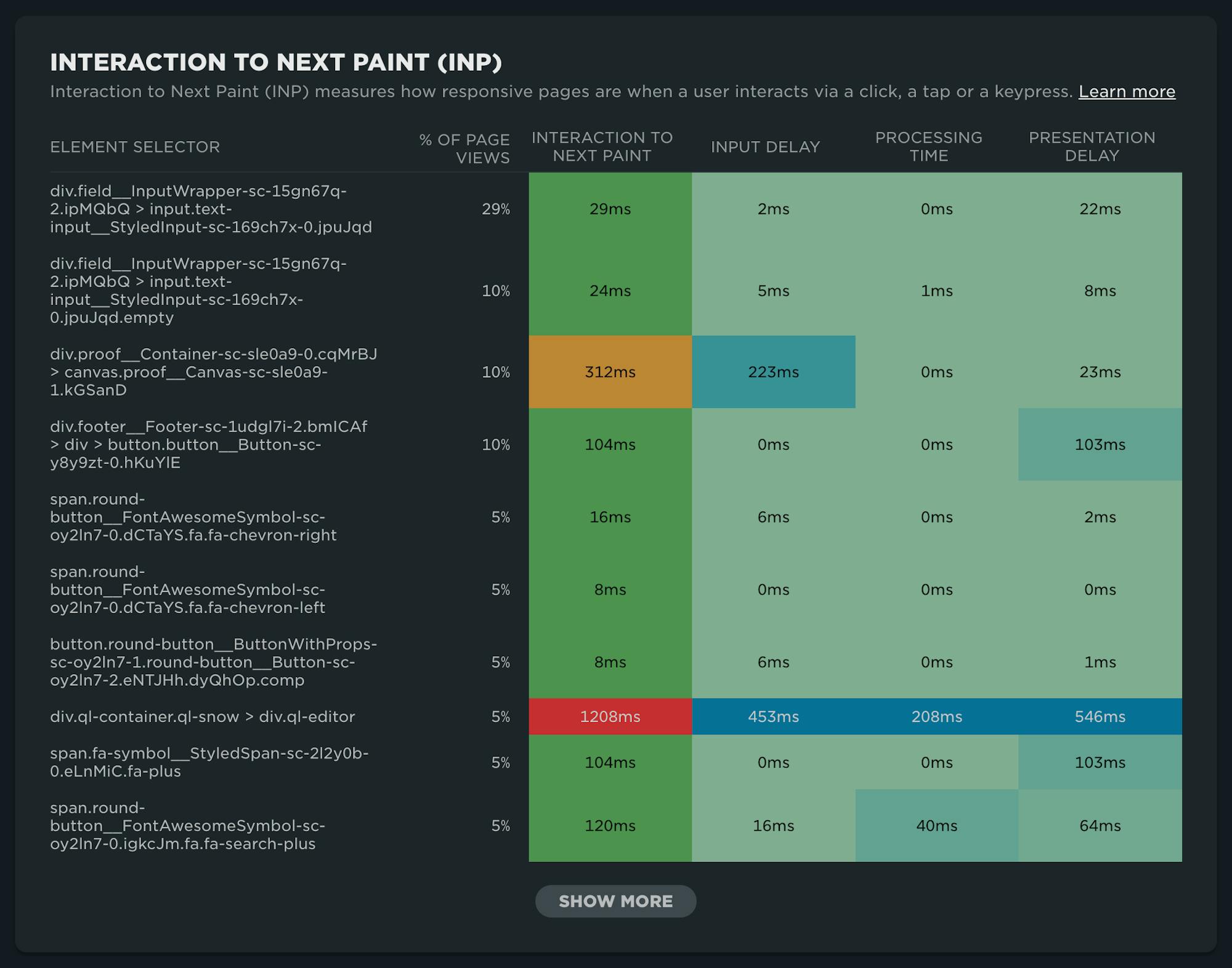

NEW: RUM attribution and subparts for Interaction to Next Paint!

Now it's even easier to find and fix Interaction to Next Paint issues and improve your Core Web Vitals.

Our newest release continues our theme of making your RUM data even more actionable. In addition to advanced settings, navigation types, and page attributes, we've just released more diagnostic detail for the latest flavor in Core Web Vitals: Interaction to Next Paint (INP).

This post covers:

- Element attribution for INP

- A breakdown of where time is spent within INP, leveraging subparts

- How to use this information to find and fix INP issues

- A look ahead at RUM diagnostics at SpeedCurve
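The INP subparts mentioned above are commonly described as input delay, processing time, and presentation delay. The sketch below shows one way to derive them from the timestamps on an Event Timing entry. It is an illustration rather than SpeedCurve's implementation; the `inpSubparts` function and the `EventTimingLike` interface are names made up for this example, while the four fields mirror those on the browser's `PerformanceEventTiming` entries.

```typescript
// The fields of a PerformanceEventTiming entry that the breakdown needs.
interface EventTimingLike {
  startTime: number;       // when the user interaction occurred
  processingStart: number; // when event handlers began running
  processingEnd: number;   // when event handlers finished
  duration: number;        // time from startTime to the next paint
}

interface InpSubparts {
  inputDelay: number;
  processingTime: number;
  presentationDelay: number;
}

// Splits one interaction's duration into its three subparts (milliseconds).
function inpSubparts(entry: EventTimingLike): InpSubparts {
  const inputDelay = entry.processingStart - entry.startTime;
  const processingTime = entry.processingEnd - entry.processingStart;
  // Browsers round `duration` to 8 ms, so this value is approximate;
  // clamp at zero in case rounding pushes it negative.
  const presentationDelay = Math.max(
    0,
    entry.startTime + entry.duration - entry.processingEnd
  );
  return { inputDelay, processingTime, presentationDelay };
}

// Example with made-up timestamps.
const sample = inpSubparts({
  startTime: 100,
  processingStart: 120,
  processingEnd: 180,
  duration: 104,
});
console.log(sample);
```

A long input delay usually points to other work blocking the main thread, a long processing time points to the event handlers themselves, and a long presentation delay points to rendering work after the handlers finish.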